Question:

In this exercise, we will derive the graph convolutional networks shown in Sec. 10.5.2 from spectral graph signal processing perspective. The classic convolution on graphs can be computed by y=Ugθ(Λ)UTx where x∈RN is the input graph signal (i.e., a vector of scalars that denote feature values of all nodes at a certain feature dimension). Matrices U and Λ denote the eigen-decomposition of the normalized graph Laplacian: L=I−D−12AD−12=UΛUT. gθ(Λ) is the function of eigenvalues and is often used as the filter function.

a. If we directly use the classic graph convolution formula where gθ(Λ)=diag(θ) and θ∈ RN to compute the output y, what are the potential disadvantages?

b. Suppose we use K-polynomial filter gθ(Λ)=∑Kk=0θkΛk. What are the benefits, compared to the above filter gθ(Λ)=diag(θ) ?

c. By applying the K th order Chebyshev polynomial approximation on the filter gθ(Λ)= ∑Kk=0θkΛk, the filter function can be written as gθ′(Λ)≈∑Kk=0θ′kTk(~Λ) with a rescaled ~Λ=2λmaxΛ−I, where λmax denotes the largest eigenvalue of L, and θ′∈RK denotes the Chebyshev coefficients. The Chebyshev polynomials can be computed recursively by Tk(z)=2zTk−1(z)−Tk−2(z) with T0(z)=1 and T1(z)=

Transcribed Image Text:

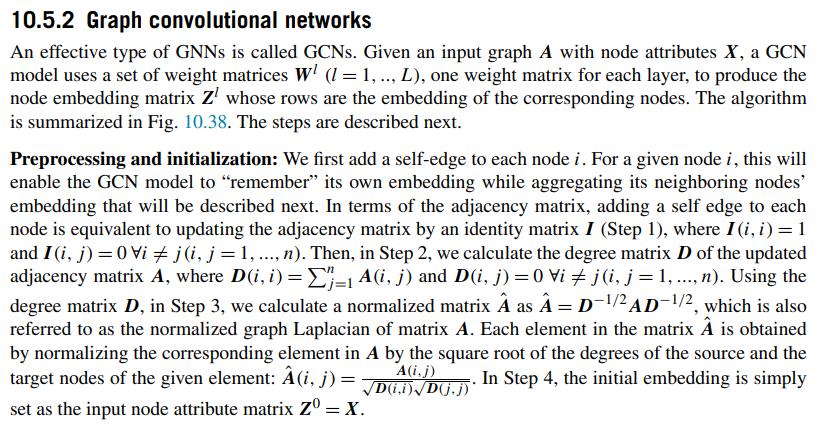

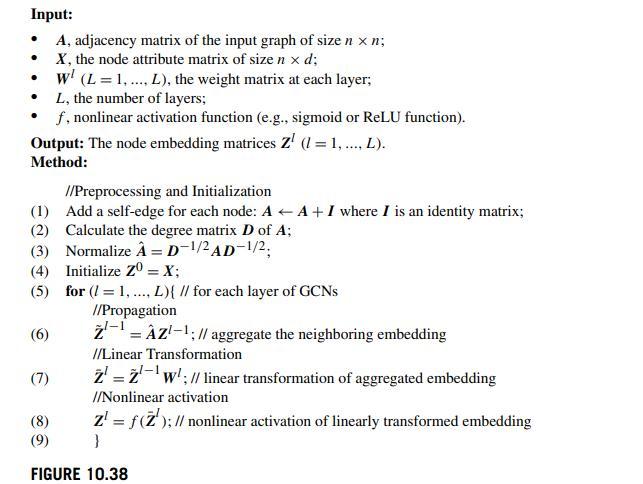

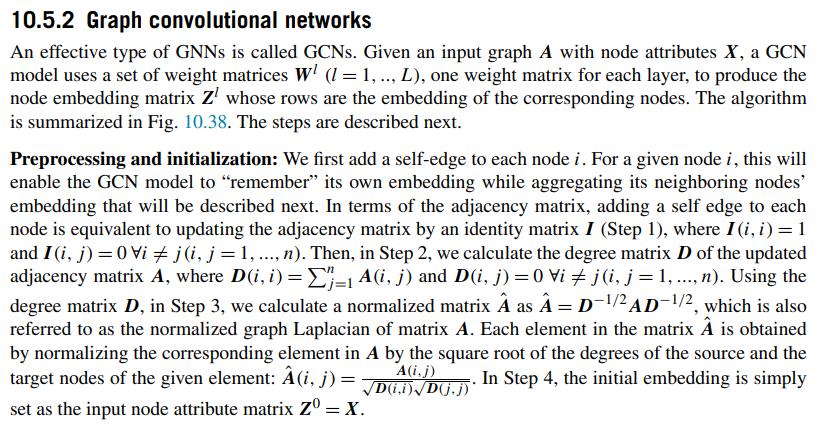

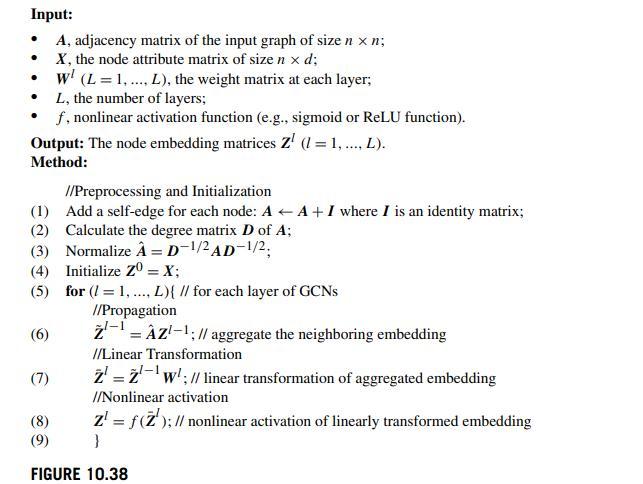

10.5.2 Graph convolutional networks An effective type of GNNs is called GCNs. Given an input graph A with node attributes X, a GCN model uses a set of weight matrices WI (l= 1,.., L), one weight matrix for each layer, to produce the node embedding matrix Z whose rows are the embedding of the corresponding nodes. The algorithm is summarized in Fig. 10.38. The steps are described next. Preprocessing and initialization: We first add a self-edge to each node i. For a given node i, this will enable the GCN model to "remember" its own embedding while aggregating its neighboring nodes' embedding that will be described next. In terms of the adjacency matrix, adding a self edge to each node is equivalent to updating the adjacency matrix by an identity matrix I (Step 1), where I (i, i) = 1 and I (i, j) = 0 Vi # j (i, j = 1, ..., n). Then, in Step 2, we calculate the degree matrix D of the updated adjacency matrix A, where D(i, i) =E=1 A(i, j) and D(i, j)=0 Vi j (i, j = 1, ..., n). Using the degree matrix D, in Step 3, we calculate a normalized matrix as = D-/2 AD-1/2, which is also referred to as the normalized graph Laplacian of matrix A. Each element in the matrix is obtained by normalizing the corresponding element in A by the square root of the degrees of the source and the A(i, j) target nodes of the given element: (i, j): D(ii) Dj.j)* D.). In Step 4, the initial embedding is simply = set as the input node attribute matrix Z = X.