Recall that in lectures we showed that the Logistic Regression for binary classification boils down to...

Question:

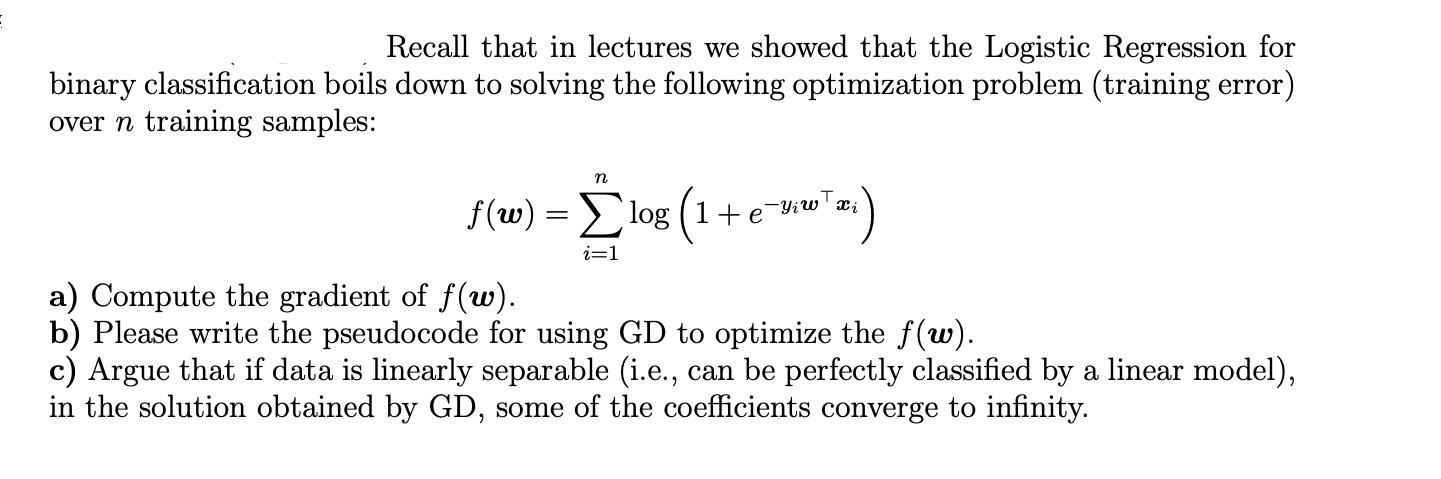

Recall that in lectures we showed that the Logistic Regression for binary classification boils down to solving the following optimization problem (training error) over $n$ training samples, with labels $y_i \in \{-1, +1\}$:

$$f(w) = \sum_{i=1}^{n} \log\left(1 + e^{-y_i w^\top x_i}\right)$$

a) Compute the gradient of $f(w)$.
b) Write the pseudocode for using gradient descent (GD) to optimize $f(w)$.
c) Argue that if the data are linearly separable (i.e., can be perfectly classified by a linear model), some of the coefficients in the solution obtained by GD diverge to infinity.

Expert Answer:

a) Compute the gradient of $f(w)$. The gradient of $f(w)$ is

$$\nabla f(w) = -\sum_{i=1}^{n} \frac{y_i\, x_i}{1 + e^{y_i w^\top x_i}}$$

b) Write ...
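For part (a), the chain rule applied to a single term of $f(w)$ gives the gradient directly. The derivation below assumes labels $y_i \in \{-1, +1\}$, which is what the form of $f(w)$ implies:

$$
\begin{aligned}
\frac{\partial}{\partial w}\log\left(1 + e^{-y_i w^\top x_i}\right)
&= \frac{e^{-y_i w^\top x_i}}{1 + e^{-y_i w^\top x_i}}\,\left(-y_i x_i\right)
= -\frac{y_i\, x_i}{1 + e^{y_i w^\top x_i}}, \\
\nabla f(w) &= -\sum_{i=1}^{n} \frac{y_i\, x_i}{1 + e^{y_i w^\top x_i}}.
\end{aligned}
$$

For part (c), a sketch of the usual argument: if the data are linearly separable, there is some $\bar w$ with $y_i \bar w^\top x_i > 0$ for every $i$. Then

$$
\bar w^\top \nabla f(w) = -\sum_{i=1}^{n} \frac{y_i\, \bar w^\top x_i}{1 + e^{y_i w^\top x_i}} < 0 \quad \text{for every } w,
$$

so $\nabla f(w) \neq 0$ everywhere and $f$ has no minimizer. Meanwhile $f(c\bar w) \to 0$ as $c \to \infty$, while $f(w) > 0$ for every finite $w$, so the infimum $0$ is approached but never attained. Since $f$ is convex and $L$-smooth with $L = \tfrac14 \sum_i \|x_i\|^2$, GD with step size $\eta \le 1/L$ satisfies $f(w_t) \le f(u) + \|w_0 - u\|^2 / (2\eta t)$ for any comparison point $u$; taking $u = c\bar w$ with large $c$ shows $f(w_t) \to 0$. That requires every margin $y_i w_t^\top x_i \to \infty$, and because $y_i w_t^\top x_i \le \|w_t\|\,\|x_i\|$, it forces $\|w_t\| \to \infty$: at least one coefficient grows without bound.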

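Since the pseudocode for part (b) is cut off, here is a minimal Python/NumPy sketch of plain gradient descent on $f(w)$. It assumes labels in $\{-1, +1\}$, a fixed step size, and no intercept term; the function names (`logistic_loss`, `logistic_grad`, `gradient_descent`), the step size, and the toy data are illustrative choices, not the original answer's. Running it on a separable toy set also illustrates part (c): $f(w)$ heads toward 0 while $w$ keeps growing.

```python
import numpy as np


def logistic_loss(w, X, y):
    """f(w) = sum_i log(1 + exp(-y_i * w^T x_i)), assuming labels y_i in {-1, +1}."""
    margins = y * (X @ w)
    # logaddexp(0, -m) = log(1 + exp(-m)), computed without overflow
    return np.sum(np.logaddexp(0.0, -margins))


def logistic_grad(w, X, y):
    """grad f(w) = -sum_i y_i x_i / (1 + exp(y_i * w^T x_i))."""
    margins = y * (X @ w)
    # exp(-logaddexp(0, m)) = 1 / (1 + exp(m)), also overflow-safe
    coeffs = np.exp(-np.logaddexp(0.0, margins))
    return -X.T @ (y * coeffs)


def gradient_descent(X, y, step_size=0.1, num_iters=1000, w0=None):
    """Plain GD: w <- w - step_size * grad f(w). Returns the final w and the loss history."""
    w = np.zeros(X.shape[1]) if w0 is None else np.array(w0, dtype=float)
    losses = [logistic_loss(w, X, y)]
    for _ in range(num_iters):
        w = w - step_size * logistic_grad(w, X, y)
        losses.append(logistic_loss(w, X, y))
    return w, losses


if __name__ == "__main__":
    # Toy linearly separable data (hypothetical): the sign of the feature is the label.
    X = np.array([[1.0], [2.0], [-1.0], [-2.0]])
    y = np.array([1.0, 1.0, -1.0, -1.0])
    for iters in (10, 100, 1000, 10000):
        w, losses = gradient_descent(X, y, step_size=0.1, num_iters=iters)
        # w keeps increasing with more iterations while f(w) keeps shrinking toward 0
        print(f"{iters:>6} iterations: w = {w[0]:.3f}, f(w) = {losses[-1]:.2e}")
```

For this toy set $L = \tfrac14 \sum_i \|x_i\|^2 = 2.5$, so the step size 0.1 is below $1/L$ and every step decreases $f$. The `np.logaddexp` form is used instead of `np.log(1 + np.exp(...))` so that large margins do not overflow.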