Question:

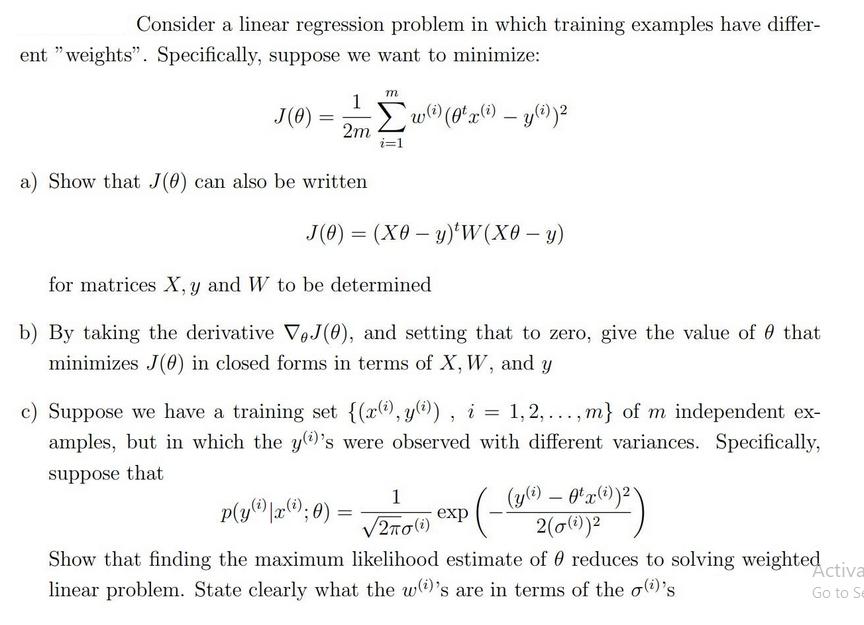

Consider a linear regression problem in which training examples have different "weights". Specifically, suppose we want to minimize

$$J(\theta) = \frac{1}{2}\sum_{i=1}^{m} w^{(i)}\left(\theta^T x^{(i)} - y^{(i)}\right)^2.$$

a) Show that $J(\theta)$ can also be written as

$$J(\theta) = (X\theta - y)^T W (X\theta - y)$$

for matrices $X$, $y$, and $W$ to be determined.

b) By taking the derivative $\nabla_\theta J(\theta)$ and setting it to zero, give the value of $\theta$ that minimizes $J(\theta)$ in closed form in terms of $X$, $W$, and $y$.

c) Suppose we have a training set $\{(x^{(i)}, y^{(i)});\ i = 1, 2, \ldots, m\}$ of $m$ independent examples, but in which the $y^{(i)}$'s were observed with different variances. Specifically, suppose that

$$p\left(y^{(i)} \mid x^{(i)}; \theta\right) = \frac{1}{\sqrt{2\pi}\,\sigma^{(i)}} \exp\left(-\frac{\left(y^{(i)} - \theta^T x^{(i)}\right)^2}{2\left(\sigma^{(i)}\right)^2}\right).$$

Show that finding the maximum likelihood estimate of $\theta$ reduces to solving a weighted linear regression problem. State clearly what the $w^{(i)}$'s are in terms of the $\sigma^{(i)}$'s.

Step by Step Solution


To answer the question outlined in the provided image, let's take it step by step.

a) To show that $J(\theta)$ can also be written as $J(\theta) = (X\theta - y)^T W (X\theta - y)$, we have to understand the dimensions of the mat...
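A sketch of the usual argument for part a), assuming (as is conventional, though the truncated answer does not say so) that $X \in \mathbb{R}^{m \times n}$ stacks the inputs $(x^{(i)})^T$ as its rows, $y = (y^{(1)}, \ldots, y^{(m)})^T$, and the factor $\frac{1}{2}$ is absorbed into a diagonal $W$:

$$
\begin{aligned}
W &= \tfrac{1}{2}\operatorname{diag}\left(w^{(1)}, \ldots, w^{(m)}\right), \qquad (X\theta - y)_i = \theta^T x^{(i)} - y^{(i)}, \\
(X\theta - y)^T W (X\theta - y) &= \sum_{i=1}^{m} W_{ii}\,(X\theta - y)_i^2
= \frac{1}{2}\sum_{i=1}^{m} w^{(i)}\left(\theta^T x^{(i)} - y^{(i)}\right)^2 = J(\theta).
\end{aligned}
$$

Here $X\theta - y$ is $m \times 1$ and $W$ is $m \times m$, so the product is a scalar, as $J(\theta)$ must be.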
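For part b), a sketch of the gradient computation, with $X$, $y$, and the diagonal $W$ as in part a). Since $W$ is symmetric, $y^T W X\theta = \theta^T X^T W y$, so:

$$
\begin{aligned}
J(\theta) &= \theta^T X^T W X \theta - 2\,\theta^T X^T W y + y^T W y, \\
\nabla_\theta J(\theta) &= 2 X^T W X \theta - 2 X^T W y = 0
\quad\Longrightarrow\quad \theta = \left(X^T W X\right)^{-1} X^T W y .
\end{aligned}
$$

The inverse exists when $X$ has full column rank and every $w^{(i)} > 0$. A constant factor in $W$ (such as $\frac{1}{2}$) cancels, so the same formula holds with $W = \operatorname{diag}(w^{(1)}, \ldots, w^{(m)})$.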
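For part c), a sketch of the likelihood argument: the log-likelihood of the $m$ independent examples under the density given in the question is

$$
\ell(\theta) = \sum_{i=1}^{m} \log p\left(y^{(i)} \mid x^{(i)}; \theta\right)
= -\sum_{i=1}^{m} \log\left(\sqrt{2\pi}\,\sigma^{(i)}\right)
- \frac{1}{2}\sum_{i=1}^{m} \frac{\left(y^{(i)} - \theta^T x^{(i)}\right)^2}{\left(\sigma^{(i)}\right)^2}.
$$

The first sum does not depend on $\theta$, so maximizing $\ell(\theta)$ is the same as minimizing

$$\frac{1}{2}\sum_{i=1}^{m} w^{(i)}\left(\theta^T x^{(i)} - y^{(i)}\right)^2 \quad\text{with}\quad w^{(i)} = \frac{1}{\left(\sigma^{(i)}\right)^2},$$

which is exactly the weighted cost $J(\theta)$. Examples observed with larger variance receive smaller weight.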
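A numerical sanity check of all three parts, written as a sketch assuming NumPy. The helper names (`cost_as_sum`, `cost_as_matrix`, `weighted_least_squares`, `log_likelihood`) are hypothetical, and the data sizes, `theta_true`, and noise levels `sigma` are made-up synthetic values. It uses $W = \frac{1}{2}\operatorname{diag}(w)$ for the matrix form and $w^{(i)} = 1/(\sigma^{(i)})^2$ for the likelihood connection:

```python
import numpy as np

# Hypothetical helper names; only the formulas come from the question.


def cost_as_sum(theta, X, y, w):
    """J(theta) = 1/2 * sum_i w_i * (theta^T x_i - y_i)^2, evaluated term by term."""
    return 0.5 * sum(w_i * (x_i @ theta - y_i) ** 2 for x_i, y_i, w_i in zip(X, y, w))


def cost_as_matrix(theta, X, y, w):
    """The same cost as (X theta - y)^T W (X theta - y) with W = diag(w) / 2."""
    W = 0.5 * np.diag(w)
    r = X @ theta - y
    return r @ W @ r


def weighted_least_squares(X, y, w):
    """theta = (X^T W X)^{-1} X^T W y; the 1/2 in W cancels, so diag(w) is used.

    np.linalg.solve solves the normal equations without forming an inverse.
    """
    Xw = X.T * w  # broadcasting: equals X^T diag(w) without an m x m matrix
    return np.linalg.solve(Xw @ X, Xw @ y)


def log_likelihood(theta, X, y, sigma):
    """sum_i log p(y_i | x_i; theta) for independent y_i ~ N(theta^T x_i, sigma_i^2)."""
    r = y - X @ theta
    return np.sum(-np.log(np.sqrt(2 * np.pi) * sigma) - r**2 / (2 * sigma**2))


# Synthetic data: sizes, true theta and noise levels are arbitrary assumptions.
rng = np.random.default_rng(0)
m, n = 50, 3
X = rng.normal(size=(m, n))
theta_true = np.array([1.0, -2.0, 0.5])
sigma = rng.uniform(0.1, 2.0, size=m)
y = X @ theta_true + sigma * rng.normal(size=m)

w = 1.0 / sigma**2  # part (c): w_i = 1 / sigma_i^2
theta_hat = weighted_least_squares(X, y, w)

# Part (a): the sum form and the matrix form of J agree at an arbitrary theta.
theta_any = rng.normal(size=n)
same_cost = np.isclose(cost_as_sum(theta_any, X, y, w), cost_as_matrix(theta_any, X, y, w))

# Part (b): grad J = 2 X^T W (X theta - y) = X^T diag(w) (X theta - y) vanishes at theta_hat.
grad = (X.T * w) @ (X @ theta_hat - y)

# Part (c): theta_hat has higher log-likelihood than nearby parameter vectors.
ll_hat = log_likelihood(theta_hat, X, y, sigma)
ll_nearby = [log_likelihood(theta_hat + 1e-3 * d, X, y, sigma) for d in rng.normal(size=(5, n))]

print(same_cost, np.allclose(grad, 0.0, atol=1e-8), all(ll_hat > v for v in ll_nearby))
```

Solving the normal equations with `np.linalg.solve` is generally preferred over computing $(X^T W X)^{-1}$ explicitly, and scaling `X.T` by `w` through broadcasting applies the weights without building the $m \times m$ matrix $W$.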
