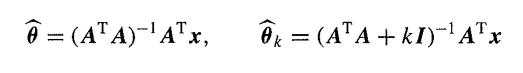

Question:

Writing

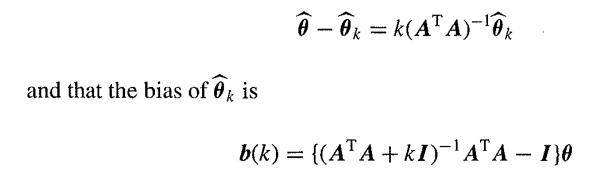

for the least-squares and ridge regression estimators for regression coefficients θ, show that

while its variance-covariance matrix is

Deduce expressions for the sum /![]() of the squares of the biases and for the sum

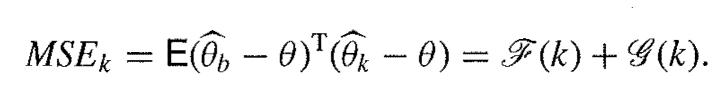

of the squares of the biases and for the sum ![]() of the variances of the regression coefficients, and hence show that the mean square error is

of the variances of the regression coefficients, and hence show that the mean square error is

Assuming that![]() is continuous and monotonic decreasing with

is continuous and monotonic decreasing with![]() (0) = 0 and that

(0) = 0 and that ![]() is continuous and monotonic increasing with

is continuous and monotonic increasing with ![]() =

= ![]()

![]() '(k) = 0, deduce that there always exists a k such that MSEk 0 (Theobald, 1974).

'(k) = 0, deduce that there always exists a k such that MSEk 0 (Theobald, 1974).
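The final claim can be checked numerically. The following is a minimal sketch, not the book's solution. It assumes the usual linear model with uncorrelated errors of common variance `phi`, and it works in the canonical form obtained by diagonalizing AᵀA. In that form the sum of the variances of the ridge coefficients is `phi * sum(lam / (lam + k)**2)` and the sum of the squared biases is `k**2 * sum(a**2 / (lam + k)**2)`. The names `ridge_mse_terms`, `eigvals`, `alpha` and `phi`, and the numbers used, are illustrative choices that do not come from the question.

```python
def ridge_mse_terms(eigvals, alpha, phi, k):
    """Return (sum of variances, sum of squared biases) for ridge parameter k.

    eigvals: eigenvalues of A^T A (assumed strictly positive)
    alpha:   true coefficients expressed in the eigenvector basis of A^T A
    phi:     assumed common error variance
    """
    variance_sum = phi * sum(lam / (lam + k) ** 2 for lam in eigvals)
    bias_sq_sum = k ** 2 * sum(
        a ** 2 / (lam + k) ** 2 for lam, a in zip(eigvals, alpha)
    )
    return variance_sum, bias_sq_sum


def mse(eigvals, alpha, phi, k):
    """Mean square error: sum of variances plus sum of squared biases."""
    variance_sum, bias_sq_sum = ridge_mse_terms(eigvals, alpha, phi, k)
    return variance_sum + bias_sq_sum


# Hypothetical example values, chosen only for illustration.
eigvals = [5.0, 1.0, 0.1]
alpha = [1.0, -2.0, 0.5]
phi = 1.0

# k = 0 recovers least squares: no bias, variance phi * sum(1 / lam).
mse_0 = mse(eigvals, alpha, phi, 0.0)

# Search a grid of strictly positive k for the smallest mean square error.
grid = [i / 1000 for i in range(1, 501)]
best_k = min(grid, key=lambda k: mse(eigvals, alpha, phi, k))
best_mse = mse(eigvals, alpha, phi, best_k)

print(f"MSE_0 = {mse_0:.4f}, best k = {best_k:.3f}, MSE_k = {best_mse:.4f}")
```

In this sketch the variance term falls as soon as k leaves zero, while the squared-bias term starts at zero with zero slope, so some small positive k gives a lower mean square error than least squares. That is the behaviour the question asks you to deduce.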
