A discrete-time Markov chain is a process with a state variable taking values in a discrete set.

Question:

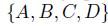

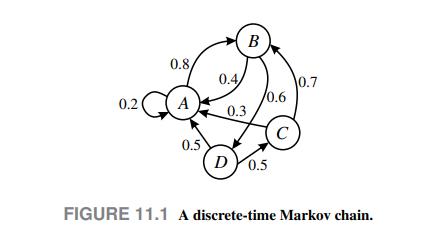

A discrete-time Markov chain is a process with a state variable taking values in a discrete set. The discrete state space may consist of an infinite yet countable set, such as the set of integers, or a finite set, such as the credit ratings of a bond. As we shall see, we may also consider a continuous-state Markov chain. The name "chain" is related to the nature of the state space. In fact, we may represent the process by a graph, as shown in Fig. 11.1. Here, the state space is the set

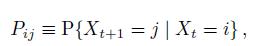


Nodes correspond to states, and directed arcs represent possible transitions, labeled by the corresponding probabilities. For instance, if we are in state C now, at the next step we will be in state B with probability 0.7, or in state A with probability 0.3. Note that transition probabilities depend only on the current state, not on the whole past history. Thus, we may describe the chain in terms of the conditional transition probabilities,

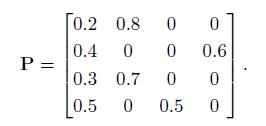

which, in the case of a finite state space of cardinality n, may be collected into the n × n single-step transition probability matrix.

For the chain in Fig. 11.1,

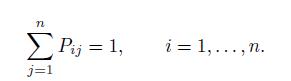
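The correspondence between the graph and the matrix can be sketched in Python: each labeled arc (from, to, probability) fills one matrix entry, with the current state indexing the row and the next state indexing the column, and sampling the next state uses only the row of the current state. This is a minimal sketch under stated assumptions: the state space is taken to be {A, B, C} in that order, only the two arcs leaving C quoted in the text are included (the full matrix of Fig. 11.1 is not reproduced here), and the helper names `arcs_to_matrix` and `next_state` are hypothetical.

```python
import random


def arcs_to_matrix(states, arcs):
    """Build a transition matrix from labeled arcs (from_state, to_state, prob).

    Row index = current state, column index = next state; missing arcs are 0.
    """
    index = {s: k for k, s in enumerate(states)}
    n = len(states)
    P = [[0.0] * n for _ in range(n)]
    for src, dst, p in arcs:
        P[index[src]][index[dst]] = p
    return P


def next_state(states, P, current, rng):
    """Sample the next state using only the row of the current state."""
    row = P[states.index(current)]
    return rng.choices(states, weights=row, k=1)[0]


# Assumed state ordering; only the arcs leaving C are taken from the text.
states = ["A", "B", "C"]
arcs = [("C", "B", 0.7), ("C", "A", 0.3)]
P = arcs_to_matrix(states, arcs)
print(P[2])  # row of state C: [0.3, 0.7, 0.0]

# Repeated one-step transitions from C; the share of B should be near 0.7.
rng = random.Random(42)
draws = [next_state(states, P, "C", rng) for _ in range(10_000)]
print(draws.count("B") / len(draws))
```

Rows A and B are left at zero here because their arcs are not given in the text, so the sketch only samples transitions out of C.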

Note that the matrix need not be symmetric, and that the current state is associated with a matrix row, whereas the next state is associated with a column. On the diagonal, we have the probability of staying in the current state. After a transition, we must land somewhere within the state space. Therefore, the entries in each and every row add up to 1.
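The properties just listed can be checked mechanically. The following is a minimal Python sketch, assuming the states are ordered (A, B, C): it tests that a candidate matrix is square, nonnegative, and has every row summing to 1 (up to a floating-point tolerance), and it illustrates that such a matrix need not be symmetric and that its diagonal holds the probabilities of staying put. Only row C comes from the text; rows A and B are hypothetical values chosen for illustration and are not those of Fig. 11.1. The function names `is_stochastic` and `is_symmetric` are likewise hypothetical.

```python
import math


def is_stochastic(P, tol=1e-9):
    """Return True if P is square, nonnegative, and every row sums to 1."""
    n = len(P)
    for row in P:
        if len(row) != n or any(p < 0 for p in row):
            return False
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            return False
    return True


def is_symmetric(P):
    """Return True if P[i][j] == P[j][i] for all i, j."""
    n = len(P)
    return all(P[i][j] == P[j][i] for i in range(n) for j in range(n))


# States ordered (A, B, C). Rows A and B are HYPOTHETICAL illustration values.
P = [
    [0.5, 0.5, 0.0],  # hypothetical
    [0.2, 0.6, 0.2],  # hypothetical
    [0.3, 0.7, 0.0],  # from the text: C -> A w.p. 0.3, C -> B w.p. 0.7
]
print(is_stochastic(P))  # True
print(is_symmetric(P))   # False: P[0][1] = 0.5 but P[1][0] = 0.2
print([P[i][i] for i in range(3)])  # staying probabilities: [0.5, 0.6, 0.0]

# Breaking one row sum makes the matrix invalid.
bad = [row[:] for row in P]
bad[1][1] = 0.5  # row B now sums to 0.9
print(is_stochastic(bad))  # False
```

A tolerance is used instead of exact equality because row sums computed in floating point may differ from 1 by rounding error.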

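[Fig. 11.1: graph representation of the Markov chain; nodes are states, directed arcs are labeled with transition probabilities.]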


*An Introduction to Financial Markets: A Quantitative Approach*, 1st Edition, Paolo Brandimarte. ISBN: 9781118014776