Question:

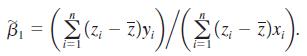

(i) Consider the simple regression model $y = \beta_0 + \beta_1 x + u$ under the first four Gauss-Markov assumptions. For some function $g(x)$, for example $g(x) = x^2$ or $g(x) = \log(1 + x^2)$, define $z_i = g(x_i)$. Define a slope estimator as

$$\tilde{\beta}_1 = \frac{\sum_{i=1}^{n} (z_i - \bar{z})\, y_i}{\sum_{i=1}^{n} (z_i - \bar{z})\, x_i}.$$

Show that $\tilde{\beta}_1$ is linear and unbiased. Remember, because $E(u \mid x) = 0$, you can treat both $x_i$ and $z_i$ as nonrandom in your derivation.

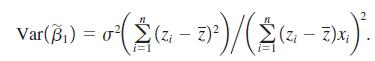
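One way to carry out the derivation for part (i) is sketched below. The shorthand $s_{zx} = \sum_{i=1}^{n} (z_i - \bar{z})x_i$ and the bold $\mathbf{x}$ (all sample values of $x$) are labels introduced only for this sketch, and $s_{zx}$ is assumed nonzero so that the estimator is defined.

$$
\begin{aligned}
\tilde{\beta}_1 &= \sum_{i=1}^{n} w_i y_i, \qquad w_i = \frac{z_i - \bar{z}}{s_{zx}}, \\
\sum_{i=1}^{n} (z_i - \bar{z}) y_i &= \beta_0 \sum_{i=1}^{n} (z_i - \bar{z}) + \beta_1 \sum_{i=1}^{n} (z_i - \bar{z}) x_i + \sum_{i=1}^{n} (z_i - \bar{z}) u_i = \beta_1 s_{zx} + \sum_{i=1}^{n} (z_i - \bar{z}) u_i, \\
\tilde{\beta}_1 &= \beta_1 + \frac{\sum_{i=1}^{n} (z_i - \bar{z}) u_i}{s_{zx}}, \\
E(\tilde{\beta}_1 \mid \mathbf{x}) &= \beta_1 + \frac{\sum_{i=1}^{n} (z_i - \bar{z})\, E(u_i \mid \mathbf{x})}{s_{zx}} = \beta_1.
\end{aligned}
$$

The weights $w_i$ depend only on the $x_i$ (because $z_i = g(x_i)$), so $\tilde{\beta}_1$ is linear in the $y_i$; the second line uses $\sum_{i=1}^{n} (z_i - \bar{z}) = 0$ to eliminate $\beta_0$.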

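(ii) Add the homoskedasticity assumption, MLR.5. Show that

$$\operatorname{Var}(\tilde{\beta}_1) = \frac{\sigma^2 \sum_{i=1}^{n} (z_i - \bar{z})^2}{\left(\sum_{i=1}^{n} (z_i - \bar{z})\, x_i\right)^2}.$$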

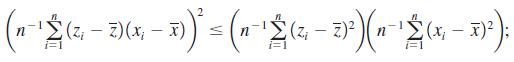
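For part (ii), a sketch of the variance calculation, conditioning on the sample values of $x$ and again writing $s_{zx} = \sum_{i=1}^{n} (z_i - \bar{z})x_i$ as shorthand introduced here. Substituting $y_i = \beta_0 + \beta_1 x_i + u_i$ and using $\sum_{i=1}^{n} (z_i - \bar{z}) = 0$ gives $\tilde{\beta}_1 = \beta_1 + s_{zx}^{-1}\sum_{i=1}^{n} (z_i - \bar{z}) u_i$. Random sampling makes the $u_i$ uncorrelated across $i$, and MLR.5 gives $\operatorname{Var}(u_i \mid x) = \sigma^2$, so

$$
\operatorname{Var}(\tilde{\beta}_1) = \frac{1}{s_{zx}^2} \sum_{i=1}^{n} (z_i - \bar{z})^2 \operatorname{Var}(u_i) = \frac{\sigma^2 \sum_{i=1}^{n} (z_i - \bar{z})^2}{\left(\sum_{i=1}^{n} (z_i - \bar{z})\, x_i\right)^2}.
$$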

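(iii) Show directly that, under the Gauss-Markov assumptions, $\operatorname{Var}(\hat{\beta}_1) \le \operatorname{Var}(\tilde{\beta}_1)$, where $\hat{\beta}_1$ is the OLS estimator. The Cauchy-Schwarz inequality in Math Refresher B implies that

$$\left(n^{-1} \sum_{i=1}^{n} (z_i - \bar{z})(x_i - \bar{x})\right)^2 \le \left(n^{-1} \sum_{i=1}^{n} (z_i - \bar{z})^2\right)\left(n^{-1} \sum_{i=1}^{n} (x_i - \bar{x})^2\right);$$

notice that we can drop $\bar{x}$ from the sample covariance.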
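A sketch of the argument for part (iii). First, because $\sum_{i=1}^{n} (z_i - \bar{z}) = 0$,

$$\sum_{i=1}^{n} (z_i - \bar{z})(x_i - \bar{x}) = \sum_{i=1}^{n} (z_i - \bar{z}) x_i - \bar{x} \sum_{i=1}^{n} (z_i - \bar{z}) = \sum_{i=1}^{n} (z_i - \bar{z}) x_i,$$

which is why $\bar{x}$ can be dropped. Multiplying the Cauchy-Schwarz inequality by $n^2$, writing $\mathrm{SST}_x = \sum_{i=1}^{n} (x_i - \bar{x})^2$ (the standard notation), and dividing both sides by the positive quantity $\mathrm{SST}_x \left(\sum_{i=1}^{n} (z_i - \bar{z}) x_i\right)^2$:

$$
\begin{aligned}
\left(\sum_{i=1}^{n} (z_i - \bar{z}) x_i\right)^2 &\le \left(\sum_{i=1}^{n} (z_i - \bar{z})^2\right) \mathrm{SST}_x \\
\Longrightarrow\quad \frac{\sigma^2}{\mathrm{SST}_x} &\le \frac{\sigma^2 \sum_{i=1}^{n} (z_i - \bar{z})^2}{\left(\sum_{i=1}^{n} (z_i - \bar{z}) x_i\right)^2}.
\end{aligned}
$$

The left side is the OLS variance $\operatorname{Var}(\hat{\beta}_1) = \sigma^2/\mathrm{SST}_x$ and the right side is the formula from part (ii), so $\operatorname{Var}(\hat{\beta}_1) \le \operatorname{Var}(\tilde{\beta}_1)$. Equality holds only when $z_i - \bar{z}$ is proportional to $x_i - \bar{x}$, for example when $g$ is linear.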

Step by Step Solution


(i) For notational simplicity, define …; this is not quite the sample covariance between $z$ and $x$ because w...
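The results of parts (i) to (iii) can also be checked by simulation. The sketch below is only illustrative: the parameter values ($\beta_0 = 1$, $\beta_1 = 2$, $\sigma = 1$, $n = 50$), the choice $g(x) = x^2$, the Uniform(0, 3) design for $x$, the normal errors, and the function name `simulate` are all assumptions made here, not part of the problem. The $x_i$ are drawn once and held fixed across replications, mirroring the instruction to treat $x_i$ and $z_i$ as nonrandom.

```python
import numpy as np


def simulate(n=50, reps=20_000, beta0=1.0, beta1=2.0, sigma=1.0, seed=0):
    """Monte Carlo check of parts (i)-(iii); all parameter values are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 3.0, size=n)   # regressor values, drawn once and held fixed
    z = x**2                            # z_i = g(x_i) with the example choice g(x) = x^2
    zd = z - z.mean()                   # z_i - zbar
    xd = x - x.mean()                   # x_i - xbar
    s_zx = np.sum(zd * x)               # denominator of the alternative estimator
    sst_x = np.sum(xd**2)               # total sum of squares of x

    # Homoskedastic normal errors with mean zero; each row is one simulated sample.
    u = rng.normal(0.0, sigma, size=(reps, n))
    y = beta0 + beta1 * x + u

    beta_tilde = (y @ zd) / s_zx        # sum (z_i - zbar) y_i / sum (z_i - zbar) x_i
    beta_hat = (y @ xd) / sst_x         # OLS slope: sum (x_i - xbar) y_i / SST_x

    return {
        "mean_tilde": beta_tilde.mean(),
        "mean_hat": beta_hat.mean(),
        "var_tilde_sim": beta_tilde.var(),
        "var_tilde_formula": sigma**2 * np.sum(zd**2) / s_zx**2,
        "var_hat_sim": beta_hat.var(),
        "var_hat_formula": sigma**2 / sst_x,
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name:>18}: {value:.5f}")
```

Both simulated means should sit close to $\beta_1 = 2$, each simulated variance should be close to its formula, and `var_hat_formula` should not exceed `var_tilde_formula`. The exact digits depend on the seed, so none are quoted here.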
