
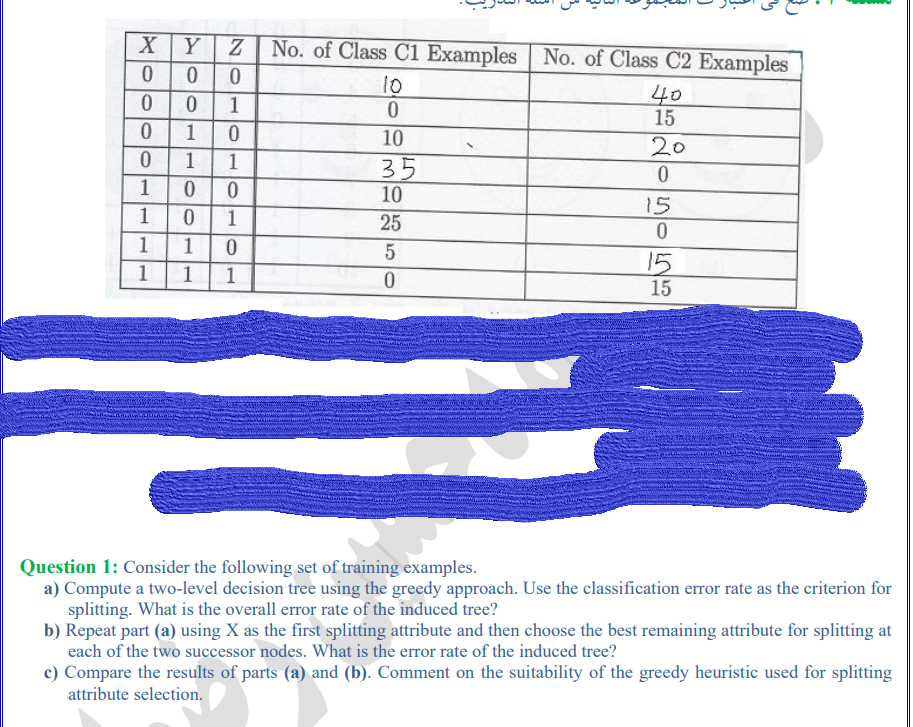

Question 1: Consider the following set of training examples.

| X | Y | Z | No. of Class C1 Examples | No. of Class C2 Examples |
|---|---|---|---|---|
| 0 | 0 | 0 | 10 | 40 |
| 0 | 0 | 1 | 0 | 15 |
| 0 | 1 | 0 | 10 | 20 |
| 0 | 1 | 1 | 35 | 0 |
| 1 | 0 | 0 | 10 | 15 |
| 1 | 0 | 1 | 25 | 0 |
| 1 | 1 | 0 | 5 | 15 |
| 1 | 1 | 1 | 0 | 15 |

a) Compute a two-level decision tree using the greedy approach. Use the classification error rate as the criterion for splitting. What is the overall error rate of the induced tree?

b) Repeat part (a) using X as the first splitting attribute, and then choose the best remaining attribute for splitting at each of the two successor nodes. What is the error rate of the induced tree?

c) Compare the results of parts (a) and (b). Comment on the suitability of the greedy heuristic used for splitting attribute selection.
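A minimal Python sketch for checking parts (a) and (b) by brute force. It assumes the three binary attributes are named X, Y and Z and that the flattened counts pair up as (C1, C2) per row, in the order the rows are listed. Each leaf predicts its majority class, and a split's cost is the total number of misclassified training examples in its children. The helper names (`split_errors`, `best_attr`, `two_level_tree`) are illustrative, not from the source.

```python
# Training data: (X, Y, Z) -> (number of C1 examples, number of C2 examples).
# Assumption: the counts pair up row by row in the order the rows are listed.
DATA = {
    (0, 0, 0): (10, 40),
    (0, 0, 1): (0, 15),
    (0, 1, 0): (10, 20),
    (0, 1, 1): (35, 0),
    (1, 0, 0): (10, 15),
    (1, 0, 1): (25, 0),
    (1, 1, 0): (5, 15),
    (1, 1, 1): (0, 15),
}
ATTRS = ("X", "Y", "Z")
TOTAL = sum(c1 + c2 for c1, c2 in DATA.values())


def errors(rows):
    """Misclassified examples when a leaf over `rows` predicts its majority class."""
    c1 = sum(DATA[r][0] for r in rows)
    c2 = sum(DATA[r][1] for r in rows)
    return min(c1, c2)


def split(rows, attr):
    """Partition rows on attribute index `attr` into the value-0 and value-1 groups."""
    return [[r for r in rows if r[attr] == v] for v in (0, 1)]


def split_errors(rows, attr):
    """Total leaf errors after a single split of `rows` on `attr`."""
    return sum(errors(part) for part in split(rows, attr))


def best_attr(rows, candidates):
    """Greedy choice: the candidate with the fewest split errors (the first one wins ties)."""
    return min(candidates, key=lambda a: split_errors(rows, a))


def two_level_tree(first=None):
    """Build a two-level tree, choosing the root greedily unless `first` is given.

    Returns (root attribute, attribute used at each child, total training errors).
    """
    rows = list(DATA)
    root = best_attr(rows, range(3)) if first is None else first
    rest = [a for a in range(3) if a != root]
    children, total = [], 0
    for part in split(rows, root):
        child = best_attr(part, rest)
        children.append(ATTRS[child])
        total += split_errors(part, child)
    return ATTRS[root], children, total


if __name__ == "__main__":
    rows = list(DATA)
    for a, name in enumerate(ATTRS):
        print(f"root split on {name}: {split_errors(rows, a)}/{TOTAL} errors")
    for label, first in (("(a) greedy", None), ("(b) X first", 0)):
        root, children, errs = two_level_tree(first)
        print(f"{label}: root {root}, children {children}, "
              f"error rate {errs}/{TOTAL} = {errs / TOTAL:.4f}")
```

Under the row-wise pairing assumption, the single splits on X, Y and Z leave 95, 95 and 65 errors out of 215. The greedy tree in (a) therefore splits on Z first and ends at 65/215 ≈ 0.302, because neither X nor Y reduces the error further under either Z branch (ties go to the first attribute). Forcing X first, as in (b), and then splitting on Y in both branches gives 50/215 ≈ 0.233. X is useless on its own but pays off in combination with Y, which is the kind of attribute interaction a one-step greedy heuristic can miss.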
