Question: 1. Best classification when your losses are asymmetric. Consider a two-class ({0,1}) classification problem. We saw in class that for 0-1 loss, the optimal classification

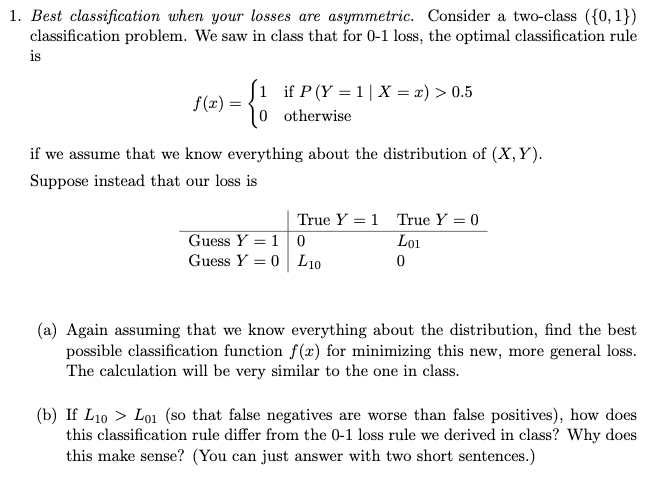

1. Best classification when your losses are asymmetric. Consider a two-class ({0,1}) classification problem. We saw in class that for 0-1 loss, the optimal classification rule is f(x) | if P(Y =1|X = x) > 0.5 0 otherwise if we assume that we know everything about the distribution of (X,Y). Suppose instead that our loss is True Y =1 True Y = 0 Guess Y=10 Loi Guess Y = 0 L10 0 (a) Again assuming that we know everything about the distribution, find the best possible classification function f(x) for minimizing this new, more general loss. The calculation will be very similar to the one in class. (b) If L10 > Loi (so that false negatives are worse than false positives), how does this classification rule differ from the 0-1 loss rule we derived in class? Why does this make sense? (You can just answer with two short sentences.) 1. Best classification when your losses are asymmetric. Consider a two-class ({0,1}) classification problem. We saw in class that for 0-1 loss, the optimal classification rule is f(x) | if P(Y =1|X = x) > 0.5 0 otherwise if we assume that we know everything about the distribution of (X,Y). Suppose instead that our loss is True Y =1 True Y = 0 Guess Y=10 Loi Guess Y = 0 L10 0 (a) Again assuming that we know everything about the distribution, find the best possible classification function f(x) for minimizing this new, more general loss. The calculation will be very similar to the one in class. (b) If L10 > Loi (so that false negatives are worse than false positives), how does this classification rule differ from the 0-1 loss rule we derived in class? Why does this make sense? (You can just answer with two short sentences.)

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts