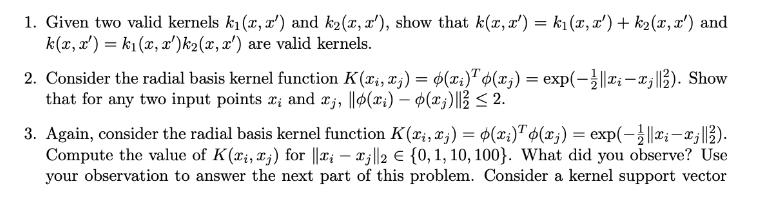

Question:

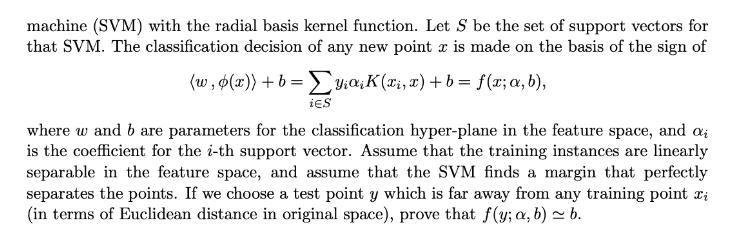

1. Given two valid kernels $k_1(x, x')$ and $k_2(x, x')$, show that $k(x, x') = k_1(x, x') + k_2(x, x')$ and $k(x, x') = k_1(x, x')\,k_2(x, x')$ are valid kernels.
2. Consider the radial basis kernel function $K(x_i, x_j) = \phi(x_i) \cdot \phi(x_j) = \exp(-\|x_i - x_j\|)$. Show that for any two input points $x_i$ and $x_j$, $\|\phi(x_i) - \phi(x_j)\|^2 \le 2$.
3. Again, consider the radial basis kernel function $K(x_i, x_j) = \phi(x_i) \cdot \phi(x_j) = \exp(-\|x_i - x_j\|)$. Compute the value of $K(x_i, x_j)$ for $\|x_i - x_j\|_2 \in \{0, 1, 10, 100\}$. What do you observe? Use your observation to answer the next part of this problem.

   Consider a kernel support vector machine (SVM) with the radial basis kernel function. Let $S$ be the set of support vectors for that SVM. The classification decision for any new point $x$ is made on the basis of the sign of

   $$\langle w, \phi(x) \rangle + b = \sum_{i \in S} y_i \alpha_i K(x_i, x) + b = f(x; \alpha, b),$$

   where $w$ and $b$ are the parameters of the classification hyperplane in the feature space, and $\alpha_i$ is the coefficient of the $i$-th support vector. Assume that the training instances are linearly separable in the feature space, and that the SVM finds a margin that perfectly separates the points. If we choose a test point $y$ that is far away from every training point $x_i$ (in terms of Euclidean distance in the original space), prove that $f(y; \alpha, b) \approx b$.
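The numerical parts of the question can be checked with a short script. What follows is only a sketch under stated assumptions: it uses the exponent $-\|x_i - x_j\|$ as it survives in the problem statement (if the intended kernel uses a squared or scaled norm, the decay is simply faster), and the support vectors, labels, coefficients, and bias are made-up illustrative values, not data from the question. It uses the identity $\|\phi(x_i) - \phi(x_j)\|^2 = K(x_i, x_i) + K(x_j, x_j) - 2K(x_i, x_j) = 2 - 2K(x_i, x_j)$, which can never exceed 2 because $K > 0$.

```python
import math


def rbf_kernel(xi, xj):
    """K(xi, xj) = exp(-||xi - xj||); exponent form assumed from the problem text."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xj)))
    return math.exp(-dist)


def feature_distance_sq(xi, xj):
    """||phi(xi) - phi(xj)||^2 expanded purely in terms of kernel evaluations."""
    return rbf_kernel(xi, xi) + rbf_kernel(xj, xj) - 2.0 * rbf_kernel(xi, xj)


def decision_value(x, support_vectors, labels, alphas, b):
    """f(x; alpha, b) = sum over support vectors of y_i * alpha_i * K(x_i, x), plus b."""
    total = sum(
        y * a * rbf_kernel(sv, x)
        for sv, y, a in zip(support_vectors, labels, alphas)
    )
    return total + b


# Part 3: kernel value as a function of the distance between the two points.
kernel_by_distance = {
    d: rbf_kernel((0.0,), (float(d),)) for d in (0, 1, 10, 100)
}
for d, k in kernel_by_distance.items():
    print(f"distance {d:>3}: K = {k:.3e}")

# Part 2: the squared feature-space distance stays at or below 2.
print("feature distance^2:", feature_distance_sq((0.0,), (3.0,)))

# Hypothetical SVM (illustrative values only; sum of alpha_i * y_i is 0).
support_vectors = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
labels = [1, -1, 1]
alphas = [0.7, 1.2, 0.5]
b = -0.3

# A test point far from every support vector: each kernel term vanishes.
far_point = (1000.0, 1000.0)
f_far = decision_value(far_point, support_vectors, labels, alphas, b)
print("f(far point) =", f_far, "with b =", b)
```

The kernel equals 1 at distance 0 and falls off exponentially (roughly 0.37 at distance 1 and about 4.5e-05 at distance 10), so for a point far from all support vectors every term in the sum is negligible and only $b$ remains.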
