Question: 1

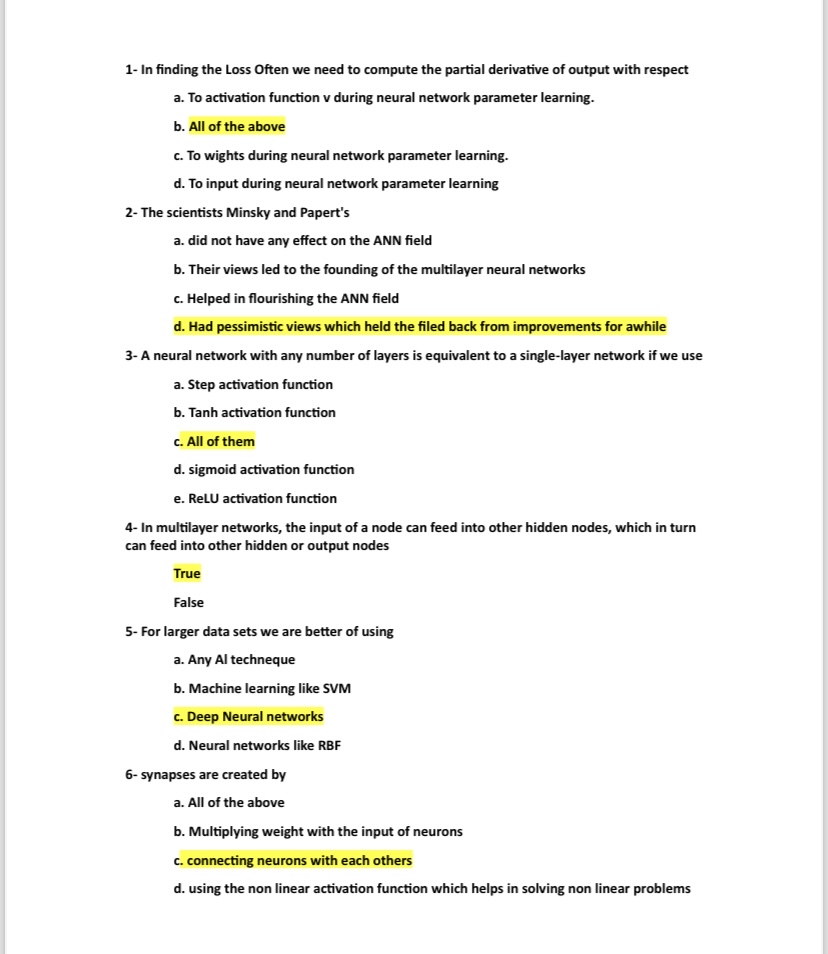

In finding the loss, we often need to compute the partial derivative of the output with respect

a To the activation function during neural network parameter learning.

b All of the above

c To the weights during neural network parameter learning.

d To the input during neural network parameter learning.
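The three kinds of partial derivative named in the options can be illustrated on a single neuron. This is a minimal sketch, assuming a sigmoid activation and differentiating the neuron's output directly (no loss function), as the question phrases it. "With respect to the activation function" is read here as the derivative with respect to the activation's input z. The function names are hypothetical. The chain-rule results are checked against central finite differences.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(w, x, b):
    """Output y = sigmoid(z) with pre-activation z = w . x + b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


def neuron_grads(w, x, b):
    """Chain-rule partial derivatives of the neuron output y."""
    y = neuron_output(w, x, b)
    dy_dz = y * (1.0 - y)             # w.r.t. the activation function's input z
    dy_dw = [dy_dz * xi for xi in x]  # w.r.t. each weight, since dz/dw_i = x_i
    dy_dx = [dy_dz * wi for wi in w]  # w.r.t. each input, since dz/dx_i = w_i
    return dy_dz, dy_dw, dy_dx


def numeric_grad(f, v, eps=1e-6):
    """Central finite differences of f at vector v, used only as a check."""
    grads = []
    for i in range(len(v)):
        up, down = list(v), list(v)
        up[i] += eps
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


w, x, b = [0.5, -0.3], [1.0, 2.0], 0.1
dy_dz, dy_dw, dy_dx = neuron_grads(w, x, b)
num_dw = numeric_grad(lambda v: neuron_output(v, x, b), w)
num_dx = numeric_grad(lambda v: neuron_output(w, v, b), x)
print("dy/dw analytic:", dy_dw, "numeric:", num_dw)
print("dy/dx analytic:", dy_dx, "numeric:", num_dx)
```

During training, the weight derivatives are the ones used to update parameters. The input derivatives are what backpropagation passes to the previous layer.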

The scientists Minsky and Papert

a Did not have any effect on the ANN field

b Their views led to the founding of multilayer neural networks

c Helped the ANN field flourish

d Had pessimistic views that held the field back from improvement for a while

A neural network with any number of layers is equivalent to a single-layer network if we use

a Step activation function

b Tanh activation function

c All of them

d Sigmoid activation function

e ReLU activation function
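The collapse into a single layer comes from composing linear maps. This is a minimal sketch, assuming for contrast an identity (linear) activation, which is not among the listed options. With it, two affine layers W2(W1 x + b1) + b2 equal one affine layer with W = W2 W1 and b = W2 b1 + b2. The sketch then swaps in ReLU to show that, for this input, the equivalence no longer holds. The weights and helper names are illustrative assumptions.

```python
def matmul(A, B):
    """Matrix product of A (m x n) and B (n x p), as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def vadd(u, v):
    return [a + b for a, b in zip(u, v)]


def two_layer(W1, b1, W2, b2, x, act):
    """Hidden layer with activation `act`, then a linear output layer."""
    h = [act(z) for z in vadd(matvec(W1, x), b1)]
    return vadd(matvec(W2, h), b2)


identity = lambda z: z
relu = lambda z: max(0.0, z)

W1, b1 = [[1.0, -2.0], [0.5, 1.5]], [0.2, -0.4]
W2, b2 = [[2.0, 1.0]], [0.3]

# Equivalent single layer for the identity activation.
W = matmul(W2, W1)
b = vadd(matvec(W2, b1), b2)

x = [1.0, 1.0]
deep_linear = two_layer(W1, b1, W2, b2, x, identity)
single = vadd(matvec(W, x), b)
deep_relu = two_layer(W1, b1, W2, b2, x, relu)
print("two linear layers:", deep_linear, "single layer:", single)
print("two layers with ReLU:", deep_relu)
```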

In multilayer networks, the input of a node can feed into other hidden nodes, which in turn can feed into other hidden or output nodes

True

False
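In a feedforward multilayer network, the value each node computes is passed on as an input to the nodes of the next layer, which can be hidden nodes or output nodes. This is a minimal sketch of that forward pass, assuming fully connected layers with sigmoid activations. The layer sizes and weights are arbitrary illustrative values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(layers, x):
    """layers: list of (W, b); W has one row of incoming weights per node."""
    activations = [x]
    for W, b in layers:
        prev = activations[-1]
        # Every value in `prev` feeds into every node of this layer.
        out = [sigmoid(sum(w * p for w, p in zip(row, prev)) + bi)
               for row, bi in zip(W, b)]
        activations.append(out)
    return activations


layers = [
    ([[0.1, 0.4], [-0.3, 0.2], [0.5, -0.5]], [0.0, 0.1, -0.1]),  # input (2) -> hidden 1 (3)
    ([[0.2, -0.1, 0.3], [0.4, 0.4, -0.2]], [0.05, -0.05]),       # hidden 1 (3) -> hidden 2 (2)
    ([[0.7, -0.6]], [0.0]),                                       # hidden 2 (2) -> output (1)
]
acts = forward(layers, [1.0, -1.0])
for i, a in enumerate(acts):
    print("layer", i, [round(v, 4) for v in a])
```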

For larger data sets, we are better off using

a Any AI technique

b Machine learning methods like SVM

c Deep neural networks

d Neural networks like RBF networks

Synapses are created by

a All of the above

b Multiplying the weight by the input of the neuron

c Connecting neurons with each other

d Using the nonlinear activation function, which helps in solving nonlinear problems
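In the usual artificial-neuron abstraction, a synapse is a weighted, directed connection from one neuron to another, and its weight scales the signal passing through it. This is a minimal sketch, assuming synapses are stored explicitly as (presynaptic, postsynaptic, weight) edges. The neuron names and weights are hypothetical. Each postsynaptic neuron's net input is the sum of weight times presynaptic output over its incoming synapses.

```python
from collections import defaultdict

# Hypothetical synapses: (presynaptic neuron, postsynaptic neuron, weight).
synapses = [
    ("x1", "h1", 0.8),
    ("x2", "h1", -0.5),
    ("x1", "h2", 0.3),
    ("x2", "h2", 0.9),
]


def net_inputs(synapses, outputs):
    """Sum weight * presynaptic output for every postsynaptic neuron."""
    totals = defaultdict(float)
    for pre, post, weight in synapses:
        totals[post] += weight * outputs[pre]
    return dict(totals)


outputs = {"x1": 1.0, "x2": 2.0}
result = net_inputs(synapses, outputs)
print(result)
```

An activation function would then be applied to each net input. The edge list itself only records the connections and their weights.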
