Question: 1. Least squares classification with regularization.

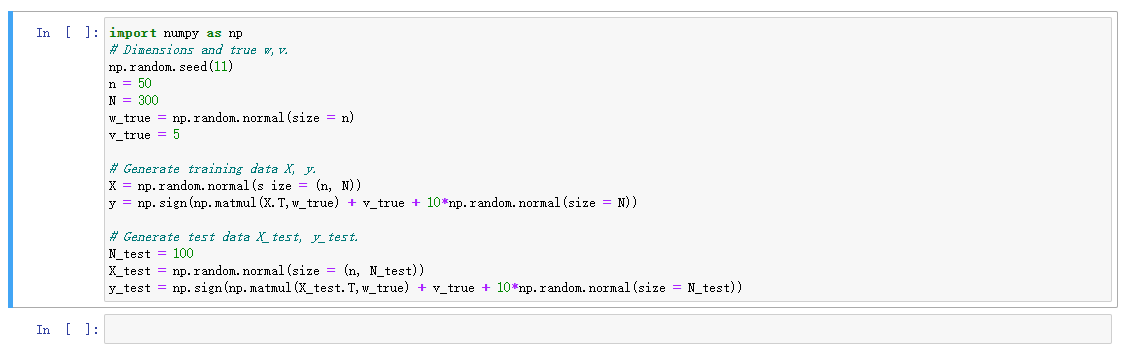

1. Least squares classification with regularization. The file lsq_classifier_data.ipynb contains feature $n$-vectors $x_1, \ldots, x_N$ and the associated binary labels $y_1, \ldots, y_N$, each of which is either $+1$ or $-1$. The feature vectors are stored as an $n \times N$ matrix $X$ with columns $x_1, \ldots, x_N$, and the labels are stored as an $N$-vector $y$. We will evaluate the error rate on the (training) data $X$, $y$ and (to check whether the model generalizes) on a test set $X^{\text{test}}$, $y^{\text{test}}$, also given in lsq_classifier_data.ipynb.

(a) (10 points) Least squares classifier. Find $\beta$, $v$ that minimize

$$\sum_{i=1}^{N} \left(x_i^T \beta + v - y_i\right)^2$$

on the training set. Our predictions are then $\hat{f}(x) = \operatorname{sign}(x^T \beta + v)$. Report the classification error on the training and test sets, that is, the fraction of examples where $\hat{f}(x_i) \neq y_i$. There is no need to report the $\beta$, $v$ values.

(b) (10 points) Regularized least squares classifier. Now we add regularization to improve the generalization ability of the classifier. Find $\beta$, $v$ that minimize

$$\sum_{i=1}^{N} \left(x_i^T \beta + v - y_i\right)^2 + \lambda \|\beta\|^2,$$

where $\lambda > 0$ is the regularization parameter, for a range of values of $\lambda$. Please use the following values for $\lambda$: $10^{-1}, 10^{0}, 10^{1}, 10^{2}, 10^{3}$. Suggest a reasonable choice of $\lambda$ and report the corresponding classification error on the training and test sets. Again, there is no need to report the $\beta$, $v$ values.

Hint: plot the training and test set errors against $\log_{10}(\lambda)$.
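One way to approach both parts is to treat the regularized problem as a single stacked least squares problem and solve it with NumPy. The sketch below is only an illustration under stated assumptions: the helper names `fit_ls_classifier` and `error_rate` are hypothetical, the weight vector is called `beta` and the regularization parameter `lam`, and the data is synthetic because the contents of the course data file are not reproduced here. The error rates it prints are therefore not the answers to the exercise. Setting `lam=0` gives the unregularized classifier of part (a).

```python
import numpy as np

def fit_ls_classifier(X, y, lam=0.0):
    """Minimize sum_i (x_i^T beta + v - y_i)^2 + lam * ||beta||^2.

    X is n x N with one example per column; y is an N-vector of +1/-1 labels.
    The offset v is not regularized.
    """
    n, N = X.shape
    # Data block: one row [x_i^T, 1] per example.
    A = np.hstack([X.T, np.ones((N, 1))])
    # Regularization block: sqrt(lam) * [I, 0] penalizes beta but not v.
    reg = np.sqrt(lam) * np.hstack([np.eye(n), np.zeros((n, 1))])
    A_stacked = np.vstack([A, reg])
    b_stacked = np.concatenate([y, np.zeros(n)])
    sol, *_ = np.linalg.lstsq(A_stacked, b_stacked, rcond=None)
    return sol[:n], sol[n]

def error_rate(X, y, beta, v):
    """Fraction of examples whose predicted sign differs from the label."""
    # A score of exactly 0 is mapped to +1 here (an arbitrary tie-break).
    y_hat = np.where(X.T @ beta + v >= 0, 1.0, -1.0)
    return float(np.mean(y_hat != y))

# Synthetic stand-in data (assumption: NOT the data from the course file).
rng = np.random.default_rng(0)
n, N = 50, 100
beta_true = rng.standard_normal(n)

def make_data(num):
    X = rng.standard_normal((n, num))
    scores = X.T @ beta_true + 2.0 * rng.standard_normal(num)
    return X, np.where(scores >= 0, 1.0, -1.0)

X_train, y_train = make_data(N)
X_test, y_test = make_data(N)

# Part (a): unregularized fit. Part (b): sweep over the suggested lambdas.
for lam in [0.0, 1e-1, 1e0, 1e1, 1e2, 1e3]:
    beta, v = fit_ls_classifier(X_train, y_train, lam)
    train_err = error_rate(X_train, y_train, beta, v)
    test_err = error_rate(X_test, y_test, beta, v)
    print(f"lambda={lam:8.1f}  train error={train_err:.3f}  test error={test_err:.3f}")
```

With the real data, the same loop would be run on the matrices loaded from the notebook, and the two error columns plotted against $\log_{10}$ of the regularization parameter as the hint suggests. A reasonable choice is typically a value where the test error is at or near its minimum.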

