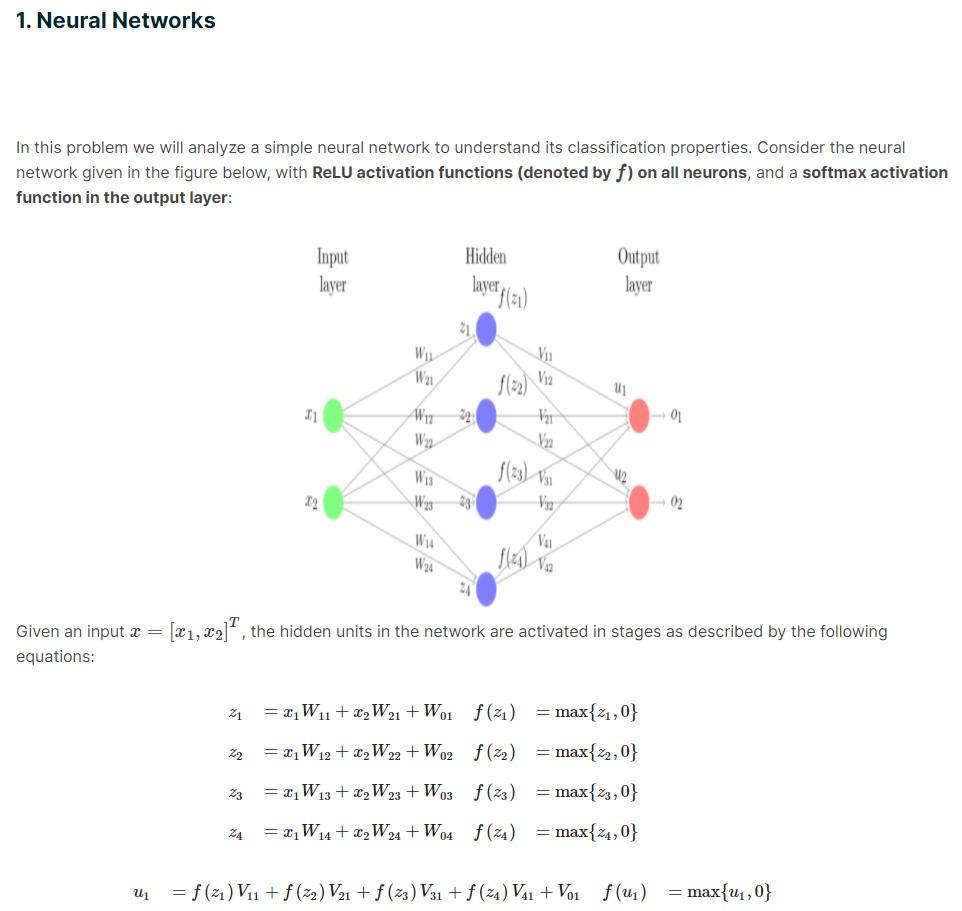

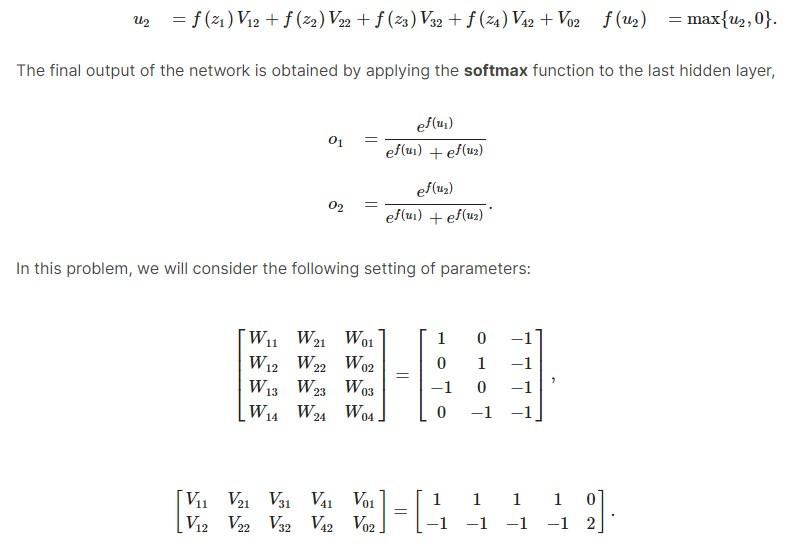

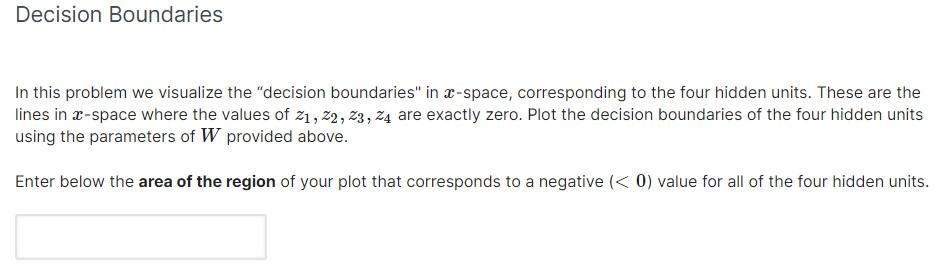

1. Neural Networks

In this problem we will analyze a simple neural network to understand its classification properties. Consider the neural network given in the figure below, with ReLU activation functions (denoted by $f$) on all neurons and a softmax activation function in the output layer.

(Figure: network diagram with an input layer, a hidden layer, and an output layer.)

Given an input $x = [x_1, x_2]^T$, the hidden units in the network are activated in stages, as described by the following equations:

$$
\begin{aligned}
z_1 &= x_1 W_{11} + x_2 W_{21} + W_{01}, & f(z_1) &= \max\{z_1, 0\} \\
z_2 &= x_1 W_{12} + x_2 W_{22} + W_{02}, & f(z_2) &= \max\{z_2, 0\} \\
z_3 &= x_1 W_{13} + x_2 W_{23} + W_{03}, & f(z_3) &= \max\{z_3, 0\} \\
z_4 &= x_1 W_{14} + x_2 W_{24} + W_{04}, & f(z_4) &= \max\{z_4, 0\} \\
u_1 &= f(z_1) V_{11} + f(z_2) V_{21} + f(z_3) V_{31} + f(z_4) V_{41} + V_{01}, & f(u_1) &= \max\{u_1, 0\} \\
u_2 &= f(z_1) V_{12} + f(z_2) V_{22} + f(z_3) V_{32} + f(z_4) V_{42} + V_{02}, & f(u_2) &= \max\{u_2, 0\}
\end{aligned}
$$

The final output of the network is obtained by applying the softmax function to the last hidden layer:

$$
o_1 = \frac{e^{f(u_1)}}{e^{f(u_1)} + e^{f(u_2)}}, \qquad o_2 = \frac{e^{f(u_2)}}{e^{f(u_1)} + e^{f(u_2)}}
$$

In this problem, we will consider the following setting of parameters:

$$
\begin{bmatrix} W_{11} & W_{21} & W_{01} \\ W_{12} & W_{22} & W_{02} \\ W_{13} & W_{23} & W_{03} \\ W_{14} & W_{24} & W_{04} \end{bmatrix}
= \begin{bmatrix} 1 & 0 & -1 \\ 0 & 1 & -1 \\ -1 & 0 & -1 \\ 0 & -1 & -1 \end{bmatrix},
\qquad
\begin{bmatrix} V_{11} & V_{21} & V_{31} & V_{41} & V_{01} \\ V_{12} & V_{22} & V_{32} & V_{42} & V_{02} \end{bmatrix}
= \begin{bmatrix} 1 & 1 & 1 & 1 & 0 \\ -1 & -1 & -1 & -1 & 2 \end{bmatrix}
$$

Decision Boundaries

In this problem we visualize the "decision boundaries" in $x$-space corresponding to the four hidden units. These are the lines in $x$-space where the values of $z_1, z_2, z_3, z_4$ are exactly zero. Plot the decision boundaries of the four hidden units using the parameters of $W$ provided above. Enter below the area of the region of your plot that corresponds to a negative (
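The staged equations and the decision-boundary idea can be checked numerically. Below is a minimal Python sketch, assuming the parameter values W = [[1, 0, -1], [0, 1, -1], [-1, 0, -1], [0, -1, -1]] (rows ordered as (W_1i, W_2i, W_0i)) and V = [[1, 1, 1, 1, 0], [-1, -1, -1, -1, 2]] (rows ordered as (V_1j, V_2j, V_3j, V_4j, V_0j)). The helper names `hidden_preactivations`, `forward`, `boundary` and `region_area` are hypothetical, and the grid-based area estimate is a numerical approximation chosen for illustration, not the intended pen-and-paper method. The example predicate used at the end (all four pre-activations negative) is only one possible reading of the region being asked about.

```python
import math

# Assumed parameters. Row i of W is (W_1i, W_2i, W_0i) for hidden unit z_i.
W = [
    (1.0, 0.0, -1.0),
    (0.0, 1.0, -1.0),
    (-1.0, 0.0, -1.0),
    (0.0, -1.0, -1.0),
]
# Row j of V is (V_1j, V_2j, V_3j, V_4j, V_0j) for unit u_j.
V = [
    (1.0, 1.0, 1.0, 1.0, 0.0),
    (-1.0, -1.0, -1.0, -1.0, 2.0),
]


def relu(t):
    """ReLU activation f(t) = max{t, 0}."""
    return max(t, 0.0)


def hidden_preactivations(x1, x2):
    """Return [z_1, ..., z_4] with z_i = x1*W_1i + x2*W_2i + W_0i."""
    return [x1 * w1 + x2 * w2 + w0 for (w1, w2, w0) in W]


def forward(x1, x2):
    """Return (o_1, o_2): two ReLU layers followed by a softmax output."""
    fz = [relu(z) for z in hidden_preactivations(x1, x2)]
    # u_j = sum_i f(z_i) * V_ij + V_0j, then f(u_j) = max{u_j, 0}.
    fu = [relu(sum(f * v for f, v in zip(fz, row[:4])) + row[4]) for row in V]
    # Softmax over f(u_1), f(u_2); subtracting the max avoids overflow.
    m = max(fu)
    exps = [math.exp(a - m) for a in fu]
    total = sum(exps)
    return tuple(e / total for e in exps)


def boundary(w1, w2, w0):
    """Describe the line x1*w1 + x2*w2 + w0 = 0 in x-space."""
    if w1 == 0 and w2 == 0:
        return "no boundary (constant pre-activation)"
    if w2 == 0:
        return f"x1 = {-w0 / w1:g}"
    if w1 == 0:
        return f"x2 = {-w0 / w2:g}"
    return f"x2 = {-w1 / w2:g} * x1 + {-w0 / w2:g}"


def region_area(predicate, lo=-5.0, hi=5.0, n=400):
    """Estimate the area of {x : predicate(x1, x2)} inside the box [lo, hi]^2.

    Uses the midpoint rule on an n-by-n grid; any part of the region that lies
    outside the box is not counted.
    """
    h = (hi - lo) / n
    count = 0
    for i in range(n):
        for j in range(n):
            x1 = lo + (i + 0.5) * h
            x2 = lo + (j + 0.5) * h
            if predicate(x1, x2):
                count += 1
    return count * h * h


def all_negative(x1, x2):
    """Example predicate: every hidden pre-activation z_i is negative."""
    return all(z < 0 for z in hidden_preactivations(x1, x2))


if __name__ == "__main__":
    for i, w in enumerate(W, start=1):
        print(f"z{i} = 0 on the line {boundary(*w)}")
    print("area where all z_i < 0 (grid estimate):", region_area(all_negative))
    print("output at x = (0, 0):", forward(0.0, 0.0))
```

With these assumed weights every boundary is axis-aligned, so no grid midpoint lands exactly on a boundary line and the estimate is close to the exact area. For other weights, a larger `n` (or a larger box) trades runtime for accuracy.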
