Question:

1 - Packages

First, import all the packages that you will need for this assignment. Some of the packages are given below.
- numpy is the fundamental package for working with matrices in Python.
- matplotlib is a famous library to plot graphs in Python.
- utils.py contains helper functions for this assignment. You do not need to modify code in this file.

2 - Problem Statement

Suppose you are the CEO of a grocery store and are considering different cities for opening a new outlet.
- You would like to expand your business to cities that may give your store higher profits.
- The chain already has stores in various cities, and you have data for profits and populations from those cities.
- You also have data on cities that are candidates for a new grocery store.
- For these cities, you have the city population.

Can you use the data to help you identify which cities may potentially give your business higher profits?

3 - Dataset

You will store the dataset for this task in the variables x_train and y_train.
- x_train is the population of a city.
- y_train is the profit of a grocery store in that city. A negative value for profit indicates a loss.
- Both x_train and y_train are numpy arrays.

View the variables

Before starting on any task, it is useful to get more familiar with your dataset.
- A good place to start is to just print out each variable and see what it contains.

Write the code to print the variable x_train and the type of the variable.

x_train is a numpy array that contains decimal values that are all greater than zero.
- These values represent the city population in units of 10,000 people.
- For example, 6.1101 means that the population for that city is 61,101.

Now, let's print y_train. Similarly, y_train is a numpy array that has decimal values, some negative, some positive.
- These represent your grocery store's average monthly profits in each city, in units of $10,000.
- For example, 17.592 represents $175,920 in average monthly profits for that city.
- A value of -2.6807 represents a $26,807 average monthly loss for that city.

Check the dimensions of your variables

Another useful way to get familiar with your data is to view its dimensions. Please print the shape of x_train and y_train and see how many training examples you have in your dataset.

Visualize your data

It is often useful to understand the data by visualizing it.
- For this dataset, you can use a scatter plot to visualize the data, since it has only two properties to plot (profit and population).
- Many other problems that you will encounter in real life have more than two properties (for example, population, average household income, monthly profits, monthly sales). When you have more than two properties, you can still use a scatter plot to see the relationship between each pair of properties.

Your goal is to build a linear regression model to fit this data.
- With this model, you can then input a new city's population and have the model estimate your grocery store's potential monthly profits for that city.

4 - Refresher on linear regression

In this practice lab, you will fit the linear regression parameters $(w,b)$ to your dataset.
- The model function for linear regression, which maps from $x$ (city population) to $y$ (your grocery store's monthly profit for that city), is represented as $f_{w,b}(x) = wx + b$.
- To train a linear regression model, you want to find the $(w,b)$ parameters that best fit your dataset.
- To compare how one choice of $(w,b)$ is better or worse than another choice, you can evaluate it with a cost function $J(w,b)$.
- $J$ is a function of $(w,b)$. That is, the value of the cost $J(w,b)$ depends on the value of $(w,b)$.
- The choice of $(w,b)$ that fits your data best is the one that has the smallest cost $J(w,b)$.
- To find the values $(w,b)$ that get the smallest possible cost $J(w,b)$, you can use a method called gradient descent.
- With each step of gradient descent, your parameters $(w,b)$ come closer to the optimal values that will achieve the lowest cost $J(w,b)$.
- The trained linear regression model can then take the input feature $x$ (city population) and output a prediction $f_{w,b}(x)$ (predicted monthly profit for a grocery store in that city).

5 - Compute Cost

Gradient descent involves repeated steps to adjust the values of your parameters $(w,b)$ to gradually get a smaller and smaller cost $J(w,b)$.
- At each step of gradient descent, it will be helpful for you to monitor your progress by computing the cost $J(w,b)$ as $(w,b)$ gets updated.
- In this section, you will implement a function to calculate $J(w,b)$ so that you can check the progress of your gradient descent implementation.

Cost function

As you may recall from the lecture, for one variable, the cost function for linear regression $J(w,b)$ is defined as

$$J(w,b) = \frac{1}{2m} \sum_{i=0}^{m-1} \left(f_{w,b}(x^{(i)}) - y^{(i)}\right)^2$$

- You can think of $f_{w,b}(x^{(i)})$ as the model's prediction of your grocery store's profit, as opposed to $y^{(i)}$, which is the actual profit that is recorded in the data.
- $m$ is the number of training examples in the dataset.

Model prediction

For linear regression with one variable, the prediction of the model $f_{w,b}$ for an example $x^{(i)}$ is represented as:

$$f_{w,b}(x^{(i)}) = wx^{(i)} + b$$

This is the equation for a line, with an intercept $b$ and a slope $w$.

Implementation

Please complete the compute_cost() function below to compute the cost $J(w,b)$.

Step 1

Complete the compute_cost function below to:
- Iterate over the training examples, and for each example, compute:
  - The prediction of the model for that example: $f_{w,b}(x^{(i)}) = wx^{(i)} + b$
  - The cost for that example: $cost^{(i)} = \left(f_{w,b}(x^{(i)}) - y^{(i)}\right)^2$
- Return the total cost over all examples: $J(w,b) = \frac{1}{2m} \sum_{i=0}^{m-1} cost^{(i)}$
  - Here, $m$ is the number of training examples and $\sum$ is the summation operator.

6 - Gradient descent

In this section, you will implement the gradient for the parameters $w, b$ for linear regression.
As described in the lecture videos, the gradient descent algorithm is:

$$\begin{aligned}
&\text{repeat until convergence: } \{ \\
&\quad b := b - \alpha \frac{\partial J(w,b)}{\partial b} \\
&\quad w := w - \alpha \frac{\partial J(w,b)}{\partial w} \\
&\}
\end{aligned}$$

where the parameters $w, b$ are both updated simultaneously, $\alpha$ is the learning rate, and

$$\frac{\partial J(w,b)}{\partial b} = \frac{1}{m} \sum_{i=0}^{m-1} \left(f_{w,b}(x^{(i)}) - y^{(i)}\right)$$

$$\frac{\partial J(w,b)}{\partial w} = \frac{1}{m} \sum_{i=0}^{m-1} \left(f_{w,b}(x^{(i)}) - y^{(i)}\right) x^{(i)}$$

- $m$ is the number of training examples in the dataset.
- $f_{w,b}(x^{(i)})$ is the model's prediction, while $y^{(i)}$ is the target value.

You will implement a function called compute_gradient which calculates $\frac{\partial J(w,b)}{\partial w}$ and $\frac{\partial J(w,b)}{\partial b}$.

Step 2

Please complete the compute_gradient function to:
- Iterate over the training examples, and for each example, compute:
  - The prediction of the model for that example: $f_{w,b}(x^{(i)}) = wx^{(i)} + b$
  - The gradient for the parameters $w, b$ from that example:
    $$\frac{\partial J(w,b)}{\partial b}^{(i)} = f_{w,b}(x^{(i)}) - y^{(i)}$$
    $$\frac{\partial J(w,b)}{\partial w}^{(i)} = \left(f_{w,b}(x^{(i)}) - y^{(i)}\right) x^{(i)}$$
- Return the total gradient update from all the examples:
  $$\frac{\partial J(w,b)}{\partial b} = \frac{1}{m} \sum_{i=0}^{m-1} \frac{\partial J(w,b)}{\partial b}^{(i)}$$
  $$\frac{\partial J(w,b)}{\partial w} = \frac{1}{m} \sum_{i=0}^{m-1} \frac{\partial J(w,b)}{\partial w}^{(i)}$$
  - Here, $m$ is the number of training examples and $\sum$ is the summation operator.

The end of the linear regression lab!
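The two functions asked for in Step 1 and Step 2, plus a loop that applies the update rule, might be sketched in Python with NumPy as follows. This is a minimal, loop-based sketch that mirrors the formulas above term by term; it is not the lab's reference solution. The signatures `compute_cost(x, y, w, b)` and `compute_gradient(x, y, w, b)` returning `(dj_dw, dj_db)` are assumptions, as is the helper `gradient_descent` and the toy dataset, which is made up (it follows $y = 2x + 1$) rather than taken from the lab.

```python
import numpy as np


def compute_cost(x, y, w, b):
    """Squared-error cost J(w,b) = 1/(2m) * sum_i (f_wb(x^(i)) - y^(i))^2."""
    m = x.shape[0]  # number of training examples
    total_cost = 0.0
    for i in range(m):
        f_wb = w * x[i] + b          # model prediction for example i
        cost = (f_wb - y[i]) ** 2    # squared error cost^(i)
        total_cost += cost
    return total_cost / (2 * m)


def compute_gradient(x, y, w, b):
    """Return (dj_dw, dj_db), the partial derivatives of J(w,b)."""
    m = x.shape[0]
    dj_dw = 0.0
    dj_db = 0.0
    for i in range(m):
        f_wb = w * x[i] + b
        dj_db_i = f_wb - y[i]            # per-example gradient w.r.t. b
        dj_dw_i = (f_wb - y[i]) * x[i]   # per-example gradient w.r.t. w
        dj_db += dj_db_i
        dj_dw += dj_dw_i
    return dj_dw / m, dj_db / m          # average over the m examples


def gradient_descent(x, y, w_in, b_in, alpha, num_iters):
    """Hypothetical helper: run num_iters simultaneous updates of w and b."""
    w, b = w_in, b_in
    cost_history = []
    for _ in range(num_iters):
        # Both gradients are computed at the current (w, b) before either
        # parameter changes, which makes the update simultaneous.
        dj_dw, dj_db = compute_gradient(x, y, w, b)
        w = w - alpha * dj_dw
        b = b - alpha * dj_db
        cost_history.append(compute_cost(x, y, w, b))  # track J(w,b) per step
    return w, b, cost_history


# Toy usage on made-up data that follows y = 2x + 1 (not the lab's dataset)
x_toy = np.array([1.0, 2.0, 3.0])
y_toy = np.array([3.0, 5.0, 7.0])
w_fit, b_fit, _ = gradient_descent(x_toy, y_toy, w_in=0.0, b_in=0.0,
                                   alpha=0.1, num_iters=2000)
print(f"w = {w_fit:.4f}, b = {b_fit:.4f}")  # should be close to w = 2, b = 1
```

With the lab's own data you would pass x_train and y_train instead of the toy arrays; the learning rate and iteration count above were chosen only for this toy dataset. The explicit loops follow the step-by-step instructions; a vectorized form such as `np.sum((w * x + b - y) ** 2) / (2 * m)` computes the same cost without a Python loop.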

Step by Step Solution

There are 3 Steps involved in it

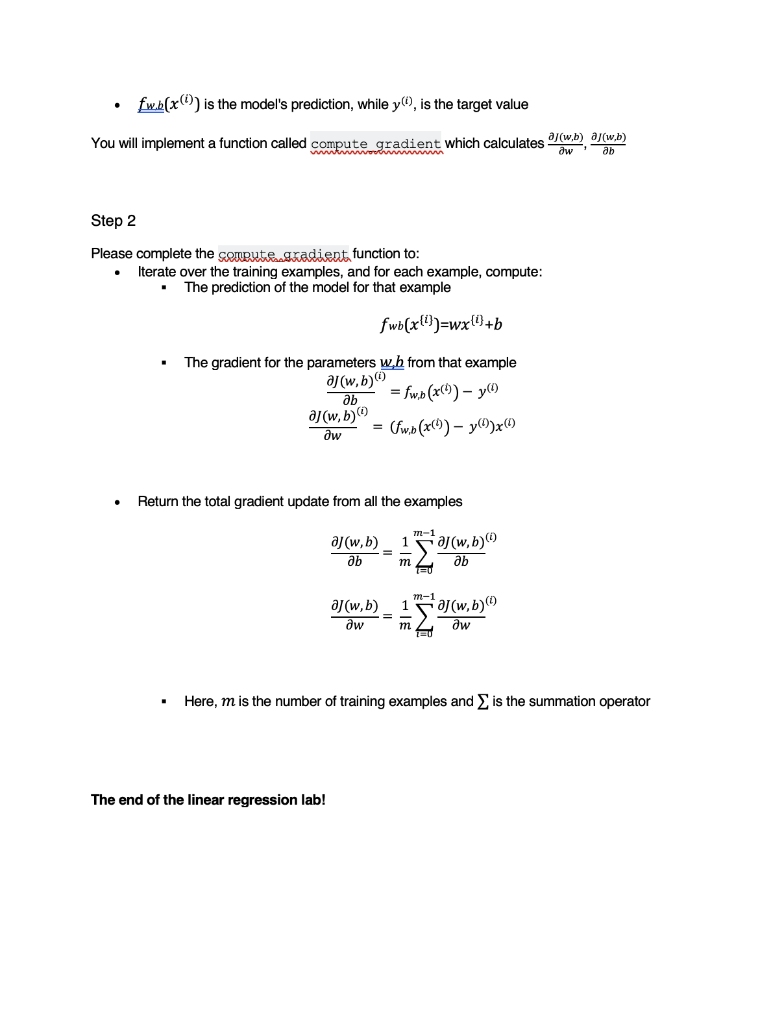

Get step-by-step solutions from verified subject matter experts