Question: 1 Purpose MapReduce [1, 2] is a programming model that allows processing on large datasets using two functions: map and reduce. It allows automatic parallelization

![1 Purpose MapReduce [1, 2] is a programming model that allows](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f09570b9377_92866f0957029375.jpg)

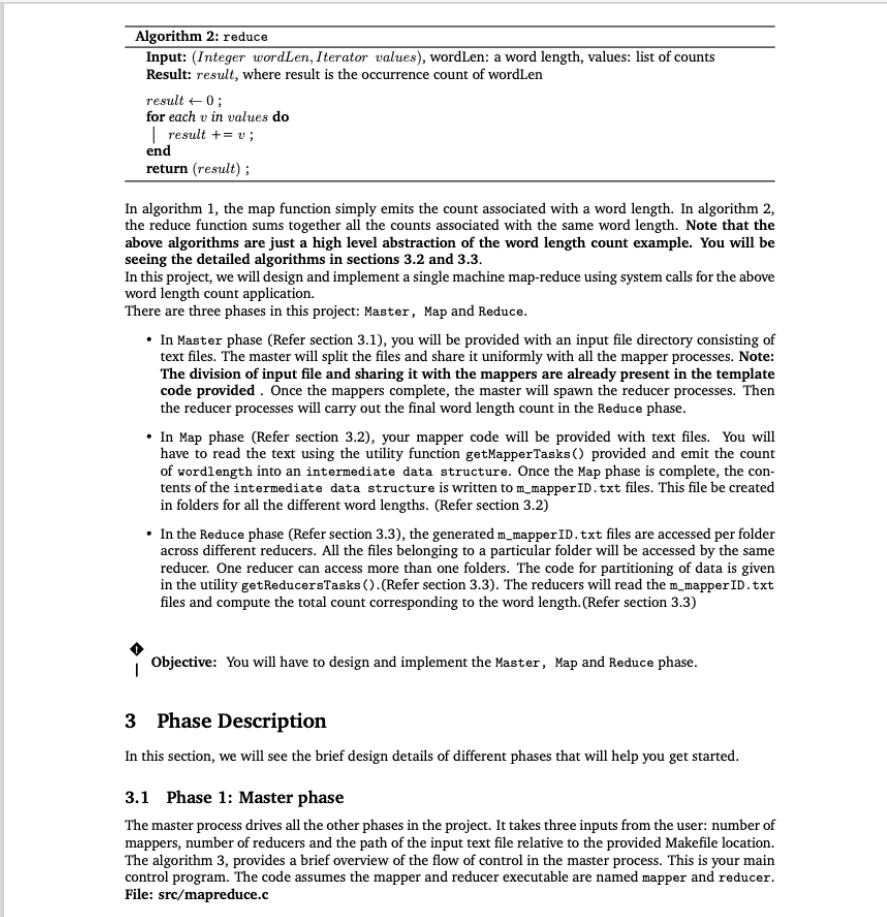

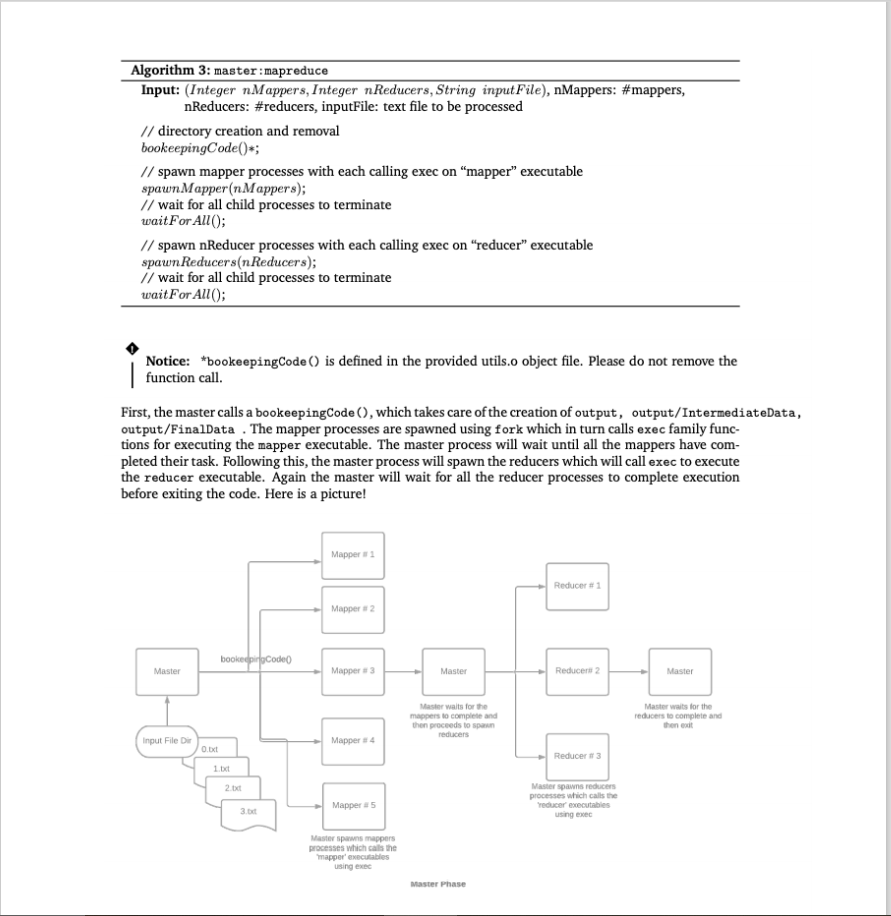

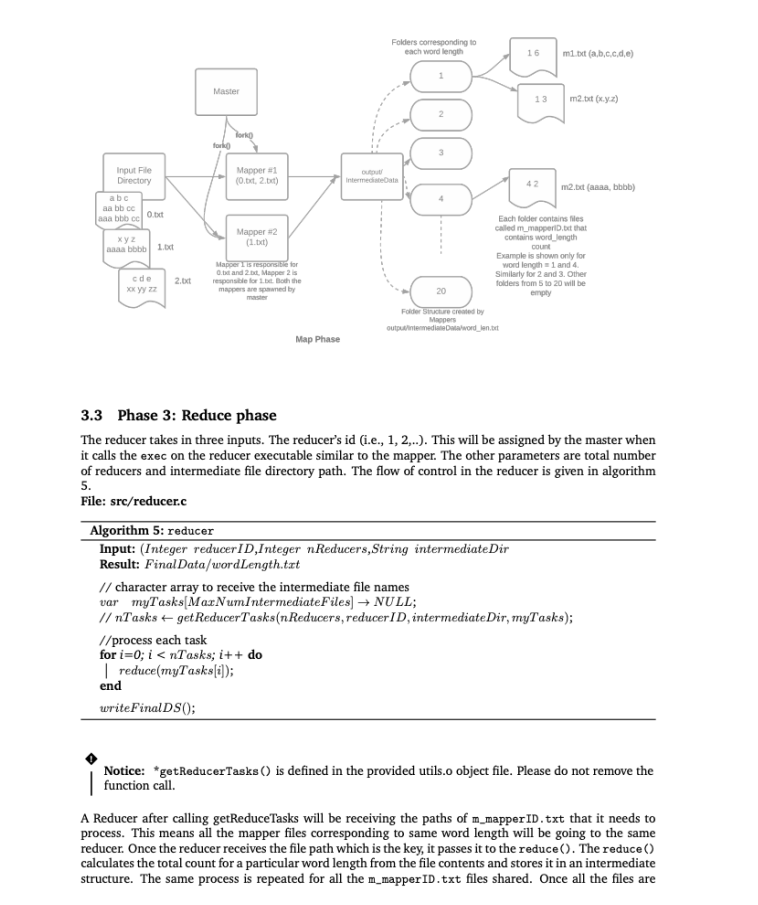

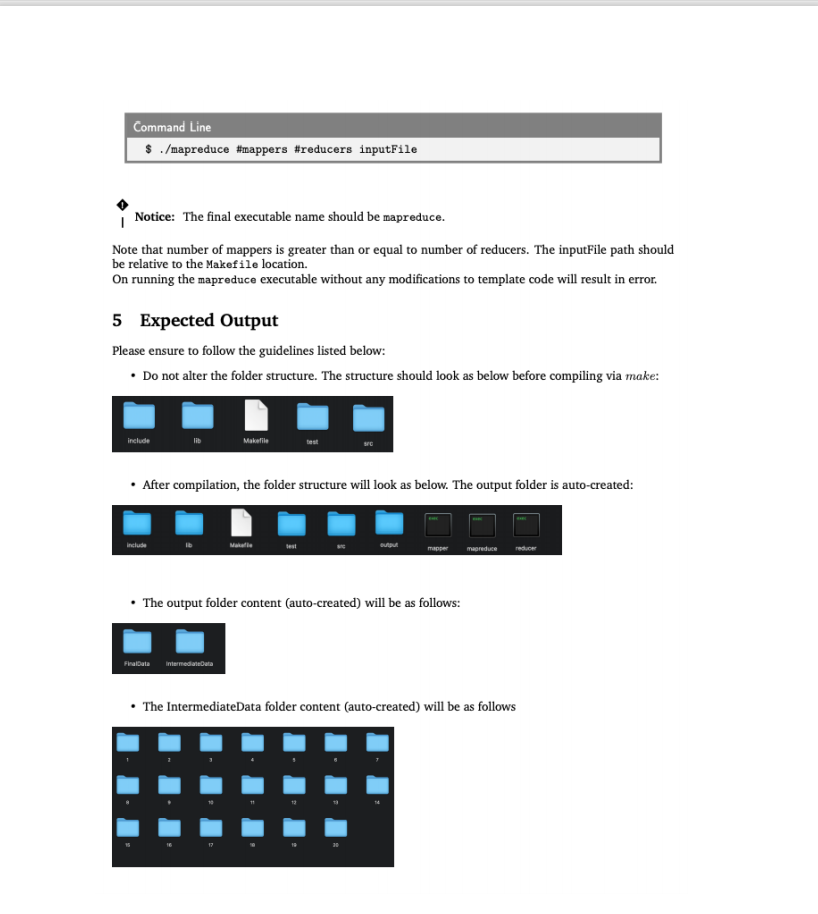

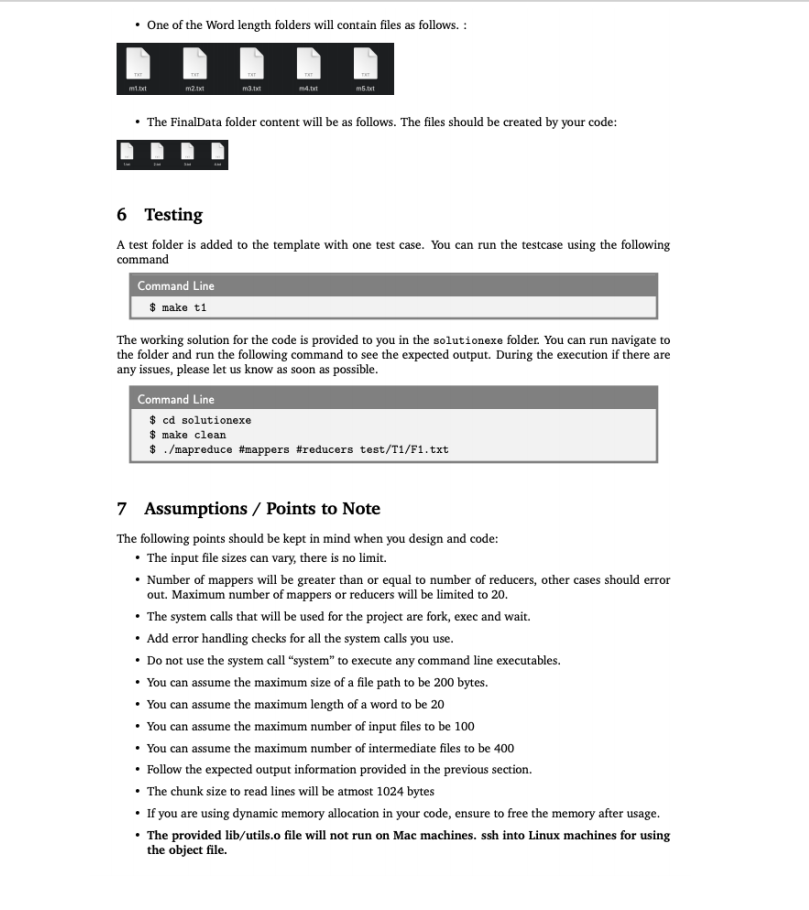

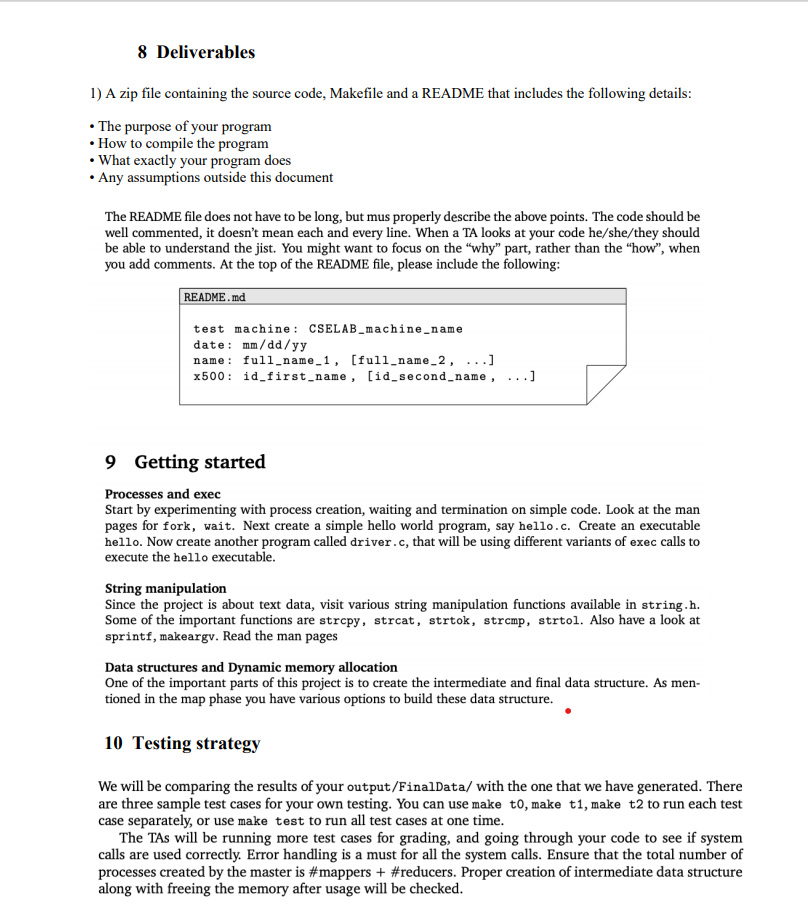

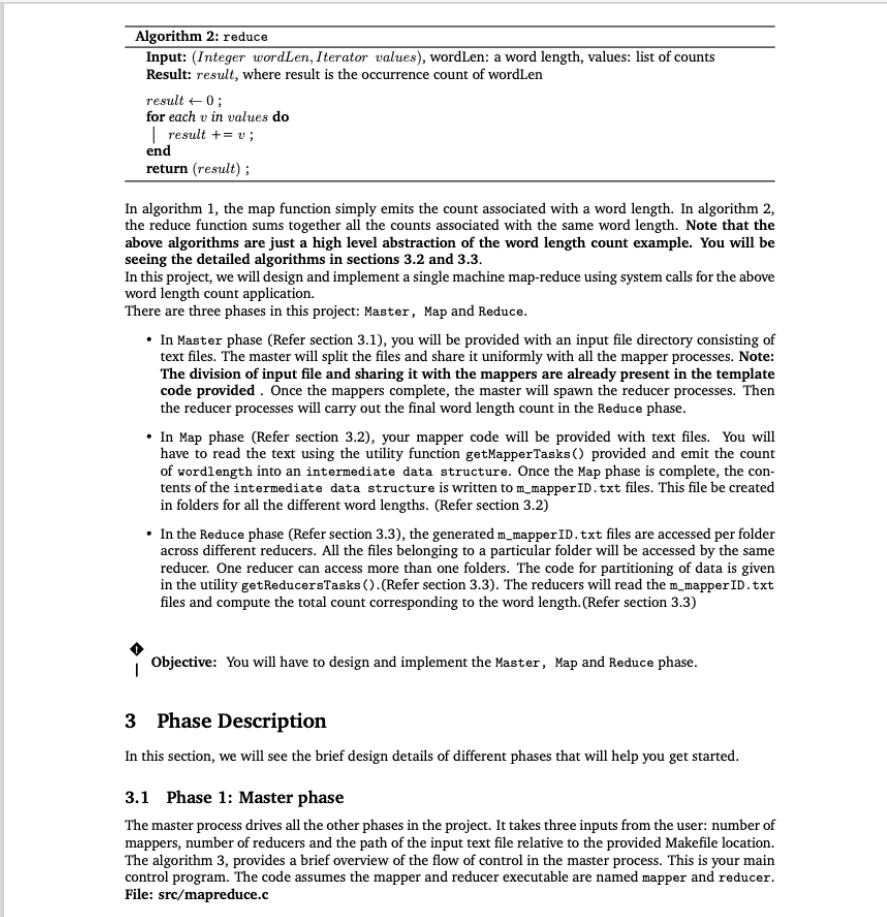

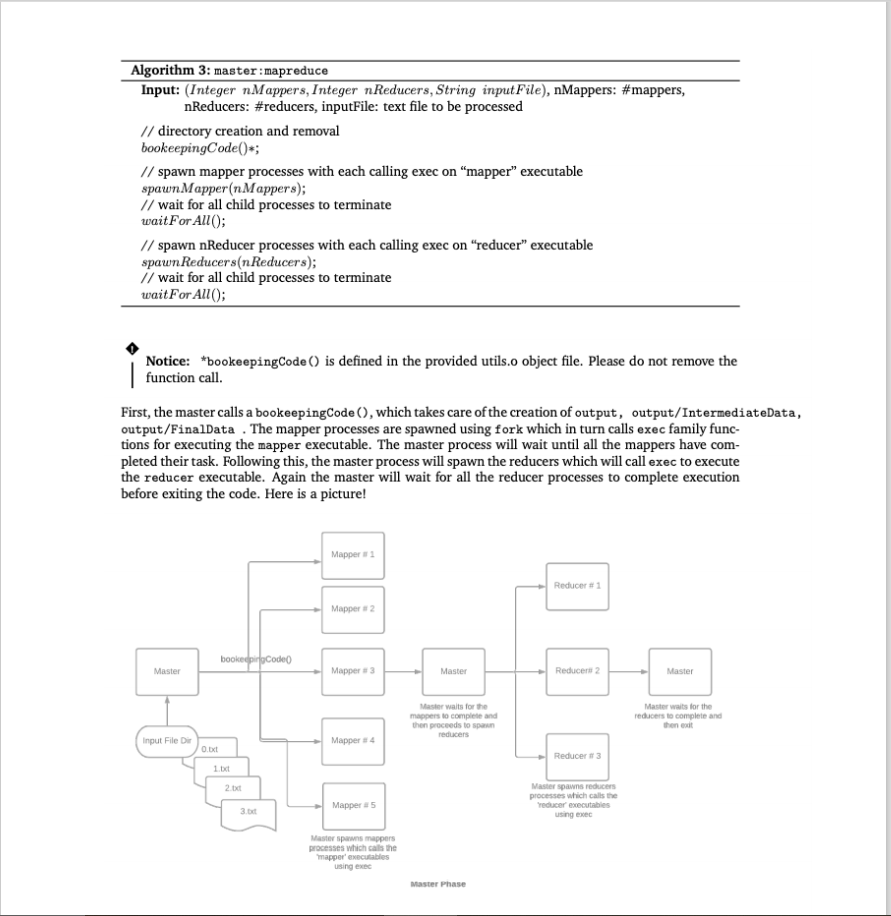

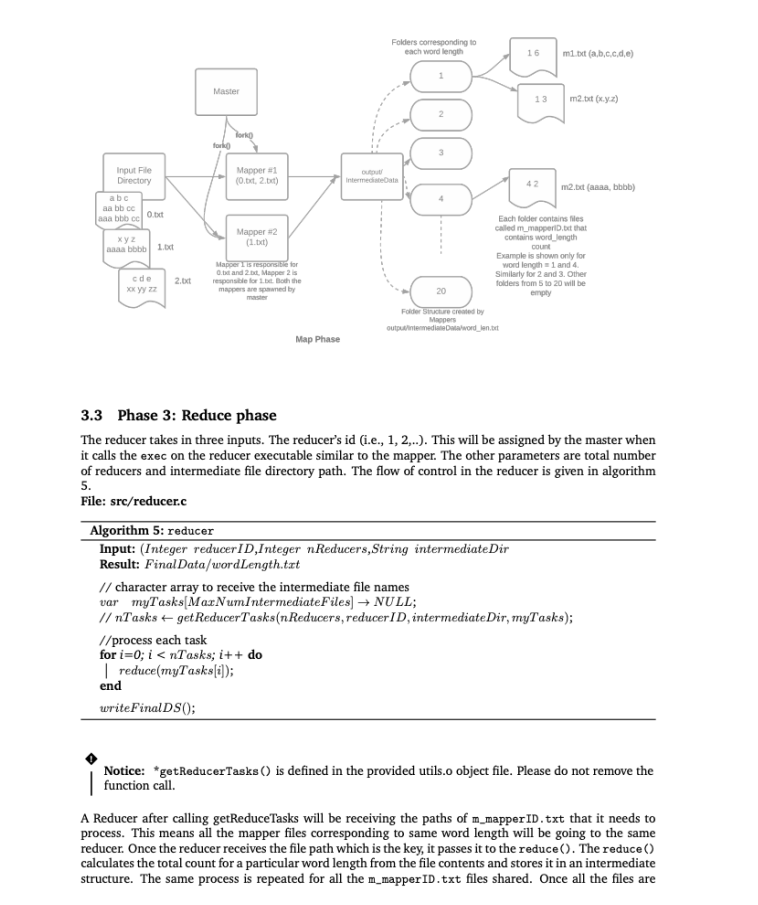

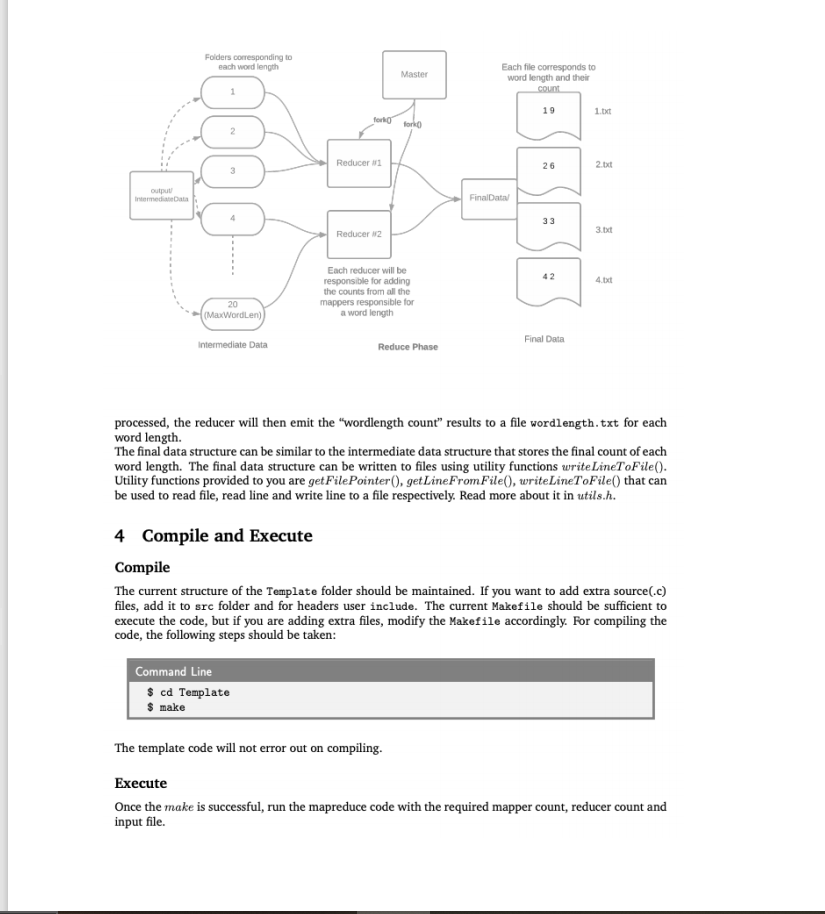

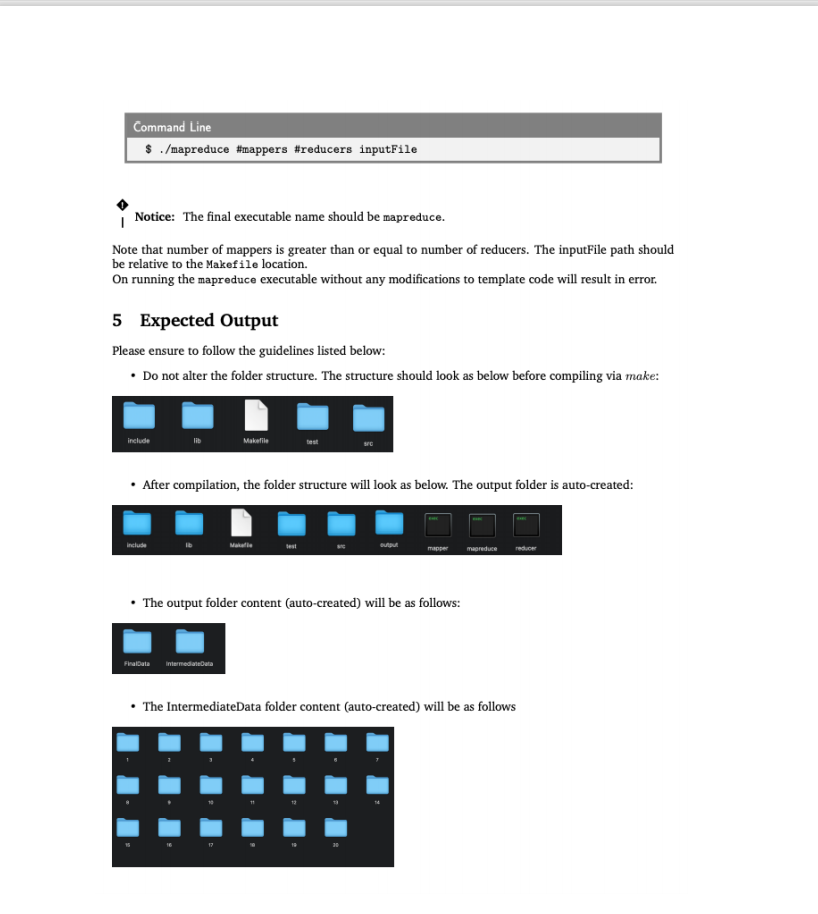

1 Purpose MapReduce [1, 2] is a programming model that allows processing on large datasets using two functions: map and reduce. It allows automatic parallelization of computation across multiple machines using multiple processes. Mapreduce model is widely used in industry for Big Data Analytics and is the de- facto standard for Big Data computing! In this project we will explore a simple verison of mapreduce using operating system primitives on a single machine which will use fork, exec and wait to run map and reduce functions. Utility functions that will help you with building the map and reduce tasks are provided with the project template. You will be given binaries for utilities that will run on the CSE-IT lab machines, we are NOT giving you source for the utilities since you may be asked to write them in future projects. Please adhere to the output formats provided in each section. 2 Problem Statement The mapreduce programming model consist of two functions: map and reduce. The map function takes in pairs, processes them and produces a set of intermediate pairs. The key and value(s) are determined from input files. The intermediate pairs are then grouped based on the key. The reduce function will then reduce/merge the grouped intermediate values based on the key to produce the final result. In this assignment, you will use map and reduce logic paradigm for counting the number of words of different lengths in a large collection of documents. You can refer to the Word Count Example [1, 2], where the objective is to calculate the frequency of each word in the list of documents. In the map phase, each mapper is responsible for a subset of input files and a set create intermediate files word.txt that contains the frequency of each word calculated by a specific mapper. Master then shuffles the data in such a way that one reducer receives all the files related to a specific word. And, in the reduce phase each reducer calculates the final count of each word and store the result in the file. Algorithm 1: map Input: (String fileName, String value), fileName: document name, value: document content Result: (len, count), where len is the word length and count is the number of occurrences of len in fileName for each line l in value do EmitIntermediate(); end Algorithm 2: reduce Input: (Integer wordLen, Iterator values), wordLen: a word length, values: list of counts Result: result, where result is the occurrence count of wordLen result = 0; for each v in values do | result += v; return (result); end In algorithm 1, the map function simply emits the count associated with a word length. In algorithm 2, the reduce function sums together all the counts associated with the same word length. Note that the above algorithms are just a high level abstraction of the word length count example. You will be seeing the detailed algorithms in sections 3.2 and 3.3. In this project, we will design and implement a single machine map-reduce using system calls for the above word length count application. There are three phases in this project: Master, Map and Reduce. In Master phase (Refer section 3.1), you will be provided with an input file directory consisting of text files. The master will split the files and share it uniformly with all the mapper processes. Note: The division of input file and sharing it with the mappers are already present in the template code provided. Once the mappers complete, the master will spawn the reducer processes. Then the reducer processes will carry out the final word length count in the Reduce phase. In Map phase (Refer section 3.2), your mapper code will be provided with text files. You will have to read the text using the utility function getMapperTasks() provided and emit the count of wordlength into an intermediate data structure. Once the Map phase is complete, the con- tents of the intermediate data structure is written to m_mapper ID.txt files. This file be created in folders for all the different word lengths. (Refer section 3.2) In the Reduce phase (Refer section 3.3), the generated m_mapper ID.txt files are accessed per folder across different reducers. All the files belonging to a particular folder will be accessed by the same reducer. One reducer can access more than one folders. The code for partitioning of data is given in the utility getReducersTasks().(Refer section 3.3). The reducers will read the m_mapper ID.txt files and compute the total count corresponding to the word length.(Refer section 3.3) Objective: You will have to design and implement the Master, Map and Reduce phase. 3 Phase Description In this section, we will see the brief design details of different phases that will help you get started. 3.1 Phase 1: Master phase The master process drives all the other phases in the project. It takes three inputs from the user: number of mappers, number of reducers and the path of the input text file relative to the provided Makefile location. The algorithm 3, provides a brief overview of the flow of control in the master process. This is your main control program. The code assumes the mapper and reducer executable are named mapper and reducer. File: src/mapreduce.c Algorithm 3: master:mapreduce Input: (Integer n Mappers, Integer n Reducers, String input File), nMappers: #mappers, nReducers: #reducers, inputFile: text file to be processed // directory creation and removal bookeepingCode()*; // spawn mapper processes with each calling exec on mapper" executable spawn Mapper(n Mappers); // wait for all child processes to terminate wait For All(); // spawn nReducer processes with each calling exec on reducer executable spawn Reducers(n Reducers); // wait for all child processes to terminate wait For All(); Notice: *bookeepingCode () is defined in the provided utils.o object file. Please do not remove the function call. First, the master calls a bookeepingCode (), which takes care of the creation of output, output/IntermediateData, output/FinalData . The mapper processes are spawned using fork which in turn calls exec family func- tions for executing the mapper executable. The master process will wait until all the mappers have com- pleted their task. Following this, the master process will spawn the reducers which will call exec to execute the reducer executable. Again the master will wait for all the reducer processes to complete execution before exiting the code. Here is a picture! Mapper Reducer #1 Mapper #2 booked Code Master Mapper Master Reducers 2 Master Master waits for the mappens to complete and the proceeds to reducers Master waits for the reducers to complete and then exit Input File Mapper 4 0.txt Reducer # 3 1.x 2x Master Spawns reduces processes which calls the reducer executables using exec 3.xt Mapper 15 Master spawns mappers processes which as the mapper executables using Master Phase 3.2 Phase 2: Map phase The mapper takes in three inputs. The mapper's id (i.e. 1, 2, ...). This will be assigned by the master when it calls exec on the mapper executable (i.e. it must be passed to exec as a command-line argument). Similarly, total number of mappers and path to the input file directory will be passed. The flow of control in mapper is defined in the algorithm 4. File: src/mapper.c Algorithm 4: mapper Input: (Integer mapperID, Integer n Mappers, String input FileDir) Result: (m_mapperID.txt), // variable to store input file names var myTasks + NULL; // get list of input files to be processed by this mapper nTasks + get MapperTasks(neMappers, mapperID, inputFileDir, myTasks); 1/process each task for i=0; i count. The utility getMapperTasks() will be used to retrieve the input files that a particular mapper should process. The list of input file names will be stored in myTasks array. So, if a mapper processes n files and each file contains some words so the mapper will count all the occurrences of a particular word length that was encountered in those n files. There are some other utility functions provided getFilePointer(). get Line FromFile(), writeLineToFile() that can be used to read file, read line and write line to a file respectively. Read more about it in utils.h. These utility functions will be used in Reduce phase too. Notice: A word should be composed of consecutive characters c, where c {A...., .........9} Words are separated by whitespaces as delimeters. Example: Thi's is a text* Oh gr8 The words in this sentence are {Thi's, is., a, text*, Oh, gr8} Words are case sensitive, which means "text" and "Text" are different. Folders corresponding to each word length 16 mi bit (a,b,c.c,d,e) Master 13 m2.tit (x,y) Torko forko Jooo Input File Directory Mapper (0.txt, 2.bit) IntermediateData m2.bit (ana, bbbb) abc aa bb cc saa bbb cc Obct xyz ? aaaabbbb 1. Mapper #2 (1.txt) Mapper is responsible for 0.bt and 2 bt Mapper 2 is responsible for both the cdo Each folder contains les called m_mapperit that contains word_longth count Example is shown only for word length 1 and 4 Similarly for 2 and 3. Other folders from 5 to 20 will be 20 empty Folder Scre created by Meepers output intermediateData/word_len.be 2.txt Map Phase 3.3 Phase 3: Reduce phase The reducer takes in three inputs. The reducer's id (i.e., 1, 2,..). This will be assigned by the master when it calls the exec on the reducer executable similar to the mapper. The other parameters are total number of reducers and intermediate file directory path. The flow of control in the reducer is given in algorithm 5. File: src/reducer.c Algorithm 5: reducer Input: (Integer reducerID,Integer nReducers, String intermediate Dir Result: Final Data/wordLength.txt // character array to receive the intermediate file names var myTasks[MaxNumIntermediate Files) + NULL; // nTasks + get ReducerTasks(n Reducers, reducerID, intermediate Dir, myTasks); //process each task for i=0; i pairs, processes them and produces a set of intermediate pairs. The key and value(s) are determined from input files. The intermediate pairs are then grouped based on the key. The reduce function will then reduce/merge the grouped intermediate values based on the key to produce the final result. In this assignment, you will use map and reduce logic paradigm for counting the number of words of different lengths in a large collection of documents. You can refer to the Word Count Example [1, 2], where the objective is to calculate the frequency of each word in the list of documents. In the map phase, each mapper is responsible for a subset of input files and a set create intermediate files word.txt that contains the frequency of each word calculated by a specific mapper. Master then shuffles the data in such a way that one reducer receives all the files related to a specific word. And, in the reduce phase each reducer calculates the final count of each word and store the result in the file. Algorithm 1: map Input: (String fileName, String value), fileName: document name, value: document content Result: (len, count), where len is the word length and count is the number of occurrences of len in fileName for each line l in value do EmitIntermediate(); end Algorithm 2: reduce Input: (Integer wordLen, Iterator values), wordLen: a word length, values: list of counts Result: result, where result is the occurrence count of wordLen result = 0; for each v in values do | result += v; return (result); end In algorithm 1, the map function simply emits the count associated with a word length. In algorithm 2, the reduce function sums together all the counts associated with the same word length. Note that the above algorithms are just a high level abstraction of the word length count example. You will be seeing the detailed algorithms in sections 3.2 and 3.3. In this project, we will design and implement a single machine map-reduce using system calls for the above word length count application. There are three phases in this project: Master, Map and Reduce. In Master phase (Refer section 3.1), you will be provided with an input file directory consisting of text files. The master will split the files and share it uniformly with all the mapper processes. Note: The division of input file and sharing it with the mappers are already present in the template code provided. Once the mappers complete, the master will spawn the reducer processes. Then the reducer processes will carry out the final word length count in the Reduce phase. In Map phase (Refer section 3.2), your mapper code will be provided with text files. You will have to read the text using the utility function getMapperTasks() provided and emit the count of wordlength into an intermediate data structure. Once the Map phase is complete, the con- tents of the intermediate data structure is written to m_mapper ID.txt files. This file be created in folders for all the different word lengths. (Refer section 3.2) In the Reduce phase (Refer section 3.3), the generated m_mapper ID.txt files are accessed per folder across different reducers. All the files belonging to a particular folder will be accessed by the same reducer. One reducer can access more than one folders. The code for partitioning of data is given in the utility getReducersTasks().(Refer section 3.3). The reducers will read the m_mapper ID.txt files and compute the total count corresponding to the word length.(Refer section 3.3) Objective: You will have to design and implement the Master, Map and Reduce phase. 3 Phase Description In this section, we will see the brief design details of different phases that will help you get started. 3.1 Phase 1: Master phase The master process drives all the other phases in the project. It takes three inputs from the user: number of mappers, number of reducers and the path of the input text file relative to the provided Makefile location. The algorithm 3, provides a brief overview of the flow of control in the master process. This is your main control program. The code assumes the mapper and reducer executable are named mapper and reducer. File: src/mapreduce.c Algorithm 3: master:mapreduce Input: (Integer n Mappers, Integer n Reducers, String input File), nMappers: #mappers, nReducers: #reducers, inputFile: text file to be processed // directory creation and removal bookeepingCode()*; // spawn mapper processes with each calling exec on mapper" executable spawn Mapper(n Mappers); // wait for all child processes to terminate wait For All(); // spawn nReducer processes with each calling exec on reducer executable spawn Reducers(n Reducers); // wait for all child processes to terminate wait For All(); Notice: *bookeepingCode () is defined in the provided utils.o object file. Please do not remove the function call. First, the master calls a bookeepingCode (), which takes care of the creation of output, output/IntermediateData, output/FinalData . The mapper processes are spawned using fork which in turn calls exec family func- tions for executing the mapper executable. The master process will wait until all the mappers have com- pleted their task. Following this, the master process will spawn the reducers which will call exec to execute the reducer executable. Again the master will wait for all the reducer processes to complete execution before exiting the code. Here is a picture! Mapper Reducer #1 Mapper #2 booked Code Master Mapper Master Reducers 2 Master Master waits for the mappens to complete and the proceeds to reducers Master waits for the reducers to complete and then exit Input File Mapper 4 0.txt Reducer # 3 1.x 2x Master Spawns reduces processes which calls the reducer executables using exec 3.xt Mapper 15 Master spawns mappers processes which as the mapper executables using Master Phase 3.2 Phase 2: Map phase The mapper takes in three inputs. The mapper's id (i.e. 1, 2, ...). This will be assigned by the master when it calls exec on the mapper executable (i.e. it must be passed to exec as a command-line argument). Similarly, total number of mappers and path to the input file directory will be passed. The flow of control in mapper is defined in the algorithm 4. File: src/mapper.c Algorithm 4: mapper Input: (Integer mapperID, Integer n Mappers, String input FileDir) Result: (m_mapperID.txt), // variable to store input file names var myTasks + NULL; // get list of input files to be processed by this mapper nTasks + get MapperTasks(neMappers, mapperID, inputFileDir, myTasks); 1/process each task for i=0; i count. The utility getMapperTasks() will be used to retrieve the input files that a particular mapper should process. The list of input file names will be stored in myTasks array. So, if a mapper processes n files and each file contains some words so the mapper will count all the occurrences of a particular word length that was encountered in those n files. There are some other utility functions provided getFilePointer(). get Line FromFile(), writeLineToFile() that can be used to read file, read line and write line to a file respectively. Read more about it in utils.h. These utility functions will be used in Reduce phase too. Notice: A word should be composed of consecutive characters c, where c {A...., .........9} Words are separated by whitespaces as delimeters. Example: Thi's is a text* Oh gr8 The words in this sentence are {Thi's, is., a, text*, Oh, gr8} Words are case sensitive, which means "text" and "Text" are different. Folders corresponding to each word length 16 mi bit (a,b,c.c,d,e) Master 13 m2.tit (x,y) Torko forko Jooo Input File Directory Mapper (0.txt, 2.bit) IntermediateData m2.bit (ana, bbbb) abc aa bb cc saa bbb cc Obct xyz ? aaaabbbb 1. Mapper #2 (1.txt) Mapper is responsible for 0.bt and 2 bt Mapper 2 is responsible for both the cdo Each folder contains les called m_mapperit that contains word_longth count Example is shown only for word length 1 and 4 Similarly for 2 and 3. Other folders from 5 to 20 will be 20 empty Folder Scre created by Meepers output intermediateData/word_len.be 2.txt Map Phase 3.3 Phase 3: Reduce phase The reducer takes in three inputs. The reducer's id (i.e., 1, 2,..). This will be assigned by the master when it calls the exec on the reducer executable similar to the mapper. The other parameters are total number of reducers and intermediate file directory path. The flow of control in the reducer is given in algorithm 5. File: src/reducer.c Algorithm 5: reducer Input: (Integer reducerID,Integer nReducers, String intermediate Dir Result: Final Data/wordLength.txt // character array to receive the intermediate file names var myTasks[MaxNumIntermediate Files) + NULL; // nTasks + get ReducerTasks(n Reducers, reducerID, intermediate Dir, myTasks); //process each task for i=0; i

![1 Purpose MapReduce [1, 2] is a programming model that allows](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f09570b9377_92866f0957029375.jpg)