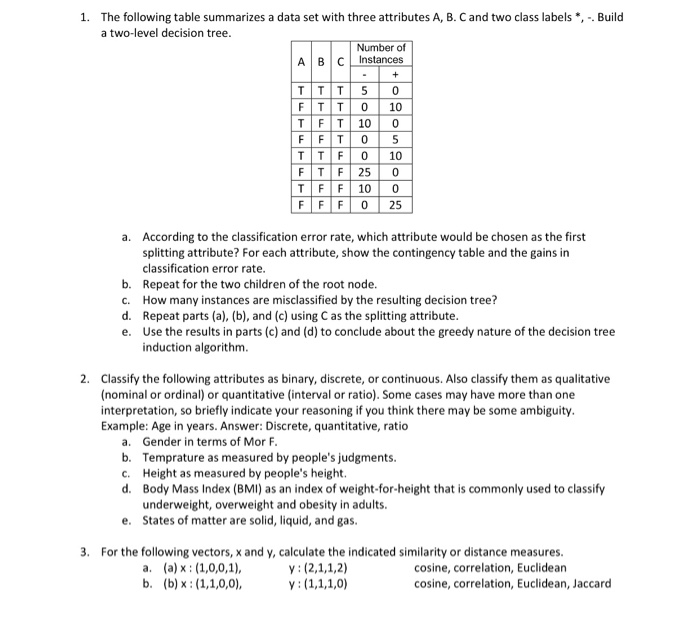

Question:

1. The following table summarizes a data set with three attributes A, B, C and two class labels. Build a two-level decision tree.

[Table with columns A, B, C, and Number of Instances; only fragments survive: F TT 0 10 TT F 010]

a. According to the classification error rate, which attribute would be chosen as the first splitting attribute? For each attribute, show the contingency table and the gains in classification error rate.
b. Repeat for the two children of the root node.
c. How many instances are misclassified by the resulting decision tree?
d. Repeat parts (a), (b), and (c) using C as the splitting attribute.
e. Use the results in parts (c) and (d) to conclude about the greedy nature of the decision tree induction algorithm.

2. Classify the following attributes as binary, discrete, or continuous. Also classify them as qualitative (nominal or ordinal) or quantitative (interval or ratio). Some cases may have more than one interpretation, so briefly indicate your reasoning if you think there may be some ambiguity.

Example: Age in years. Answer: Discrete, quantitative, ratio.

a. Gender in terms of M or F.
b. Temperature as measured by people's judgments.
c. Height as measured by people's height.
d. Body Mass Index (BMI) as an index of weight-for-height that is commonly used to classify underweight, overweight, and obesity in adults.
e. States of matter are solid, liquid, and gas.

3. For the following vectors, x and y, calculate the indicated similarity or distance measures.

a. cosine, correlation, Euclidean
b. cosine, correlation, Euclidean, Jaccard
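The quantities asked for in questions 1 and 3 can be sketched in a few lines of Python. This is a minimal illustration, not a solution. The class counts and the vectors `x` and `y` below are made-up placeholders, because the table and vectors from the question are not available here. The function names are also chosen for this sketch. Classification error of a node is taken as 1 minus the fraction of its majority class, and the gain of a split as the parent's error minus the instance-weighted error of the children.

```python
import math

def error_rate(counts):
    """Classification error of a node: 1 - (majority class fraction)."""
    total = sum(counts)
    return 0.0 if total == 0 else 1.0 - max(counts) / total

def error_gain(parent, children):
    """Parent error minus the instance-weighted error of the child nodes."""
    n = sum(parent)
    weighted = sum(sum(c) / n * error_rate(c) for c in children)
    return error_rate(parent) - weighted

def cosine(x, y):
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

def correlation(x, y):
    """Pearson correlation; returns NaN when either vector is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    denom = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0:
        return float("nan")
    return sum(a * b for a, b in zip(dx, dy)) / denom

def euclidean(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def jaccard(x, y):
    """Jaccard coefficient for binary (0/1) vectors: f11 / (f01 + f10 + f11)."""
    f11 = sum(1 for a, b in zip(x, y) if a == 1 and b == 1)
    nonzero = sum(1 for a, b in zip(x, y) if a == 1 or b == 1)
    return f11 / nonzero if nonzero else float("nan")

# Hypothetical class counts [positive, negative]; NOT the data from question 1.
parent = [50, 50]
children = [[25, 0], [25, 50]]
gain = error_gain(parent, children)

# Hypothetical binary vectors; NOT the vectors from question 3.
x = [1, 0, 1, 1]
y = [1, 1, 0, 1]
results = {
    "cosine": cosine(x, y),
    "correlation": correlation(x, y),
    "euclidean": euclidean(x, y),
    "jaccard": jaccard(x, y),
}
```

To use the sketch on the real exercise, replace `parent` and `children` with the counts from each attribute's contingency table and compare the resulting gains. Note that correlation is undefined for a constant vector, which is why the sketch returns NaN in that case.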
