Question: 1.2 Reward Functions (20 pts) For this problem consider the MDP is shown in Figure1. The numbers in each square represent reward the agent receives

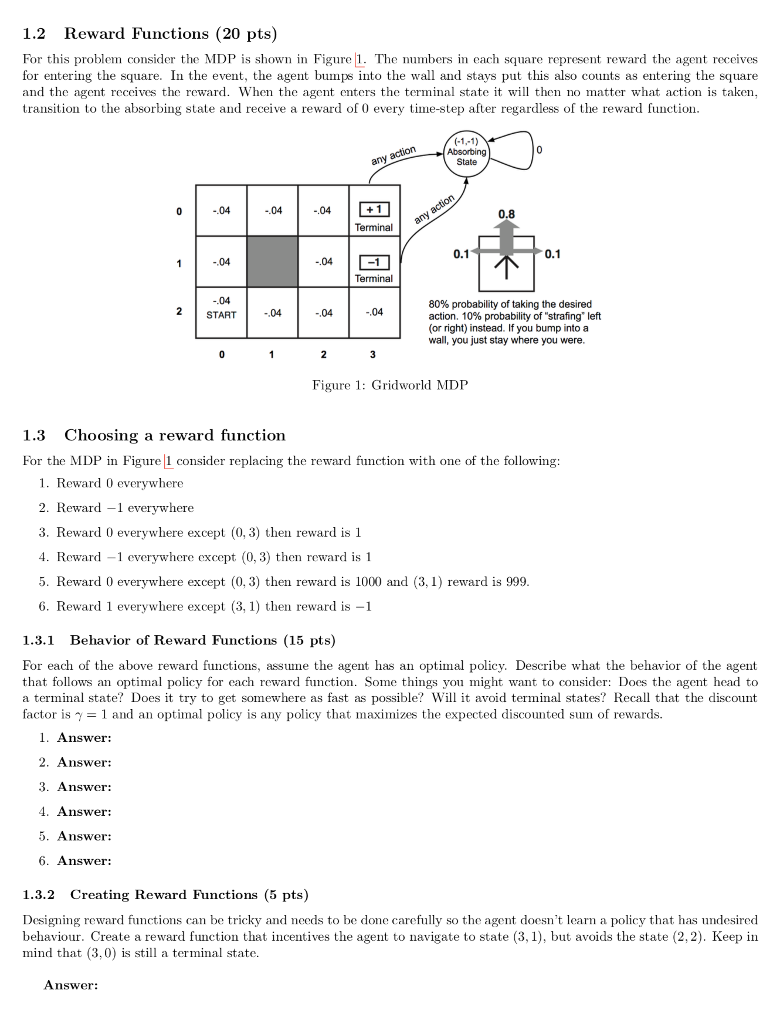

1.2 Reward Functions (20 pts) For this problem consider the MDP is shown in Figure1. The numbers in each square represent reward the agent receives for entering the square. In the event, the agent bumps into the wall and stays put this also counts as entering the square and the agent receives the reward. When the agent enters the terminal state it will then no matter what action is taken, transition to the absorbing state and receive a reward of 0 every tme-step after regardless of the reward function action bsorbing State -.04 04 04 0.8 0.1 -.04 04 Terminal 80% probability of taking the desired action, 10% probability of "strafing, left (or right) instead. If you bump into a wall, you just stay where you were. 2START .04 04 .04 Figure 1: Gridworld MDP 1.3 Choosing a reward function For the MDP in Figure1 consider replacing the reward function with one of the following: 1. Reward 0 everywhere 2. Reward 1 everywhere 3. Reward 0 everywhere except (0, 3) then reward is 1 4. Reward -1 everywhere except (0, 3) then reward is 1 5. Reward 0 everywhere except (0, 3) then reward is 1000 and (3,1) reward is 999 6. Reward 1 everywhere except (3,1) then reward is -1 1.3.1 Behavior of Reward Functions (15 pts) For each of the above reward functions, assune the agent has an optimal policy. Describe what the behavior of the agent that follows an optimal policy for each reward function. Some things you might want to consider: Does the agent head to a terminal state? Does it try to get somewhere as fast as possible? Will it avoid terminal states? Recall that the discount factor is ? = 1 and an optimal policy is any policy that mhaximizes the expected discounted sum of rewards. 1. Answer: 2. Answer: 3. Answer: 4. Answer: 5. Answer: nswer: 1.3.2 Creating Reward Functions (5 pts) Designing reward functions can be tricky and needs to be done carefully so the agent doesn't learn a policy that has undesired behaviour. Create a reward function that incentives the agent to navigate to state (3,1), but avoids the state (2,2). Keep in mind that (3,0) is still a terminal state. Answer: 1.2 Reward Functions (20 pts) For this problem consider the MDP is shown in Figure1. The numbers in each square represent reward the agent receives for entering the square. In the event, the agent bumps into the wall and stays put this also counts as entering the square and the agent receives the reward. When the agent enters the terminal state it will then no matter what action is taken, transition to the absorbing state and receive a reward of 0 every tme-step after regardless of the reward function action bsorbing State -.04 04 04 0.8 0.1 -.04 04 Terminal 80% probability of taking the desired action, 10% probability of "strafing, left (or right) instead. If you bump into a wall, you just stay where you were. 2START .04 04 .04 Figure 1: Gridworld MDP 1.3 Choosing a reward function For the MDP in Figure1 consider replacing the reward function with one of the following: 1. Reward 0 everywhere 2. Reward 1 everywhere 3. Reward 0 everywhere except (0, 3) then reward is 1 4. Reward -1 everywhere except (0, 3) then reward is 1 5. Reward 0 everywhere except (0, 3) then reward is 1000 and (3,1) reward is 999 6. Reward 1 everywhere except (3,1) then reward is -1 1.3.1 Behavior of Reward Functions (15 pts) For each of the above reward functions, assune the agent has an optimal policy. Describe what the behavior of the agent that follows an optimal policy for each reward function. Some things you might want to consider: Does the agent head to a terminal state? Does it try to get somewhere as fast as possible? Will it avoid terminal states? Recall that the discount factor is ? = 1 and an optimal policy is any policy that mhaximizes the expected discounted sum of rewards. 1. Answer: 2. Answer: 3. Answer: 4. Answer: 5. Answer: nswer: 1.3.2 Creating Reward Functions (5 pts) Designing reward functions can be tricky and needs to be done carefully so the agent doesn't learn a policy that has undesired behaviour. Create a reward function that incentives the agent to navigate to state (3,1), but avoids the state (2,2). Keep in mind that (3,0) is still a terminal state

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts