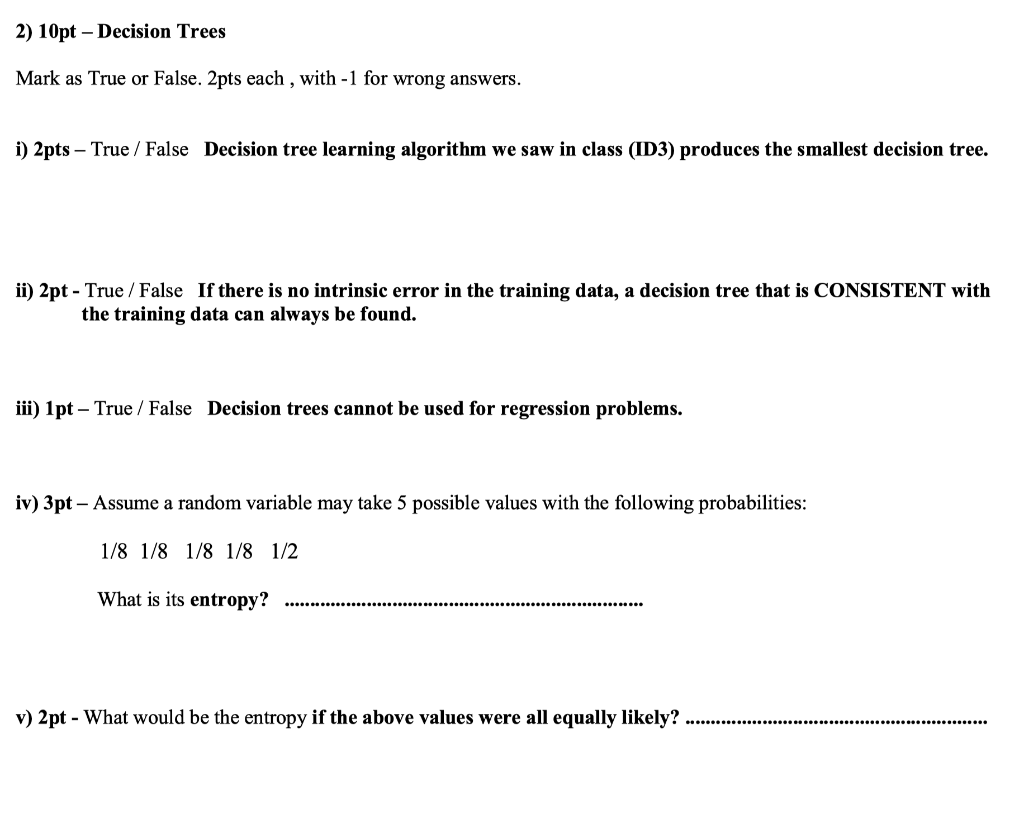

2) 10pt - Decision Trees. Mark as True or False. 2pts each, with -1 for wrong answers.

i) 2pts - True / False: The decision tree learning algorithm we saw in class (ID3) produces the smallest decision tree.

ii) 2pt - True / False: If there is no intrinsic error in the training data, a decision tree that is CONSISTENT with the training data can always be found.

iii) 1pt - True / False: Decision trees cannot be used for regression problems.

iv) 3pt - Assume a random variable may take 5 possible values with the following probabilities: What is its entropy?

v) 2pt - What would be the entropy if the above values were all equally likely?
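For the entropy parts, a minimal Python sketch of the Shannon entropy formula H = -sum(p_i * log2(p_i)) may help. The function name `entropy` and its `base` parameter are illustrative choices, not something given in the question. The probabilities for part iv) do not appear in the question text, so only the equally likely case from part v) is evaluated here.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy H = -sum(p * log(p)); zero-probability terms contribute 0."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Part v): five equally likely values, each with probability 1/5.
uniform = [1 / 5] * 5
h_uniform = entropy(uniform)
print(round(h_uniform, 4))  # log2(5), about 2.3219 bits
```

For a uniform distribution over n values the entropy reduces to log2(n), which is the largest value any distribution over n outcomes can reach. For part iv), the same function can be called with the five probabilities once they are known.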
