Question: ( 2 5 points ) We have argued in class that a deterministic policy may result in extremely suboptimal outcomes. This is especially true when

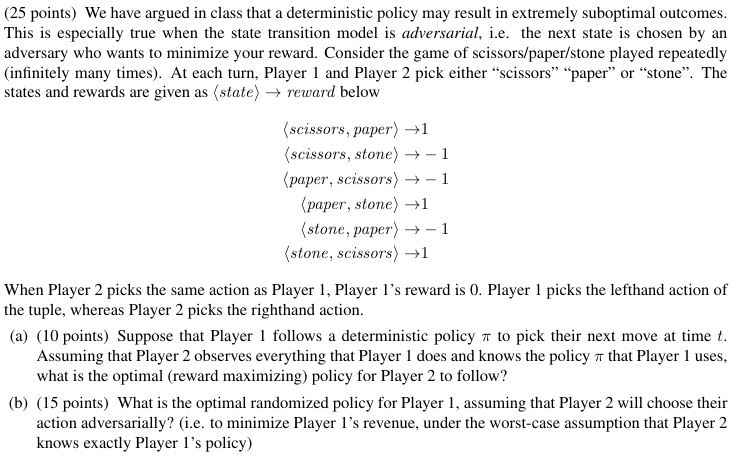

points We have argued in class that a deterministic policy may result in extremely suboptimal outcomes.

This is especially true when the state transition model is adversarial, ie the next state is chosen by an

adversary who wants to minimize your reward. Consider the game of scissorspaperstone played repeatedly

infinitely many times At each turn, Player and Player pick either "scissors" "paper" or "stone". The

states and rewards are given as state : reward below

: scissors, paper :

: scissors, stone :

: paper, scissors :

: paper, stone :

: stone, paper :

: stone, scissors :

When Player picks the same action as Player Player s reward is Player picks the lefthand action of

the tuple, whereas Player picks the righthand action.

a points Suppose that Player follows a deterministic policy to pick their next move at time

Assuming that Player observes everything that Player does and knows the policy that Player uses,

what is the optimal reward maximizing policy for Player to follow?

b points What is the optimal randomized policy for Player assuming that Player will choose their

action adversarially? ie to minimize Player ls revenue, under the worstcase assumption that Player

knows exactly Player s policy points Consider the following modification of the greedy algorithm for learning a regretminimizing

policy in the expert systems domain. Instead of picking an action out of the set of bestperforming actions

so far as is the case for the simple greedy algorithm we pick the best performing action in the previous

timesteps; if there is more than one best performing action, we pick the one with the lowest index. For

example, if we simply pick any one of the actions that offered the highest reward in the previous

round; if we pick the action that had the highest cumulative reward in the last three rounds, etc. More

formally, the greedy algorithm works as follows. At time let be the set of actions that had maximal

total reward at time steps dots,; we pick the action with the lowest index out of if we run

the original greedy algorithm What is the worstcase regret of the greedy algorithm? You must provide a

formal proof of its regret guarantees with respect to the best action.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock