Question: 2. (50 points) Bilinear interpolation. For an input 2D single-channel feature map U E RHXW of height H and width W and a point at

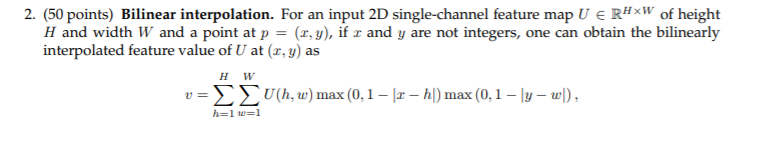

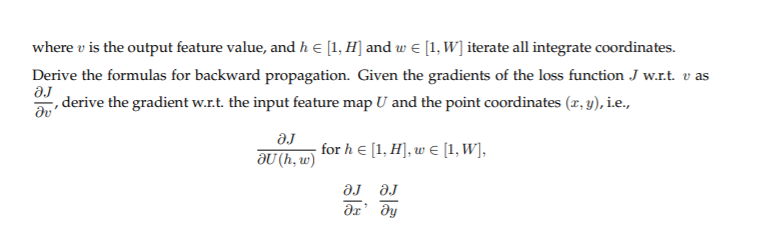

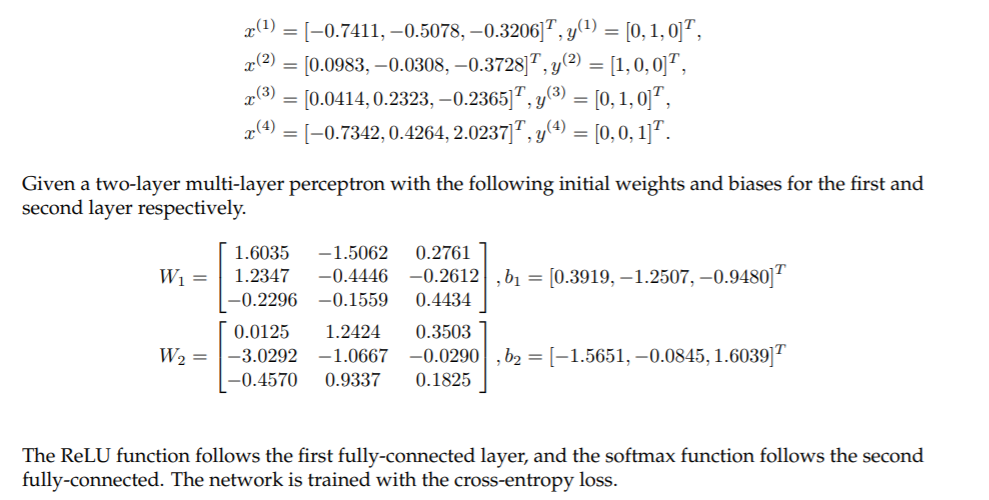

2. (50 points) Bilinear interpolation. For an input 2D single-channel feature map U E RHXW of height H and width W and a point at p = (x, y), if r and y are not integers, one can obtain the bilinearly interpolated feature value of U at (2,y) as Hw v=EU(h,w) max (0,1 12 h) max (0,1 - \y w), h=1 w=1 where v is the output feature value, and h [1, H] and we [1, W] iterate all integrate coordinates. Derive the formulas for backward propagation. Given the gradients of the loss function J w.r.t. v as aj , derive the gradient w.r.t. the input feature map U and the point coordinates (x, y), i.e., aj for h [1, H), w E (1,W], au (h, w) ajaJ ar ay (1) = (-0.7411, -0.5078, -0.3206], y(1) = [0, 1, 0]", 2(2) = [0.0983, -0.0308, -0.3728]?, y(2) = [1,0,0]", 2(3) = [0.0414, 0.2323, -0.2365)", y(3) = [0, 1, 0]", zo (4) = (-0.7342, 0.4264, 2.0237]", y(4) = [0,0,1)?. Given a two-layer multi-layer perceptron with the following initial weights and biases for the first and second layer respectively. W1 = 1.6035 -1.5062 0.2761 1.2347 -0.4446 -0.2612 , b1 = [0.3919, -1.2507, -0.9480] -0.2296 -0.1559 0.4434 0.0125 1.2424 0.3503 -3.0292 -1.0667 -0.0290,62 = [-1.5651,-0.0845, 1.6039] -0.4570 0.9337 0.1825 W2= The ReLU function follows the first fully-connected layer, and the softmax function follows the second fully-connected. The network is trained with the cross-entropy loss. 2. (50 points) Bilinear interpolation. For an input 2D single-channel feature map U E RHXW of height H and width W and a point at p = (x, y), if r and y are not integers, one can obtain the bilinearly interpolated feature value of U at (2,y) as Hw v=EU(h,w) max (0,1 12 h) max (0,1 - \y w), h=1 w=1 where v is the output feature value, and h [1, H] and we [1, W] iterate all integrate coordinates. Derive the formulas for backward propagation. Given the gradients of the loss function J w.r.t. v as aj , derive the gradient w.r.t. the input feature map U and the point coordinates (x, y), i.e., aj for h [1, H), w E (1,W], au (h, w) ajaJ ar ay (1) = (-0.7411, -0.5078, -0.3206], y(1) = [0, 1, 0]", 2(2) = [0.0983, -0.0308, -0.3728]?, y(2) = [1,0,0]", 2(3) = [0.0414, 0.2323, -0.2365)", y(3) = [0, 1, 0]", zo (4) = (-0.7342, 0.4264, 2.0237]", y(4) = [0,0,1)?. Given a two-layer multi-layer perceptron with the following initial weights and biases for the first and second layer respectively. W1 = 1.6035 -1.5062 0.2761 1.2347 -0.4446 -0.2612 , b1 = [0.3919, -1.2507, -0.9480] -0.2296 -0.1559 0.4434 0.0125 1.2424 0.3503 -3.0292 -1.0667 -0.0290,62 = [-1.5651,-0.0845, 1.6039] -0.4570 0.9337 0.1825 W2= The ReLU function follows the first fully-connected layer, and the softmax function follows the second fully-connected. The network is trained with the cross-entropy loss

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts