Question: 2. Follow regularized the leader The reason the algorithm above didn't do so well, is because when we deterministically jump from one strategy to another,

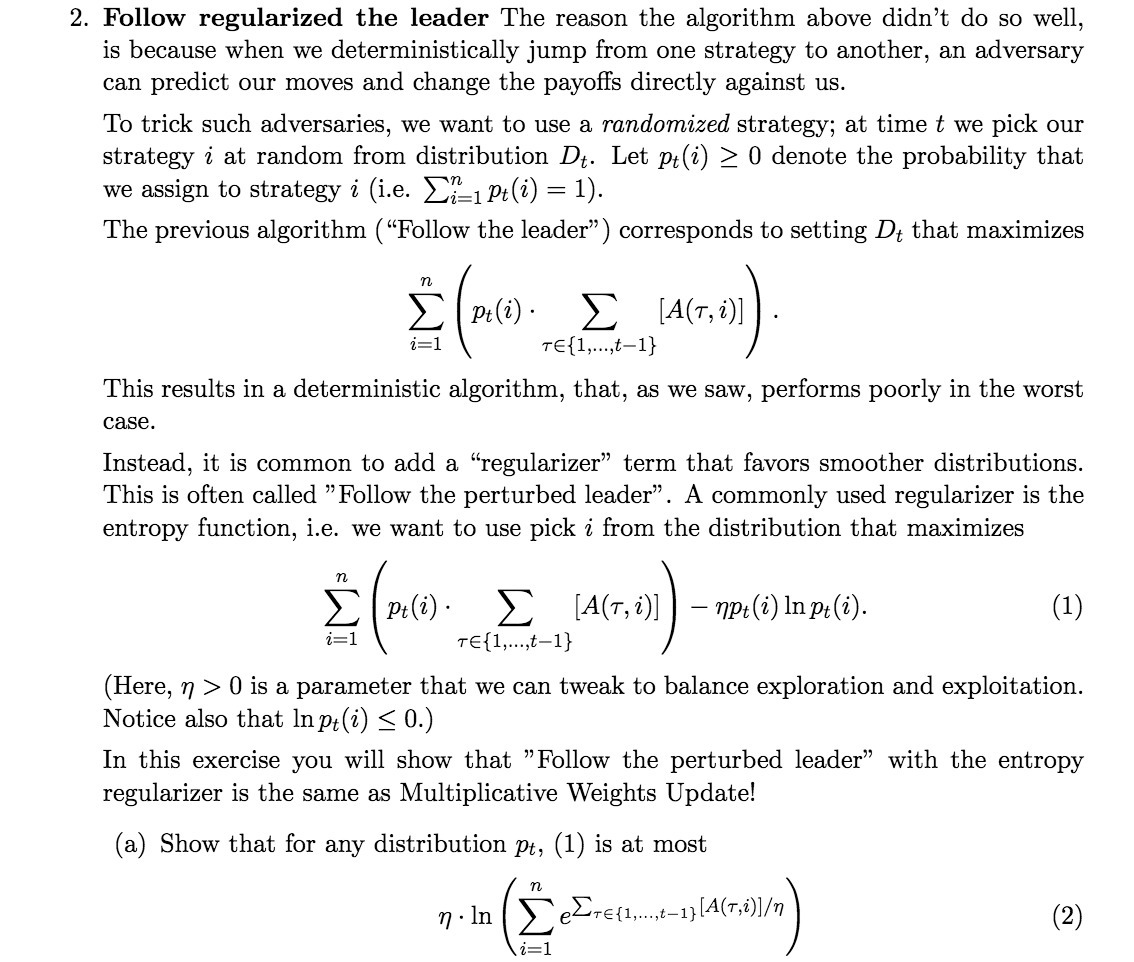

2. Follow regularized the leader The reason the algorithm above didn't do so well, is because when we deterministically jump from one strategy to another, an adversary can predict our moves and change the payoffs directly against us. To trick such adversaries, we want to use a randomized strategy; at time t we pick our strategy 1" at random from distribution Dt. Let p44.) 2 0 denote the probability that we assign to strategy 3' (i.e. 2:;1 \"(72) = 1). The previous algorithm (\"Follow the leader\") corresponds to setting I): that maximizes n 2 Ptl' Z [A('r,'i)] i=1 T{l,...,t1} This results in a deterministic algorithm, that, as we saw, performs poorly in the worst case. Instead, it is common to add a \"regularizer\" term that favors smoother distributions. This is often called \"Follow the perturbed leader\". A commonly used regularizer is the entropy function, i.e. we want to use pick 2' from the distribution that maximizes n 2 10:6)- : [140:0] apt('i)1npt(i)- (1) i=1 TE{1,...,tl} (Here, 1} > 0 is a parameter that we can tweak to balance exploration and exploitation. Notice also that lnp) S 0.) In this exercise you will show that \"Follow the perturbed leader\" with the entropy regularizer is the same as Multiplicative Weights Update! (a) Show that for any distribution 33:, (1) is at most '1']! - ln (2 ezre ..... t1}[A(T:i)]) (2) i=1

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts