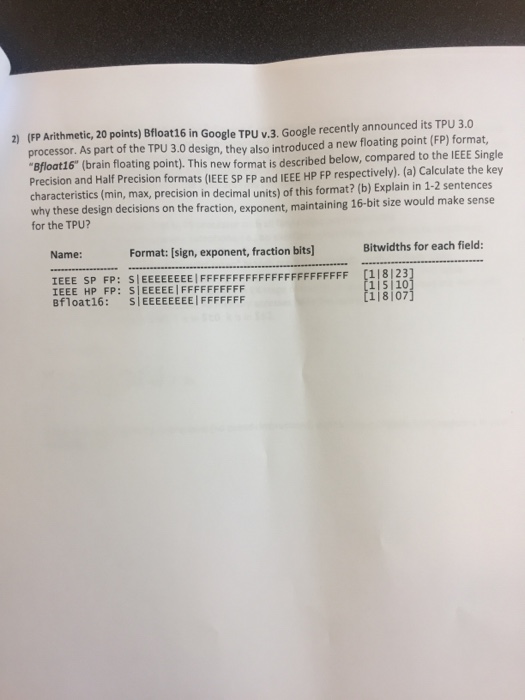

2) (FP Arithmetic, 20 points) Bfloat16 in Google TPU v.3. Google recently announced its TPU 3.0 processor. As part of the TPU 3.0 design, they also introduced a new floating point (FP) format, "Bfloat16" (brain floating point). This new format is described below, compared to the IEEE Single Precision and Half Precision formats (IEEE SP FP and IEEE HP FP respectively).

(a) Calculate the key characteristics (min, max, precision in decimal units) of this format?

(b) Explain in 1-2 sentences why these design decisions on the fraction, exponent, maintaining 16-bit size would make sense for the TPU?

| Name | Format: [sign, exponent, fraction bits] | Bitwidths for each field |
|---|---|---|
| IEEE SP FP | S\|EEEEEEEE\|FFFFFFFFFFFFFFFFFFFFFFF | [1\|8\|23] |
| IEEE HP FP | S\|EEEEE\|FFFFFFFFFF | [1\|5\|10] |
| Bfloat16 | S\|EEEEEEEE\|FFFFFFF | [1\|8\|7] |
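The quantities asked for in part (a) can be sketched numerically. The snippet below is a minimal sketch, not an official solution. It assumes Bfloat16 follows IEEE 754 conventions (a bias of 2^(e-1) - 1, an all-ones exponent reserved for infinity/NaN, an implicit leading 1, and subnormals), which the question does not state explicitly; real hardware may, for example, flush subnormals to zero. The function name `fp_characteristics` and its dictionary keys are illustrative choices.

```python
import math


def fp_characteristics(exp_bits, frac_bits):
    """Range and precision of a binary FP format, ASSUMING IEEE 754 conventions."""
    bias = 2 ** (exp_bits - 1) - 1
    # The all-ones exponent field is assumed reserved for infinity/NaN.
    max_exp = (2 ** exp_bits - 2) - bias
    # The all-zeros exponent field is assumed to encode zero/subnormals.
    min_exp = 1 - bias
    return {
        "bias": bias,
        # Largest finite value: significand 1.11...1 times 2^max_exp.
        "max_normal": (2 - 2.0 ** -frac_bits) * 2.0 ** max_exp,
        # Smallest positive normal value: significand 1.0 times 2^min_exp.
        "min_normal": 2.0 ** min_exp,
        # Smallest positive subnormal (only meaningful if subnormals are supported).
        "min_subnormal": 2.0 ** (min_exp - frac_bits),
        # Spacing between 1.0 and the next representable value.
        "epsilon": 2.0 ** -frac_bits,
        # Approximate decimal digits carried by the significand (with implicit 1).
        "decimal_digits": (frac_bits + 1) * math.log10(2),
    }


formats = {"IEEE SP FP": (8, 23), "IEEE HP FP": (5, 10), "Bfloat16": (8, 7)}
for name, (e, f) in formats.items():
    c = fp_characteristics(e, f)
    print(
        f"{name}: max={c['max_normal']:.4e}, min_normal={c['min_normal']:.4e}, "
        f"epsilon={c['epsilon']:.4e}, digits={c['decimal_digits']:.2f}"
    )
```

Under these assumptions, Bfloat16 shares the 8-bit exponent of single precision, so its range is essentially the same as SP (maximum just below 2^128, minimum normal 2^-126), while its 7 fraction bits give a spacing of 2^-7 near 1.0, roughly 2 to 3 decimal digits.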
