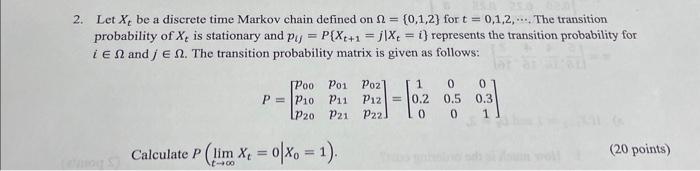

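2. Let $X_t$ be a discrete-time Markov chain defined on the state space $\{0, 1, 2\}$ for $t = 0, 1, 2, \dots$. The transition probability of $X_t$ is stationary, and $p_{ij} = P\{X_{t+1} = j \mid X_t = i\}$ represents the transition probability from state $i$ to state $j$. The transition probability matrix is given as follows:

$$
P = \begin{pmatrix} p_{00} & p_{01} & p_{02} \\ p_{10} & p_{11} & p_{12} \\ p_{20} & p_{21} & p_{22} \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ 0.2 & 0.5 & 0.3 \\ 0 & 0 & 1 \end{pmatrix}
$$

Calculate $P\left(\lim_{t \to \infty} X_t = 0 \mid X_0 = 1\right)$.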

Step by Step Solution
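
One way to approach this is first-step analysis. It assumes the matrix is read with rows as the current state, so that states 0 and 2 are absorbing and state 1 is the only transient state. Writing $h = P(\lim_{t \to \infty} X_t = 0 \mid X_0 = 1)$ and conditioning on the first transition gives $h = p_{10} + p_{11} h$, so $h = 0.2 / (1 - 0.5) = 0.4$. The Python sketch below checks this value with exact fractions and compares it against the $n$-step probability of being in state 0. The function names are illustrative and not part of the original problem.

```python
from fractions import Fraction

# Transition matrix under the assumed reading (row = current state, column = next state).
P = [
    [Fraction(1), Fraction(0), Fraction(0)],            # state 0 is absorbing
    [Fraction(1, 5), Fraction(1, 2), Fraction(3, 10)],  # state 1 is transient
    [Fraction(0), Fraction(0), Fraction(1)],            # state 2 is absorbing
]


def absorption_prob_from_1(P):
    """First-step analysis for the single transient state 1: h = p10 + p11 * h."""
    return P[1][0] / (1 - P[1][1])


def n_step_prob(P, start, target, n):
    """P(X_n = target | X_0 = start), computed by n vector-matrix products."""
    size = len(P)
    dist = [Fraction(int(s == start)) for s in range(size)]
    for _ in range(n):
        dist = [sum(dist[i] * P[i][j] for i in range(size)) for j in range(size)]
    return dist[target]


h = absorption_prob_from_1(P)
print(h)  # 2/5

# The n-step probability of sitting in state 0 should approach h as n grows.
print(float(n_step_prob(P, 1, 0, 50)))
```

Because state 0 is absorbing, the event that the chain ends up in state 0 is the same as the event that it ever reaches state 0, which is why the $n$-step probability converges to $h$.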
