Question:

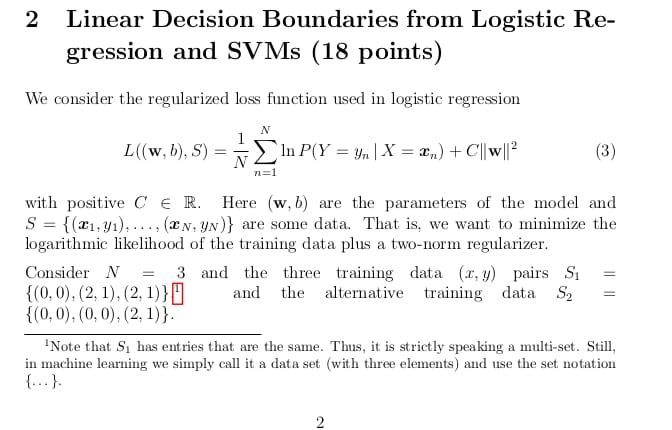

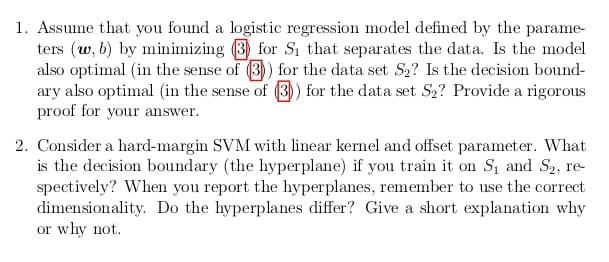

2 Linear Decision Boundaries from Logistic Regression and SVMs (18 points)

We consider the regularized loss function used in logistic regression

$$
L\big((w, b), S\big) = -\frac{1}{N} \sum_{n=1}^{N} \ln P(Y = y_n \mid X = x_n) + C\,\|w\|^2 \tag{3}
$$

with positive $C \in \mathbb{R}$. Here $(w, b)$ are the parameters of the model and $S = \{(x_1, y_1), \ldots, (x_N, y_N)\}$ are some data. That is, we want to minimize the negative logarithmic likelihood of the training data plus a two-norm regularizer.

Consider $N = 3$ and the three training $(x, y)$ pairs $S_1 = \{(0,0), (2,1), (2,1)\}$ and the alternative training data $S_2 = \{(0,0), (0,0), (2,1)\}$. Note that $S_1$ and $S_2$ each contain repeated entries, so strictly speaking they are multisets. Still, in machine learning we simply call such a collection a data set (here with three elements) and use the set notation $\{\ldots\}$.

1. Assume that you found a logistic regression model, defined by the parameters $(w, b)$, by minimizing (3) for $S_1$, and that this model separates the data. Is the model also optimal (in the sense of (3)) for the data set $S_2$? Is the decision boundary also optimal (in the sense of (3)) for the data set $S_2$? Provide a rigorous proof for your answer.
2. Consider a hard-margin SVM with a linear kernel and an offset parameter. What is the decision boundary (the hyperplane) if you train it on $S_1$ and $S_2$, respectively? When you report the hyperplanes, remember to use the correct dimensionality. Do the hyperplanes differ? Give a short explanation of why or why not.
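Part 1 can be explored numerically before attempting the proof. The sketch below is a minimal illustration under assumptions the problem does not state: the standard logistic model $P(Y = 1 \mid X = x) = \sigma(wx + b)$ with labels in $\{0, 1\}$, the arbitrary choice $C = 0.1$, and plain fixed-step gradient descent as the optimizer. All function names are hypothetical. It fits (3) on $S_1$ and on $S_2$ and compares the results. The assertions also check one symmetry that may help with a proof: the reflection $x \mapsto 2 - x$, $y \mapsto 1 - y$ maps $S_1$ onto $S_2$ and maps parameters $(w, b)$ to $(w, -b - 2w)$ without changing the value of (3). This is an illustration for one value of $C$, not the rigorous proof the question asks for.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z: float) -> float:
    """Stable ln(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def regularized_loss(w, b, data, C):
    """Loss (3) for 1-D inputs, labels in {0, 1}, and P(Y=1|x) = sigmoid(w*x + b)."""
    total = 0.0
    for x, y in data:
        z = w * x + b
        # -ln sigmoid(z) = softplus(-z) and -ln(1 - sigmoid(z)) = softplus(z)
        total += softplus(-z) if y == 1 else softplus(z)
    return total / len(data) + C * w * w


def gradient(w, b, data, C):
    """Gradient of regularized_loss with respect to (w, b)."""
    gw = gb = 0.0
    for x, y in data:
        r = sigmoid(w * x + b) - y  # derivative of the per-sample loss w.r.t. z
        gw += r * x
        gb += r
    n = len(data)
    return gw / n + 2.0 * C * w, gb / n


def fit(data, C, lr=0.5, steps=10_000):
    """Plain fixed-step gradient descent from (0, 0); (3) is convex in (w, b)."""
    w = b = 0.0
    for _ in range(steps):
        gw, gb = gradient(w, b, data, C)
        w, b = w - lr * gw, b - lr * gb
    return w, b


S1 = [(0.0, 0), (2.0, 1), (2.0, 1)]
S2 = [(0.0, 0), (0.0, 0), (2.0, 1)]
C = 0.1  # assumed value; the problem only requires C > 0

w1, b1 = fit(S1, C)
w2, b2 = fit(S2, C)
print(f"S1 optimum: w={w1:.4f}, b={b1:.4f}, boundary x={-b1 / w1:.4f}")
print(f"S2 optimum: w={w2:.4f}, b={b2:.4f}, boundary x={-b2 / w2:.4f}")

# The S1 optimum should score worse on S2 than the S2 optimum does.
print("S1 params worse on S2:",
      regularized_loss(w1, b1, S2, C) > regularized_loss(w2, b2, S2, C))
```

For this $C$, the boundary $-b/w$ fitted on $S_1$ lies between 0 and 1, and the one fitted on $S_2$ is its mirror image about $x = 1$, so in this example neither the parameters nor the decision boundary carry over from $S_1$ to $S_2$.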

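For part 2, a sketch under stated assumptions: labels are recoded from $\{0, 1\}$ to $\{-1, +1\}$, the usual SVM convention (the problem itself uses 0/1), and the input is one-dimensional, so the hyperplane $\{x \in \mathbb{R} : wx + b = 0\}$ is a single point, a 0-dimensional affine subspace of $\mathbb{R}$. For separable 1-D data with the positive class to the right, the hard-margin primal $\min \tfrac{1}{2} w^2$ subject to $y_n(w x_n + b) \ge 1$ has the closed form below: the constraints at the innermost negative and positive points force $w \ge 2/(\text{hi} - \text{lo})$, and both are tight at the optimum. The names `hard_margin_svm_1d` and `to_pm1` are hypothetical, and this is a special-case closed form, not a general QP solver.

```python
def hard_margin_svm_1d(data):
    """Closed-form hard-margin linear SVM (with offset) for 1-D inputs.

    Labels must be in {-1, +1}. Assumes every negative lies strictly left of
    every positive, which holds for S1 and S2. Solves
        min 0.5 * w**2  s.t.  y * (w * x + b) >= 1 for all (x, y).
    """
    lo = max(x for x, y in data if y == -1)  # innermost negative point
    hi = min(x for x, y in data if y == +1)  # innermost positive point
    if lo >= hi:
        raise ValueError("expected negatives strictly left of positives")
    # Feasibility needs w*hi + b >= 1 and w*lo + b <= -1, hence w >= 2 / (hi - lo);
    # the minimum is attained with both constraints tight.
    w = 2.0 / (hi - lo)
    b = -w * (lo + hi) / 2.0  # boundary w*x + b = 0 sits at the midpoint
    return w, b


def to_pm1(data):
    """Recode labels 0 -> -1 and 1 -> +1 (assumed SVM label convention)."""
    return [(x, 1 if y == 1 else -1) for x, y in data]


S1 = [(0.0, 0), (2.0, 1), (2.0, 1)]
S2 = [(0.0, 0), (0.0, 0), (2.0, 1)]

results = {}
for name, data in (("S1", S1), ("S2", S2)):
    w, b = hard_margin_svm_1d(to_pm1(data))
    results[name] = (w, b)
    # Both iterations print w=1.0, b=-1.0, boundary at x=1.0
    print(f"{name}: w={w}, b={b}, boundary at x={-b / w}")
```

Both data sets yield $w = 1$, $b = -1$, so the hyperplane is $\{x \in \mathbb{R} : x - 1 = 0\} = \{1\}$, a single point in $\mathbb{R}^1$. A repeated point only repeats its constraint, so the feasible set, and hence the solution, is identical for $S_1$ and $S_2$; unlike the averaged loss (3), the hard-margin objective does not weight points by multiplicity.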