Question:

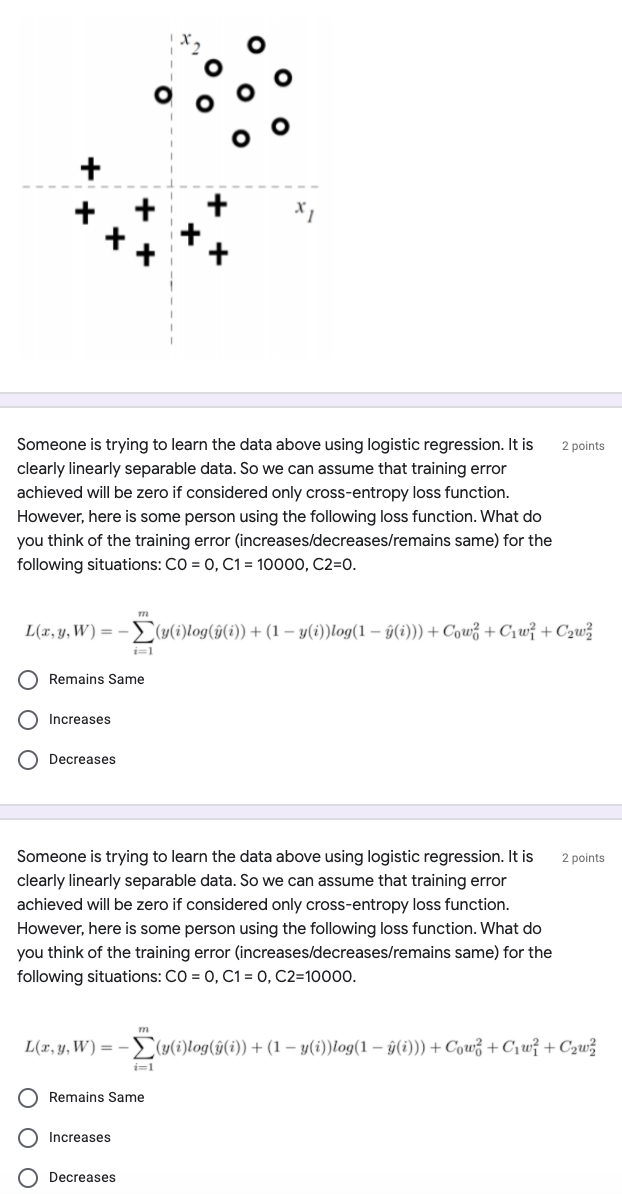

(2 points) Someone is trying to learn the data above using logistic regression. The data is clearly linearly separable, so we can assume that the training error will be zero if only the cross-entropy loss function is used. However, this person is using the following loss function instead, where $\hat{y}^{(i)}$ is the predicted probability that $y^{(i)} = 1$:

$$L(x, y, W) = -\sum_{i=1}^{m}\left[y^{(i)}\log\hat{y}^{(i)} + \left(1 - y^{(i)}\right)\log\left(1 - \hat{y}^{(i)}\right)\right] + C_0 w_0^2 + C_1 w_1^2 + C_2 w_2^2$$

What happens to the training error (increases / decreases / remains the same) in the following situation: $C_0 = 0$, $C_1 = 10000$, $C_2 = 0$?

- Remains the same
- Increases
- Decreases

(2 points) Same data and same loss function as above. What happens to the training error (increases / decreases / remains the same) in the following situation: $C_0 = 0$, $C_1 = 0$, $C_2 = 10000$?

- Remains the same
- Increases
- Decreases
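The loss used in both parts can be sketched in plain Python as below, as a way to experiment with the effect of each $C_j$. This is a minimal sketch under stated assumptions, not the intended solution: it assumes $\hat{y}^{(i)} = \sigma(w_0 + w_1 x_1 + w_2 x_2)$ with $w_0$ as the bias, and squared per-weight penalties (the exponents are not fully legible in the original). The helper names `sigmoid`, `predict_proba`, `penalized_loss`, `fit` and `training_error` are hypothetical, `fit` uses plain gradient descent (my choice of optimizer), and the four points are made-up toy data, not the points in the figure, so the printed numbers say nothing about the answers for the figure.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, x):
    """y_hat = sigma(w0 + w1*x1 + w2*x2); assumption: w0 is the bias."""
    return sigmoid(w[0] + w[1] * x[0] + w[2] * x[1])


def penalized_loss(w, X, Y, C, eps=1e-12):
    """Summed cross-entropy plus C0*w0^2 + C1*w1^2 + C2*w2^2 (assumed squared)."""
    ce = 0.0
    for x, y in zip(X, Y):
        p = min(max(predict_proba(w, x), eps), 1.0 - eps)  # avoid log(0)
        ce -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return ce + sum(c * wj * wj for c, wj in zip(C, w))


def fit(X, Y, C, iters=2000):
    """Plain gradient descent on penalized_loss, starting from w = 0."""
    # Step size 1/L, where L upper-bounds the gradient's Lipschitz constant:
    # 0.25 * sum ||(1, x1, x2)||^2 for the cross-entropy, 2 * max(C) for the penalty.
    L = 0.25 * sum(1.0 + x[0] ** 2 + x[1] ** 2 for x in X) + 2.0 * max(C)
    lr = 1.0 / L
    w = [0.0, 0.0, 0.0]
    for _ in range(iters):
        grad = [2.0 * c * wj for c, wj in zip(C, w)]  # gradient of the penalty
        for x, y in zip(X, Y):
            r = predict_proba(w, x) - y  # d(cross-entropy)/d(logit)
            feats = (1.0, x[0], x[1])
            for j in range(3):
                grad[j] += r * feats[j]
        w = [wj - lr * g for wj, g in zip(w, grad)]
    return w


def training_error(w, X, Y):
    """Fraction of points misclassified when predicting 1 iff y_hat >= 0.5."""
    wrong = sum((predict_proba(w, x) >= 0.5) != (y == 1) for x, y in zip(X, Y))
    return wrong / len(Y)


# Hypothetical toy data, NOT the points shown in the figure.
X = [(-2.0, 0.5), (-1.0, -0.5), (1.0, 0.5), (2.0, -0.5)]
Y = [0, 0, 1, 1]

for C in ([0, 0, 0], [0, 10000, 0], [0, 0, 10000]):
    w = fit(X, Y, C)
    print(C, [round(v, 4) for v in w], training_error(w, X, Y))
```

The step size is tied to the largest $C_j$ so that gradient descent stays stable even with $C_j = 10000$. Note that the training error computed here is a 0/1 misclassification rate, which depends only on the sign of $w_0 + w_1 x_1 + w_2 x_2$, not on how large the weights are.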
