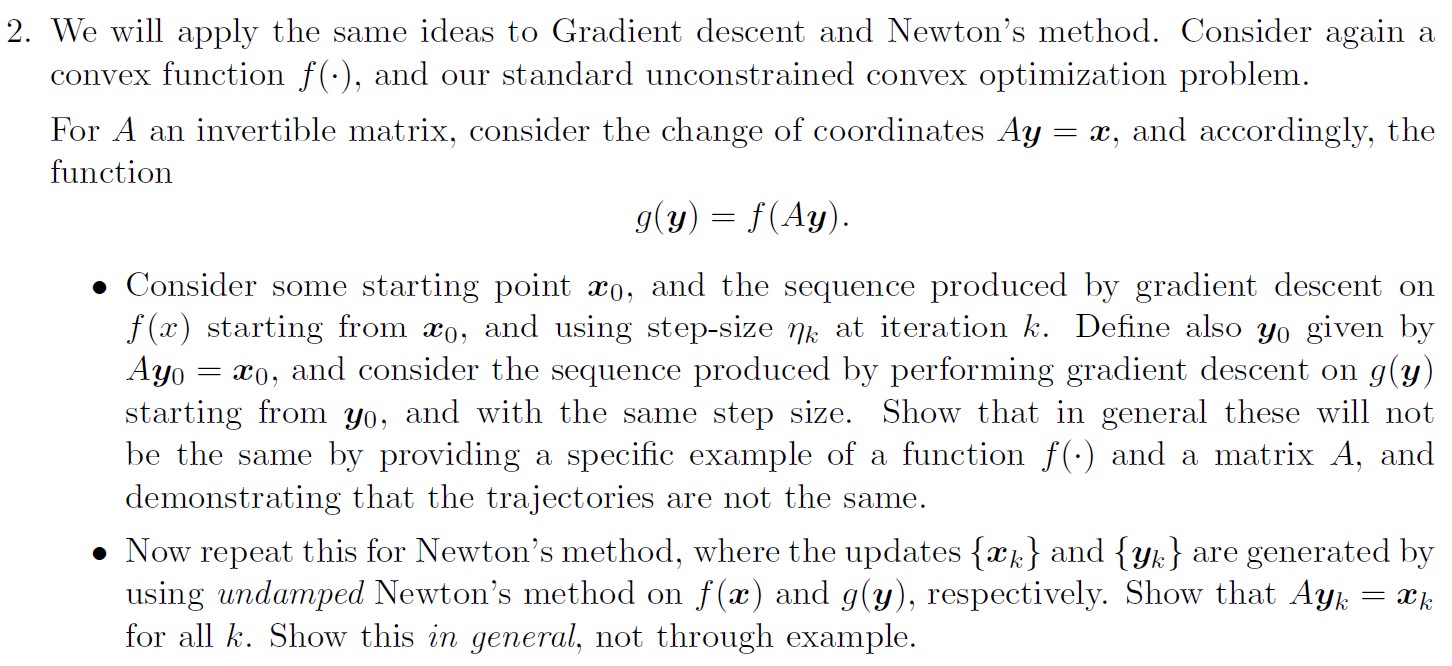

2. We will apply the same ideas to gradient descent and Newton's method. Consider again a convex function $f(\cdot)$, and our standard unconstrained convex optimization problem. For $A$ an invertible matrix, consider the change of coordinates $Ay = x$, and accordingly, the function $g(y) = f(Ay)$.

- Consider some starting point $x_0$, and the sequence produced by gradient descent on $f(x)$ starting from $x_0$, using step size $\eta_k$ at iteration $k$. Define also $y_0$ given by $Ay_0 = x_0$, and consider the sequence produced by performing gradient descent on $g(y)$ starting from $y_0$, with the same step size. Show that in general these will not be the same by providing a specific example of a function $f(\cdot)$ and a matrix $A$, and demonstrating that the trajectories are not the same.
- Now repeat this for Newton's method, where the updates $\{x_k\}$ and $\{y_k\}$ are generated by using undamped Newton's method on $f(x)$ and $g(y)$, respectively. Show that $Ay_k = x_k$ for all $k$. Show this in general, not through example.
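A quick numerical sanity check can help before writing the proof. By the chain rule, $\nabla g(y) = A^\top \nabla f(Ay)$ and $\nabla^2 g(y) = A^\top \nabla^2 f(Ay) A$, so a gradient step on $g$ maps to $Ay_{k+1} = Ay_k - \eta_k A A^\top \nabla f(Ay_k)$, which generally differs from the step on $f$, whereas the factors of $A$ cancel in the Newton step. The sketch below illustrates this in the simplest possible setting. Everything specific in it is an assumption made for illustration and is not part of the problem statement: the scalar function $f(x) = e^x + e^{-x}$, the $1 \times 1$ "matrix" $A = 2$, the constant step size $0.1$, the starting point $x_0 = 1$, and all function names. It is not a substitute for the general argument the second part asks for.

```python
import math

# Hypothetical choices for illustration only (not from the problem statement).
A = 2.0      # a 1x1 invertible "matrix"
ETA = 0.1    # constant step size, used for both f and g
STEPS = 5    # number of iterations to compare

# f(x) = exp(x) + exp(-x) is convex, since f''(x) > 0 everywhere.
def f_grad(x):
    return math.exp(x) - math.exp(-x)

def f_hess(x):
    return math.exp(x) + math.exp(-x)

# g(y) = f(A*y); derivatives follow from the chain rule.
def g_grad(y):
    return A * f_grad(A * y)

def g_hess(y):
    return A * A * f_hess(A * y)

def gradient_descent(grad, z0, eta, steps):
    """Return the iterates z_0, ..., z_steps of fixed-step gradient descent."""
    traj = [z0]
    for _ in range(steps):
        traj.append(traj[-1] - eta * grad(traj[-1]))
    return traj

def newton(grad, hess, z0, steps):
    """Return the iterates z_0, ..., z_steps of undamped Newton's method."""
    traj = [z0]
    for _ in range(steps):
        z = traj[-1]
        traj.append(z - grad(z) / hess(z))
    return traj

x0 = 1.0
y0 = x0 / A  # chosen so that A * y0 == x0

x_gd = gradient_descent(f_grad, x0, ETA, STEPS)
y_gd = gradient_descent(g_grad, y0, ETA, STEPS)
x_nt = newton(f_grad, f_hess, x0, STEPS)
y_nt = newton(g_grad, g_hess, y0, STEPS)

# Largest mismatch between A*y_k and x_k along each pair of trajectories.
gd_gap = max(abs(A * y - x) for x, y in zip(x_gd, y_gd))
nt_gap = max(abs(A * y - x) for x, y in zip(x_nt, y_nt))

print("gradient descent: max |A*y_k - x_k| =", gd_gap)
print("Newton's method:  max |A*y_k - x_k| =", nt_gap)
```

With these choices the gradient descent trajectories already separate after the first step, while the Newton iterates agree up to floating-point rounding. A matrix-valued example would follow the same pattern, with the scalar division replaced by a linear solve.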
