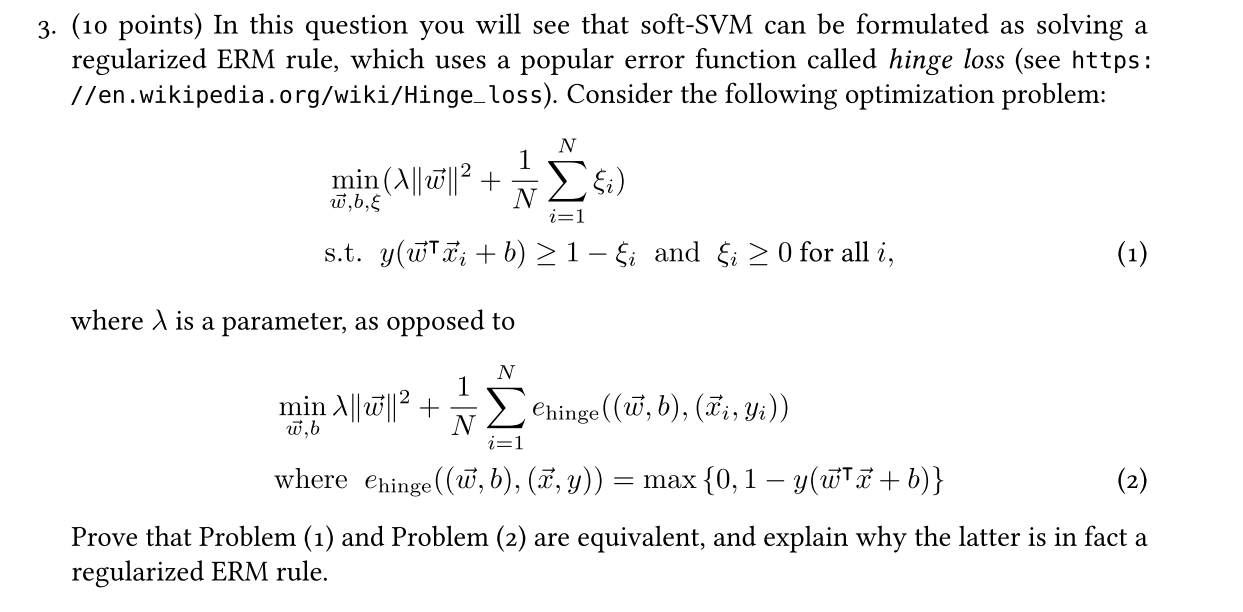

3. (10 points) In this question you will see that soft-SVM can be formulated as solving a regularized ERM rule, which uses a popular error function called hinge loss (see https://en.wikipedia.org/wiki/Hinge_loss). Consider the following optimization problem:

$$\min_{w,b,\xi} \left( \lambda \|w\|^2 + \sum_{i=1}^{N} \xi_i \right) \quad \text{s.t. } y_i(w^T x_i + b) \ge 1 - \xi_i \text{ and } \xi_i \ge 0 \text{ for all } i, \tag{1}$$

where $\lambda$ is a parameter, as opposed to

$$\min_{w,b} \; \lambda \|w\|^2 + \sum_{i=1}^{N} \ell_{\text{hinge}}((w,b),(x_i,y_i)), \quad \text{where } \ell_{\text{hinge}}((w,b),(x,y)) = \max\{0,\, 1 - y(w^T x + b)\}. \tag{2}$$

Prove that Problem (1) and Problem (2) are equivalent, and explain why the latter is in fact a regularized ERM rule.
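
As a numerical sanity check (not a proof), the sketch below illustrates the observation the equivalence usually rests on: for any fixed (w, b), the smallest feasible slack in Problem (1) is the hinge loss of each example, so the two objectives coincide at that point. It assumes both objectives are λ‖w‖² plus an unnormalized sum over the N examples. The function names `hinge_loss`, `objective_1`, and `objective_2`, the synthetic data, and the value of `lam` are all illustrative assumptions, not part of the original problem.

```python
import numpy as np

def hinge_loss(w, b, X, y):
    """Per-example hinge loss max{0, 1 - y_i (w^T x_i + b)}."""
    margins = y * (X @ w + b)
    return np.maximum(0.0, 1.0 - margins)

def objective_2(w, b, X, y, lam):
    """Objective of Problem (2): lam * ||w||^2 + sum of hinge losses."""
    return lam * np.dot(w, w) + hinge_loss(w, b, X, y).sum()

def objective_1(w, b, xi, X, y, lam, tol=1e-12):
    """Objective of Problem (1); returns inf when (w, b, xi) is infeasible."""
    margins = y * (X @ w + b)
    feasible = np.all(margins >= 1.0 - xi - tol) and np.all(xi >= -tol)
    return lam * np.dot(w, w) + xi.sum() if feasible else np.inf

# Synthetic data and an arbitrary (w, b); all values here are assumptions.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = rng.choice([-1.0, 1.0], size=20)
w = rng.normal(size=3)
b = 0.5
lam = 0.1

# Smallest feasible slack for this (w, b) is exactly the hinge loss.
xi_star = hinge_loss(w, b, X, y)
val1 = objective_1(w, b, xi_star, X, y, lam)
val2 = objective_2(w, b, X, y, lam)
print(np.isclose(val1, val2))

# Any strictly larger slack stays feasible but increases the objective.
val1_larger = objective_1(w, b, xi_star + 0.1, X, y, lam)
print(val1_larger > val1)
```

The check only covers one (w, b); the written proof still has to argue that minimizing over ξ first, for every (w, b), turns Problem (1) into Problem (2).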
