Question:

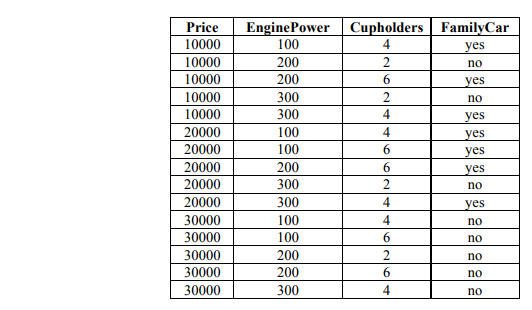

3. Decision Tree. Compute the following for learning a decision tree from the family car examples. Show your work.

a. Compute the entropy of the training set.

b. Compute the information gain for using Price 10000 and Price 20000 as the test at the root of the decision tree.

c. Compute the information gain for using EnginePower 100 and EnginePower 200 as the test at the root of the decision tree.

d. Compute the information gain for using Cupholders 2 and Cupholders 4 as the test at the root of the decision tree.

e. Show the final decision tree learned by the DECISION-TREE-LEARNING algorithm in Figure 18.5 of the textbook using the six tests described in parts (b), (c) and (d).

f. How would the learned Decision Tree classify the new instance ?
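Parts (a) through (d) rest on two quantities: the entropy of a set of labels, and the information gain of a Boolean test (parent entropy minus the size-weighted entropy of the two branches). The following is a minimal sketch of both, not a worked solution. The rows and labels are hypothetical and are not the family car training set, and the `<=` comparison is an assumption, since the comparison operator in the tests is not legible in the question as given.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    # sum of p * log2(1/p) over the classes present, with p = c / n
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def information_gain(rows, labels, test):
    """Entropy of the parent minus the weighted entropy of the two branches."""
    n = len(labels)
    if n == 0:
        return 0.0
    passed = [y for x, y in zip(rows, labels) if test(x)]
    failed = [y for x, y in zip(rows, labels) if not test(x)]
    remainder = sum(len(part) / n * entropy(part) for part in (passed, failed) if part)
    return entropy(labels) - remainder


# Hypothetical data for illustration only (NOT the table from the question).
rows = [{"Price": 10000}, {"Price": 10000}, {"Price": 30000}, {"Price": 30000}]
labels = ["yes", "yes", "no", "no"]

# Assumed form of the test: Price <= 10000.
gain = information_gain(rows, labels, lambda r: r["Price"] <= 10000)
print(entropy(labels))  # 1.0
print(gain)             # 1.0
```

In this toy case the test separates the two classes perfectly, so the gain equals the full parent entropy of 1 bit. A test that sends every example down the same branch would have a gain of 0.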

Price EnginePower Cupholders FamilyCar 10000 100 200 200 300 300 100 100 200 300 300 100 100 200 200 300 no 10000 10000 10000 20000 no yes yes yes yes no yes no no 20000 30000 no 30000 no
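For parts (e) and (f), the recursive procedure can be sketched as follows: pick the test with the highest information gain, split on it, and recurse on each branch until the examples are pure or no tests remain. This is a sketch in the spirit of the textbook algorithm, not a transcription of Figure 18.5. The names `learn_tree`, `classify`, and `plurality` are hypothetical, the examples are made up rather than taken from the table above, the `<=` comparisons are assumed, and ties are broken by order of appearance rather than randomly.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    n = len(labels)
    return sum(c / n * math.log2(n / c) for c in Counter(labels).values()) if n else 0.0


def gain(examples, test):
    """Information gain of a Boolean test over (row, label) pairs."""
    labels = [y for _, y in examples]
    parts = ([y for x, y in examples if test(x)],
             [y for x, y in examples if not test(x)])
    return entropy(labels) - sum(len(p) / len(labels) * entropy(p) for p in parts if p)


def plurality(examples):
    """Most common label; ties go to the label seen first (an assumption)."""
    common = Counter(y for _, y in examples).most_common(1)
    return common[0][0] if common else None


def learn_tree(examples, tests, parent_examples=()):
    """Return a label, or a tuple (test_name, subtree_if_true, subtree_if_false)."""
    if not examples:
        return plurality(parent_examples)
    labels = {y for _, y in examples}
    if len(labels) == 1:
        return labels.pop()
    if not tests:
        return plurality(examples)
    best = max(tests, key=lambda name: gain(examples, tests[name]))
    rest = {k: v for k, v in tests.items() if k != best}
    yes = [(x, y) for x, y in examples if tests[best](x)]
    no = [(x, y) for x, y in examples if not tests[best](x)]
    return (best, learn_tree(yes, rest, examples), learn_tree(no, rest, examples))


def classify(tree, row, tests):
    """Walk the tree until a leaf label is reached."""
    while isinstance(tree, tuple):
        name, yes, no = tree
        tree = yes if tests[name](row) else no
    return tree


# Hypothetical tests and examples for illustration only.
tests = {
    "Price<=10000": lambda r: r["Price"] <= 10000,
    "EnginePower<=100": lambda r: r["EnginePower"] <= 100,
}
examples = [
    ({"Price": 10000, "EnginePower": 100}, "no"),
    ({"Price": 10000, "EnginePower": 200}, "yes"),
    ({"Price": 30000, "EnginePower": 100}, "no"),
    ({"Price": 30000, "EnginePower": 200}, "yes"),
]

tree = learn_tree(examples, tests)
print(tree)  # ('EnginePower<=100', 'no', 'yes')
print(classify(tree, {"Price": 20000, "EnginePower": 300}, tests))  # yes
```

In this toy data the price test has zero gain and the engine power test has a gain of 1 bit, so the learned tree is a single split. Classifying a new instance is then just a walk from the root to a leaf.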
