Question: 3. Linear Least-Median Squared algorithm (Algorithm 6.3, page 218 in Jain et al.'s Machine Vision book). In this problem, use a maximum of 6 trials.

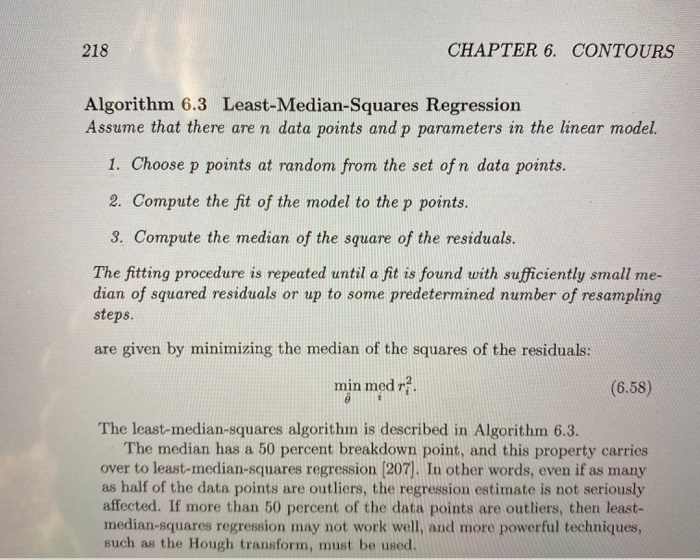

Explain how the algorithm below works on the numerical problem above, i.e. fitting a straight line to the edge pixel coordinates $P_i(X_i, Y_i)$ for $i = 1$ to $7$: (2, 1), (3, 5), (6, 9), (12, 7), (15, 20), (18, 16), (22, 30).

Algorithm 6.3 Least-Median-Squares Regression (page 218, Chapter 6, Contours)

Assume that there are n data points and p parameters in the linear model.

1. Choose p points at random from the set of n data points.
2. Compute the fit of the model to the p points.
3. Compute the median of the square of the residuals.

The fitting procedure is repeated until a fit is found with sufficiently small median of squared residuals or up to some predetermined number of resampling steps.

In least-median-squares regression, the estimates of the model parameters are given by minimizing the median of the squares of the residuals:

$$\min_{\theta} \; \operatorname{med}_{i} \; r_i^2 \qquad (6.58)$$

The least-median-squares algorithm is described in Algorithm 6.3. The median has a 50 percent breakdown point, and this property carries over to least-median-squares regression (207). In other words, even if as many as half of the data points are outliers, the regression estimate is not seriously affected. If more than 50 percent of the data points are outliers, then least-median-squares regression may not work well, and more powerful techniques, such as the Hough transform, must be used.

Express the equation of the line through two chosen points in the form $ax + by + c = 0$ using the following steps. If a line passes through two points $(x_1, y_1)$ and $(x_2, y_2)$, then the equation of the line is

$$\frac{x - x_1}{x_2 - x_1} = \frac{y - y_1}{y_2 - y_1}.$$

Simplifying, we get $a'x + b'y + c' = 0$, where $a' = (y_2 - y_1)$, $b' = (x_1 - x_2)$, and $c' = y_1(x_2 - x_1) - x_1(y_2 - y_1)$. Then divide $a'$, $b'$, and $c'$ by $\sqrt{a'^2 + b'^2}$ to get $a$, $b$, and $c$ respectively. (These new $a$, $b$, $c$ are $a = \cos\theta$, $b = \sin\theta$, and $c = -\rho$.) The signed perpendicular distance of any point $(x_3, y_3)$ from the line is $d = a x_3 + b y_3 + c$, and this $d$ is the residual whose square enters the median.
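The procedure described above can be sketched in Python as follows. This is a minimal illustration, not the textbook's implementation: the function names (`line_through`, `median_sq_residual`, `lmeds`), the `seed` parameter, and the use of Python's `random` module to pick the point pairs are all assumptions made here. Because the pairs are chosen at random, a different seed can give a different best line within the 6 trials.

```python
import math
import random
from statistics import median

# The seven edge pixels from the problem statement.
POINTS = [(2, 1), (3, 5), (6, 9), (12, 7), (15, 20), (18, 16), (22, 30)]


def line_through(p, q):
    """Return normalized (a, b, c) of the line ax + by + c = 0 through p and q."""
    (x1, y1), (x2, y2) = p, q
    a = y2 - y1                          # a' = y2 - y1
    b = x1 - x2                          # b' = x1 - x2
    c = y1 * (x2 - x1) - x1 * (y2 - y1)  # c' as given in the problem
    norm = math.hypot(a, b)              # sqrt(a'^2 + b'^2)
    return a / norm, b / norm, c / norm


def median_sq_residual(line, points):
    """Median over all points of d^2, where d = a*x + b*y + c."""
    a, b, c = line
    return median([(a * x + b * y + c) ** 2 for x, y in points])


def lmeds(points, max_trials=6, seed=0):
    """Keep the two-point fit with the smallest median squared residual.

    `seed` is a hypothetical parameter used only to make the sketch repeatable.
    """
    rng = random.Random(seed)
    best_line, best_med = None, math.inf
    for _ in range(max_trials):
        p, q = rng.sample(points, 2)     # step 1: choose p = 2 points at random
        line = line_through(p, q)        # step 2: fit the model to them
        med = median_sq_residual(line, points)  # step 3: median of r_i^2
        if med < best_med:
            best_line, best_med = line, med
    return best_line, best_med


if __name__ == "__main__":
    (a, b, c), med = lmeds(POINTS)
    print(f"a={a:.4f}, b={b:.4f}, c={c:.4f}, median d^2={med:.4f}")
```

As a hand check of one trial: choosing (2, 1) and (3, 5) gives a' = 4, b' = -1, c' = -7, i.e. 4x - y - 7 = 0 before normalization. The unnormalized squared residuals of the seven points are 0, 0, 64, 1156, 1089, 2401, 2601, whose median is 1089; dividing by a'^2 + b'^2 = 17 gives a median squared distance of about 64.06. Each of the 6 trials repeats this computation for a different pair, and the pair with the smallest median is kept.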