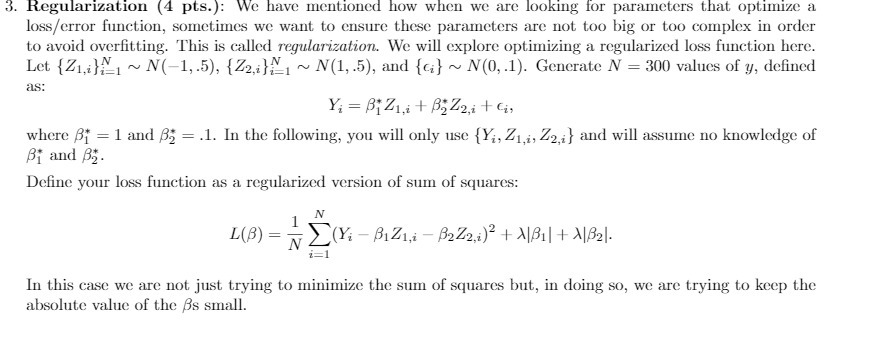

3. Regularization (4 pts.): We have mentioned how, when we are looking for parameters that optimize a loss/error function, we sometimes want to ensure these parameters are not too big or too complex, in order to avoid overfitting. This is called regularization. Here we will explore optimizing a regularized loss function.

Let $\{z_{1,i}\} \sim N(-1, .5)$, $\{z_{2,i}\} \sim N(1, .5)$, and $\{\epsilon_i\} \sim N(0, .1)$. Generate $N = 300$ values of $y$, defined as

$$y_i = \beta_1^* z_{1,i} + \beta_2^* z_{2,i} + \epsilon_i,$$

where $\beta_1^* = 1$ and $\beta_2^* = .1$. In what follows, you will only use $\{y_i, z_{1,i}, z_{2,i}\}$ and will assume no knowledge of $\beta_1^*$ and $\beta_2^*$.

Define your loss function as a regularized version of the sum of squares:

$$L(\beta) = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \beta_1 z_{1,i} - \beta_2 z_{2,i}\right)^2 + \lambda|\beta_1| + \lambda|\beta_2|.$$

In this case we are not just trying to minimize the sum of squares; in doing so, we are also trying to keep the absolute values of the $\beta$s small.
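A minimal NumPy sketch of the setup and of one way to minimize $L(\beta)$. Several things here are assumptions, not part of the question: the second argument of $N(\cdot,\cdot)$ is read as a standard deviation (if your course means variance, pass its square root instead); the seed is arbitrary; and the optimizer is proximal gradient descent (ISTA), a standard method for least squares with an $\ell_1$ penalty, which is not necessarily the method the course expects. The function names `generate_data`, `loss`, `soft_threshold`, and `fit_ista` are hypothetical.

```python
import numpy as np


def generate_data(n=300, beta_true=(1.0, 0.1), seed=0):
    """Simulate y_i = b1* z1_i + b2* z2_i + eps_i.

    Assumption: N(mean, s) is read with s as the standard deviation.
    """
    rng = np.random.default_rng(seed)
    z1 = rng.normal(-1.0, 0.5, size=n)
    z2 = rng.normal(1.0, 0.5, size=n)
    eps = rng.normal(0.0, 0.1, size=n)
    y = beta_true[0] * z1 + beta_true[1] * z2 + eps
    Z = np.column_stack([z1, z2])  # N x 2 design matrix
    return Z, y


def loss(beta, Z, y, lam):
    """L(beta) = (1/N) * sum of squared residuals + lam * (|b1| + |b2|)."""
    resid = y - Z @ beta
    return np.mean(resid ** 2) + lam * np.sum(np.abs(beta))


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def fit_ista(Z, y, lam, n_iter=5000, tol=1e-10):
    """Minimize L(beta) by proximal gradient descent (ISTA)."""
    n = Z.shape[0]
    # Lipschitz constant of the gradient of the smooth part (1/N)||y - Z beta||^2
    lipschitz = 2.0 / n * np.linalg.eigvalsh(Z.T @ Z).max()
    step = 1.0 / lipschitz
    beta = np.zeros(Z.shape[1])
    for _ in range(n_iter):
        # Gradient step on the squared-error term only
        grad = -2.0 / n * Z.T @ (y - Z @ beta)
        # Proximal step handles the non-differentiable |beta| penalty
        new_beta = soft_threshold(beta - step * grad, step * lam)
        if np.max(np.abs(new_beta - beta)) < tol:
            beta = new_beta
            break
        beta = new_beta
    return beta


if __name__ == "__main__":
    Z, y = generate_data()
    for lam in (0.0, 0.1, 1.0):
        b = fit_ista(Z, y, lam)
        print(f"lambda={lam}: beta={b}, L(beta)={loss(b, Z, y, lam):.5f}")
```

With $\lambda = 0$ this reduces to ordinary least squares. As $\lambda$ grows, the soft-threshold step pulls both coefficients toward zero, and once $\lambda$ exceeds $\max_j \left|\tfrac{2}{N}\sum_i z_{j,i} y_i\right|$ both estimates are exactly zero. Plain gradient descent is avoided here because $|\beta_j|$ is not differentiable at zero.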
