Question:

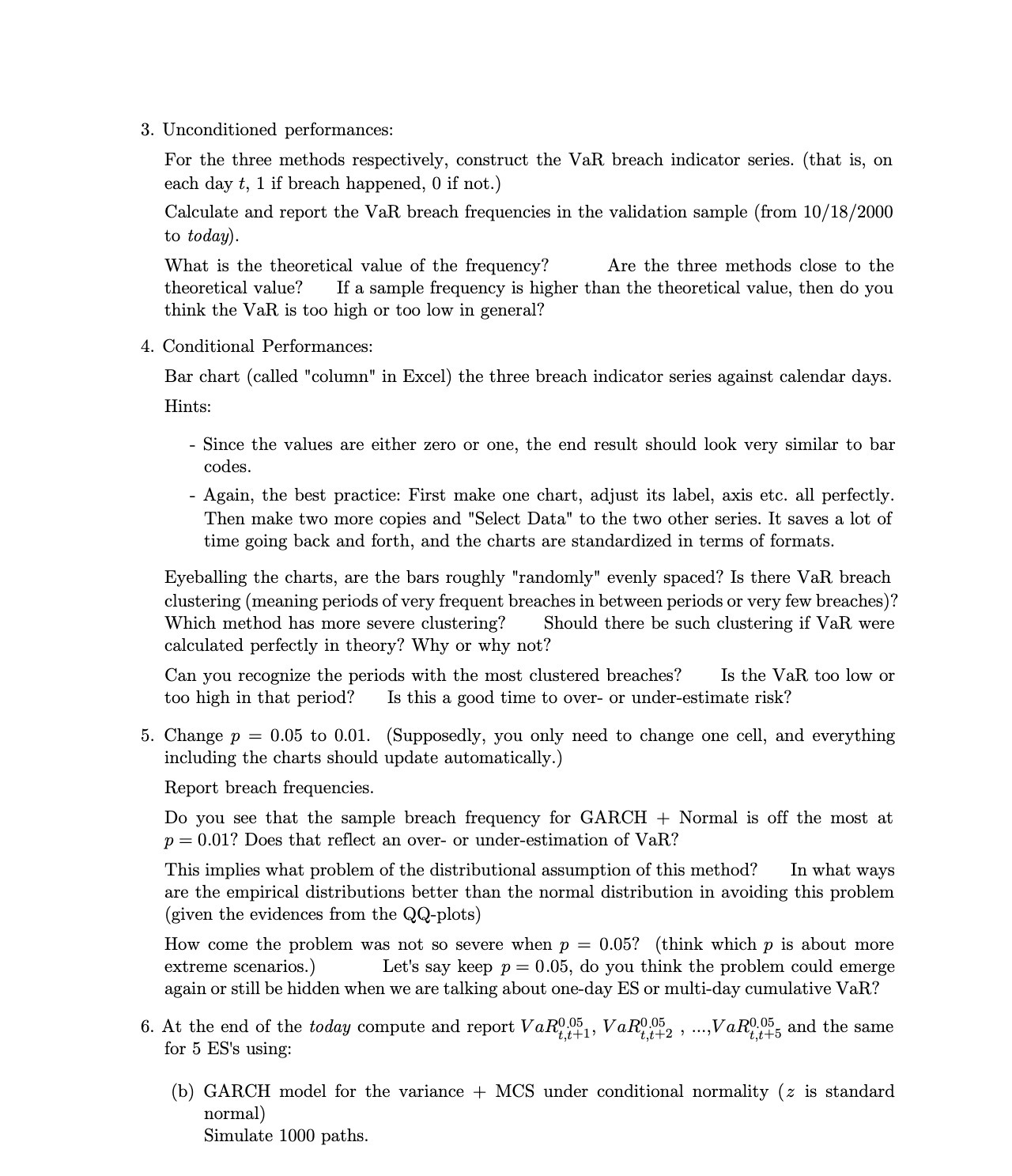

3. Unconditional performance: For each of the three methods, construct the VaR breach indicator series (that is, on each day t, 1 if a breach happened and 0 if not). Calculate and report the VaR breach frequencies in the validation sample (from 10/18/2000 to today). What is the theoretical value of the frequency? Are the three methods close to the theoretical value? If a sample frequency is higher than the theoretical value, do you think the VaR is too high or too low in general?

4. Conditional performance: Make a bar chart (called a "column" chart in Excel) of each of the three breach indicator series against calendar days.

Hints:
- Since the values are either zero or one, the end result should look very similar to a bar code.
- Again, the best practice: first make one chart and get its labels, axes, etc. exactly right. Then make two copies and use "Select Data" to point them to the other two series. This saves a lot of time going back and forth, and the charts end up with a standardized format.

Eyeballing the charts, are the bars spaced roughly "randomly" and evenly? Is there VaR breach clustering (that is, periods of very frequent breaches in between periods of very few breaches)? Which method has more severe clustering? Should there be such clustering if VaR were calculated perfectly in theory? Why or why not? Can you recognize the periods with the most clustered breaches? Is the VaR too low or too high in those periods? Is this a good time to over- or underestimate risk?

5. Change p = 0.05 to 0.01. (Supposedly, you only need to change one cell, and everything, including the charts, should update automatically.) Report the breach frequencies. Do you see that the sample breach frequency for GARCH + Normal is off the most at p = 0.01? Does that reflect an over- or underestimation of VaR? What does this imply about the distributional assumption of this method?
In what ways are the empirical distributions better than the normal distribution at avoiding this problem (given the evidence from the QQ-plots)? Why was the problem not as severe when p = 0.05? (Think about which p concerns more extreme scenarios.) Keeping p = 0.05, do you think the problem could emerge again, or would it still be hidden, when we are talking about one-day ES or multiday cumulative VaR?

6. At the end of today, compute and report the K-day cumulative VaRs $\mathrm{VaR}^{p}_{t+1:t+K}$ for $K = 1, 2, \ldots, 5$, and the same for the five ES's, using:

(b) GARCH model for the variance + MCS under conditional normality (z is standard normal). Simulate 1000 paths.
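For questions 3 and 5, the breach indicator and its sample frequency can be sketched in a few lines of NumPy. This is a minimal sketch, assuming each method's VaR is stored as a positive number (a loss), so that day t counts as a breach when the return falls strictly below -VaR_t. The function names and the toy numbers are hypothetical, not taken from the assignment; in practice both series would first be restricted to the validation sample starting 10/18/2000.

```python
import numpy as np

def breach_indicators(returns, var):
    """Return 1 on days when the loss exceeds VaR, 0 otherwise.

    Assumes VaR is reported as a positive number, so a breach on day t
    means returns[t] < -var[t].
    """
    returns = np.asarray(returns, dtype=float)
    var = np.asarray(var, dtype=float)
    return (returns < -var).astype(int)

def breach_frequency(indicators):
    """Sample breach frequency: the share of days with a breach."""
    return float(np.mean(indicators))

# Hypothetical toy data (not from the assignment): five daily returns
# and a constant 2% one-day VaR.
returns = np.array([0.010, -0.030, 0.005, -0.025, -0.010])
var = np.full(5, 0.02)
hits = breach_indicators(returns, var)
print(hits)                    # [0 1 0 1 0]
print(breach_frequency(hits))  # 0.4
```

The same two functions apply unchanged to the p = 0.01 VaR series in question 5; only the VaR input changes, which mirrors the "change one cell" setup of the spreadsheet.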
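For question 6(b), the Monte Carlo step can be sketched as follows, under stated assumptions: a GARCH(1,1) variance, $\sigma^2_{t+1} = \omega + \alpha R_t^2 + \beta \sigma^2_t$, zero-mean daily returns, standard normal shocks z, and 1000 simulated paths. The function name `garch_mcs_var_es` and the parameter values in the usage lines are hypothetical placeholders; substitute your own estimates and the day t+1 variance forecast. VaR and ES are returned as positive losses on the 1- to 5-day cumulative return.

```python
import numpy as np

def garch_mcs_var_es(omega, alpha, beta, sigma2_next, p=0.05,
                     horizon=5, n_paths=1000, seed=0):
    """K-day cumulative VaR and ES for K = 1..horizon via Monte Carlo
    simulation of a GARCH(1,1) with standard normal shocks.

    Assumes zero-mean daily returns and that sigma2_next is the GARCH
    variance forecast for day t+1. Returns two arrays of positive losses.
    """
    rng = np.random.default_rng(seed)
    sigma2 = np.full(n_paths, float(sigma2_next))  # day t+1 variance on every path
    cum = np.zeros(n_paths)                        # cumulative return per path
    var_k, es_k = [], []
    for _ in range(horizon):
        z = rng.standard_normal(n_paths)  # conditional normality: z ~ N(0, 1)
        r = np.sqrt(sigma2) * z           # simulated daily return on each path
        cum += r
        # p-quantile and tail mean of the K-day cumulative return
        q = np.quantile(cum, p)
        var_k.append(-q)
        es_k.append(-cum[cum <= q].mean())
        # GARCH(1,1) update: next day's variance responds to this day's shock
        sigma2 = omega + alpha * r**2 + beta * sigma2
    return np.array(var_k), np.array(es_k)

# Hypothetical parameter values, for illustration only.
var_by_k, es_by_k = garch_mcs_var_es(omega=1e-6, alpha=0.08, beta=0.90,
                                     sigma2_next=1.5e-4)
for k, (v, e) in enumerate(zip(var_by_k, es_by_k), start=1):
    print(f"{k}-day: VaR = {v:.4f}, ES = {e:.4f}")
```

Simulating whole paths, rather than scaling the 1-day figure by the square root of K, lets each path's variance react to its own simulated shocks, so the multiday distribution reflects the GARCH dynamics. With only 1000 paths the estimates carry simulation noise; a fixed seed makes the reported numbers reproducible.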