Question:

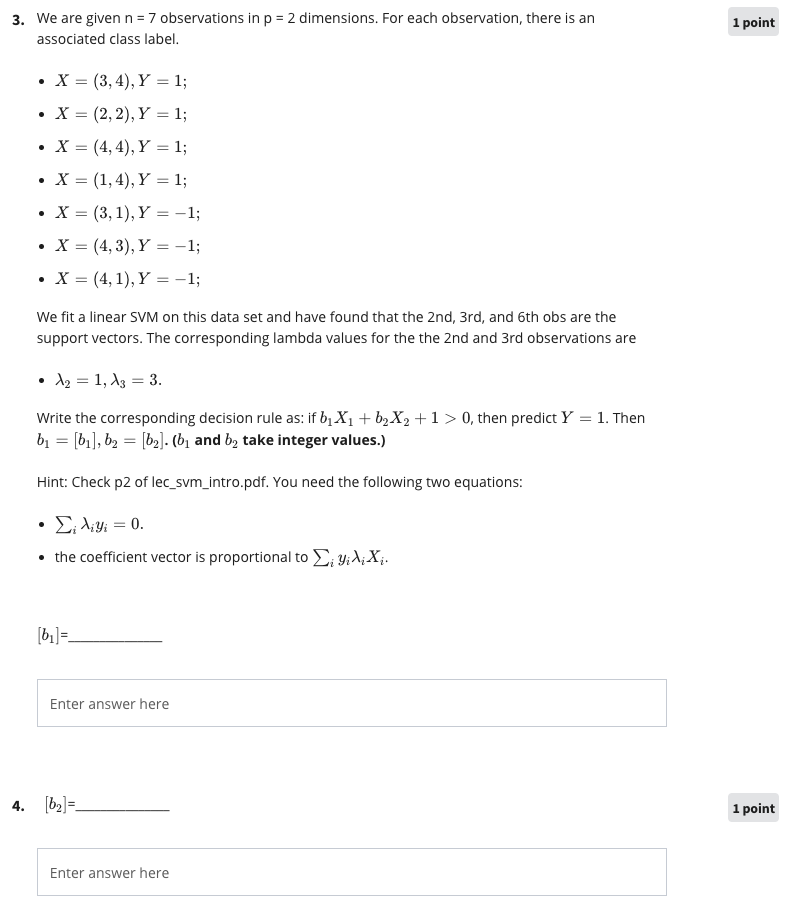

3. We are given n = 7 observations in p = 2 dimensions. For each observation, there is an associated class label.

- X = (3, 4), Y = 1
- X = (2, 2), Y = 1
- X = (4, 4), Y = 1
- X = (1, 4), Y = 1
- X = (3, 1), Y = -1
- X = (4, 3), Y = -1
- X = (4, 1), Y = -1

We fit a linear SVM on this data set and have found that the 2nd, 3rd, and 6th observations are the support vectors. The corresponding $\lambda$ values for the 2nd and 3rd observations are $\lambda_2 = 1$ and $\lambda_3 = 3$.

Write the corresponding decision rule as: if $b_1 X_1 + b_2 X_2 + 1 > 0$, then predict $Y^* = 1$. Then $b_1$ = [b1], $b_2$ = [b2]. ($b_1$ and $b_2$ take integer values.)

Hint: Check p2 of lec_svm_intro.pdf. You need the following two equations:

- $\sum_i \lambda_i y_i = 0$.
- The coefficient vector is proportional to $\sum_i \lambda_i y_i x_i$.
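The two equations in the hint can be applied numerically. The following is a minimal sketch, not the course's reference solution: the function name `solve_svm_coefficients` and all variable names are hypothetical. It also assumes the usual canonical scaling, in which a support vector satisfies $y_i (b_1 x_{i1} + b_2 x_{i2} + 1) = 1$; the question does not state this explicitly, but it is needed to turn "proportional to" into actual values.

```python
import numpy as np

def solve_svm_coefficients():
    # Data from the question, in the order given (observation 1 is index 0).
    X = np.array([[3, 4], [2, 2], [4, 4], [1, 4],
                  [3, 1], [4, 3], [4, 1]], dtype=float)
    y = np.array([1, 1, 1, 1, -1, -1, -1], dtype=float)

    # Only the 2nd, 3rd and 6th observations are support vectors,
    # so every other lambda is zero.
    lam = np.zeros(len(y))
    lam[1] = 1.0  # lambda_2, given
    lam[2] = 3.0  # lambda_3, given

    # First hint: sum_i lambda_i * y_i = 0, solved for lambda_6.
    lam[5] = -(lam @ y) / y[5]

    # Second hint: coefficient vector is proportional to sum_i lambda_i y_i x_i.
    direction = (lam * y) @ X

    # Assumption: canonical scaling y_6 * (c * direction . x_6 + 1) = 1
    # on support vector 6 fixes the constant c (intercept is 1 by the question).
    c = (y[5] - 1.0) / (direction @ X[5])
    b = c * direction

    # Sanity check: y_i * f(x_i) for every observation.
    margins = y * (X @ b + 1.0)
    return lam, b, margins

lam, b, margins = solve_svm_coefficients()
print("lambda_6 =", lam[5])
print("b1, b2 =", b[0], b[1])
print("all observations on the correct side:", bool(np.all(margins >= 1.0)))
```

Under these assumptions the sketch gives $\lambda_6 = 4$, $b_1 = -2$, and $b_2 = 2$. All seven observations then satisfy $y_i (b_1 x_{i1} + b_2 x_{i2} + 1) \ge 1$, with equality at the three stated support vectors.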
