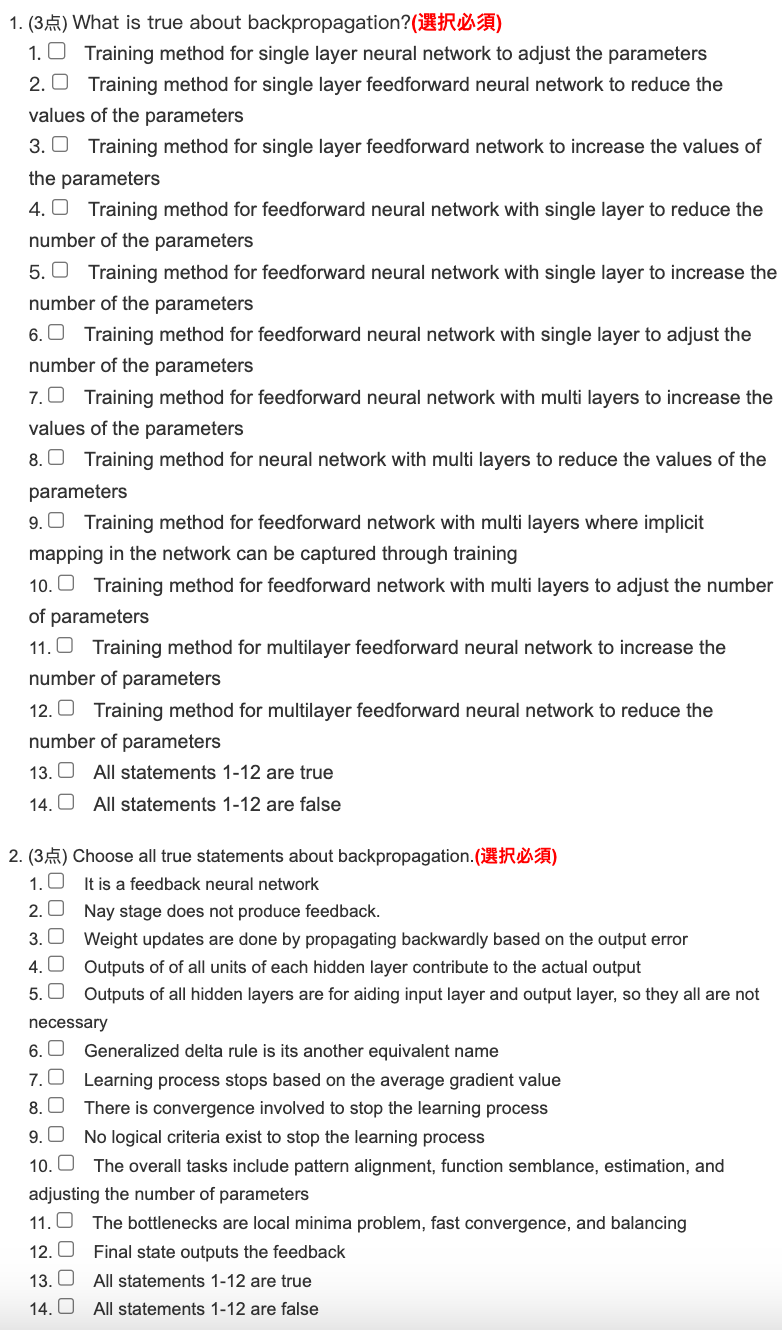

Question (3): What is true about backpropagation?

Training method for single layer neural network to adjust the parameters

Training method for single layer feedforward neural network to reduce the values of the parameters

Training method for single layer feedforward network to increase the values of the parameters

Training method for feedforward neural network with single layer to reduce the number of the parameters

Training method for feedforward neural network with single layer to increase the number of the parameters

Training method for feedforward neural network with single layer to adjust the number of the parameters

Training method for feedforward neural network with multiple layers to increase the values of the parameters

Training method for neural network with multiple layers to reduce the values of the parameters

Training method for feedforward network with multiple layers where implicit mapping in the network can be captured through training

Training method for feedforward network with multiple layers to adjust the number of parameters

Training method for multilayer feedforward neural network to increase the number of parameters

Training method for multilayer feedforward neural network to reduce the number of parameters

All statements are true

All statements are false
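The options above differ on whether backpropagation changes the values or the number of parameters, and on whether it applies to single-layer or multilayer networks. As a minimal sketch of the standard technique (not an answer key for this question), the Python below trains a small multilayer feedforward network: the output error yields an output-layer delta, that delta is propagated backward through the output weights to give a delta for each hidden unit (the generalized delta rule), and only the weight values are updated. The 2-3-1 layer sizes, sigmoid activations, squared-error loss, learning rate `lr`, and the helper names `forward`, `backprop`, and `train_step` are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass: input -> sigmoid hidden layer -> single sigmoid output unit."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def loss(x, t, W1, b1, W2, b2):
    """Squared error E = 0.5 * (t - y)^2 for one training pair (x, t)."""
    _, y = forward(x, W1, b1, W2, b2)
    return 0.5 * (t - y) ** 2


def backprop(x, t, W1, b1, W2, b2):
    """Gradients of E with respect to every weight and bias (assumed sigmoid units)."""
    h, y = forward(x, W1, b1, W2, b2)
    # Output-layer delta: output error times the sigmoid derivative y * (1 - y).
    delta_out = (y - t) * y * (1.0 - y)
    gW2 = [delta_out * hj for hj in h]
    gb2 = delta_out
    # Hidden-layer deltas: the output delta propagated backward through W2,
    # scaled by each hidden unit's own sigmoid derivative.
    delta_hid = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(W2, h)]
    gW1 = [[d * xi for xi in x] for d in delta_hid]
    gb1 = list(delta_hid)
    return gW1, gb1, gW2, gb2


def train_step(x, t, W1, b1, W2, b2, lr=0.1):
    """One gradient-descent update; weight values change, their number does not."""
    gW1, gb1, gW2, gb2 = backprop(x, t, W1, b1, W2, b2)
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    W2 = [w - lr * g for w, g in zip(W2, gW2)]
    b2 = b2 - lr * gb2
    return W1, b1, W2, b2


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical 2-3-1 network with random initial weights.
    W1 = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)]
    b1 = [0.0] * 3
    W2 = [random.uniform(-1, 1) for _ in range(3)]
    b2 = 0.0
    x, t = [1.0, 0.0], 1.0
    print("error before:", loss(x, t, W1, b1, W2, b2))
    W1, b1, W2, b2 = train_step(x, t, W1, b1, W2, b2)
    print("error after: ", loss(x, t, W1, b1, W2, b2))
```

Comparing `backprop` against a finite-difference estimate of the gradient is a quick way to confirm that the backward pass is consistent with `loss`.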

Choose all true statements about backpropagation.

It is a feedback neural network

No stage produces feedback.

Weight updates are done by propagating backward based on the output error

Outputs of all units of each hidden layer contribute to the actual output

Outputs of all hidden layers are for aiding input layer and output layer, so they all are not necessary

Generalized delta rule is another name for it

Learning process stops based on the average gradient value

There is convergence involved to stop the learning process

No logical criteria exist to stop the learning process

The overall tasks include pattern alignment, function semblance, estimation, and adjusting the number of parameters

The bottlenecks are local minima problem, fast convergence, and balancing

Final state outputs the feedback

All statements are true

All statements are false
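Several statements above concern how the learning process stops. One common heuristic, assumed here rather than taken from the question, is to treat batch training as converged once the average magnitude of the gradient falls below a small threshold, with a maximum epoch count as a fallback. The sketch below applies that rule to backpropagation on a hypothetical 2-3-1 sigmoid network learning logical OR; the threshold `grad_tol`, the epoch cap `max_epochs`, the learning rate, the data, and all function names are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, p):
    """p = (W1, b1, W2, b2): one sigmoid hidden layer, one sigmoid output unit."""
    W1, b1, W2, b2 = p
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return h, sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)


def mean_error(data, p):
    """Mean over the data of 0.5 * (t - y)^2."""
    return sum(0.5 * (t - forward(x, p)[1]) ** 2 for x, t in data) / len(data)


def batch_gradient(data, p):
    """Backpropagated gradients of mean_error, averaged over all training pairs."""
    W1, b1, W2, b2 = p
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    n = len(data)
    for x, t in data:
        h, y = forward(x, p)
        d_out = (y - t) * y * (1.0 - y)  # output-layer delta
        for j, hj in enumerate(h):
            d_hid = d_out * W2[j] * hj * (1.0 - hj)  # delta propagated back to hidden unit j
            gW2[j] += d_out * hj / n
            gb1[j] += d_hid / n
            for i, xi in enumerate(x):
                gW1[j][i] += d_hid * xi / n
        gb2 += d_out / n
    return gW1, gb1, gW2, gb2


def train(data, p, lr=0.5, grad_tol=1e-3, max_epochs=10000):
    """Batch backpropagation; stops once the average |gradient| drops below grad_tol."""
    for epoch in range(1, max_epochs + 1):
        gW1, gb1, gW2, gb2 = batch_gradient(data, p)
        flat = [g for row in gW1 for g in row] + gb1 + gW2 + [gb2]
        avg_grad = sum(abs(g) for g in flat) / len(flat)
        if avg_grad < grad_tol:  # assumed convergence criterion
            return p, epoch, True
        W1, b1, W2, b2 = p
        p = (
            [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)],
            [b - lr * g for b, g in zip(b1, gb1)],
            [w - lr * g for w, g in zip(W2, gW2)],
            b2 - lr * gb2,
        )
    return p, max_epochs, False  # epoch budget exhausted without meeting the criterion


if __name__ == "__main__":
    random.seed(0)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
    p0 = (
        [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)],
        [0.0] * 3,
        [random.uniform(-1, 1) for _ in range(3)],
        0.0,
    )
    p, epochs, converged = train(data, p0, grad_tol=1e-3, max_epochs=20000)
    print("epochs:", epochs, "converged:", converged)
    print("error before:", mean_error(data, p0), "after:", mean_error(data, p))
```

Because the gradient is checked before each update, the loop can stop at the first epoch if the starting weights already satisfy the threshold; the epoch cap guarantees termination when the threshold is never reached.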
