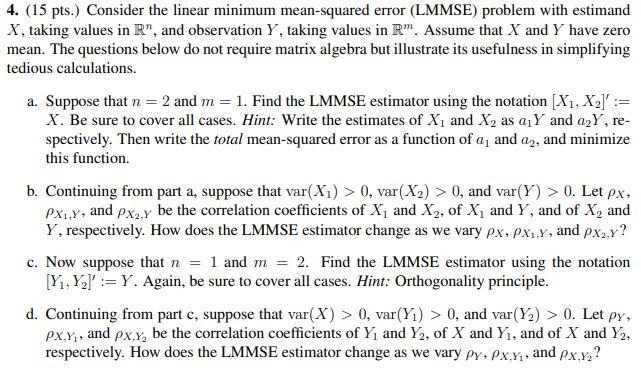

Question: 4. (15 pts.) Consider the linear minimum mean-squared error (LMMSE) problem with estimand $X$, taking values in $\mathbb{R}^n$, and observation $Y$, taking values in $\mathbb{R}^m$. Assume that $X$ and $Y$ have zero mean. The questions below do not require matrix algebra but illustrate its usefulness in simplifying tedious calculations.

a. Suppose that $n = 2$ and $m = 1$. Find the LMMSE estimator using the notation $(X_1, X_2)' := X$. Be sure to cover all cases. Hint: Write the estimates of $X_1$ and $X_2$ as $a_1 Y$ and $a_2 Y$, respectively. Then write the total mean-squared error as a function of $a_1$ and $a_2$, and minimize this function.

b. Continuing from part a, suppose that $\mathrm{var}(X_1) > 0$, $\mathrm{var}(X_2) > 0$, and $\mathrm{var}(Y) > 0$. Let $\rho_X$, $\rho_{X_1,Y}$, and $\rho_{X_2,Y}$ be the correlation coefficients of $X_1$ and $X_2$, of $X_1$ and $Y$, and of $X_2$ and $Y$, respectively. How does the LMMSE estimator change as we vary $\rho_X$, $\rho_{X_1,Y}$, and $\rho_{X_2,Y}$?

c. Now suppose that $n = 1$ and $m = 2$. Find the LMMSE estimator using the notation $[Y_1, Y_2]' := Y$. Again, be sure to cover all cases. Hint: Orthogonality principle.

d. Continuing from part c, suppose that $\mathrm{var}(X) > 0$, $\mathrm{var}(Y_1) > 0$, and $\mathrm{var}(Y_2) > 0$. Let $\rho_Y$, $\rho_{X,Y_1}$, and $\rho_{X,Y_2}$ be the correlation coefficients of $Y_1$ and $Y_2$, of $X$ and $Y_1$, and of $X$ and $Y_2$, respectively. How does the LMMSE estimator change as we vary $\rho_Y$, $\rho_{X,Y_1}$, and $\rho_{X,Y_2}$?
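A hand-derived answer to parts a and c can be checked numerically. The sketch below is not part of the original question. It assumes the second moments are already known, and the function names `lmmse_scalar_obs` and `lmmse_two_obs` are hypothetical helpers introduced only for illustration. For part a it uses the standard per-component coefficient E[X_i Y] / E[Y^2], falling back to zero when var(Y) = 0. For part c it solves the normal equations implied by the orthogonality principle, using a pseudo-inverse so that a singular covariance of (Y1, Y2) is also handled.

```python
import numpy as np

def lmmse_scalar_obs(cov_xy, var_y):
    """Part a (n = 2, m = 1): coefficients (a1, a2) with X_i estimated by a_i * Y.

    cov_xy holds (E[X1 Y], E[X2 Y]); var_y is E[Y^2] (zero-mean case).
    If var_y == 0 then Y = 0 almost surely, every choice of a_i gives the
    same estimate (zero), so we return zeros by convention.
    """
    cov_xy = np.asarray(cov_xy, dtype=float)
    if var_y == 0.0:
        return np.zeros_like(cov_xy)
    return cov_xy / var_y

def lmmse_two_obs(cov_y, cov_yx):
    """Part c (n = 1, m = 2): coefficients (b1, b2) with X estimated by b1*Y1 + b2*Y2.

    cov_y is the 2x2 covariance of (Y1, Y2); cov_yx holds (E[Y1 X], E[Y2 X]).
    Orthogonality of the error to Y1 and Y2 gives cov_y @ b = cov_yx.
    The pseudo-inverse picks the minimum-norm solution when cov_y is singular.
    """
    cov_y = np.asarray(cov_y, dtype=float)
    cov_yx = np.asarray(cov_yx, dtype=float)
    return np.linalg.pinv(cov_y) @ cov_yx

# Illustrative moments (made up for this example, not taken from the question).
a = lmmse_scalar_obs([1.0, 0.5], 2.0)
b = lmmse_two_obs([[1.0, 0.5], [0.5, 1.0]], [0.5, 0.5])
b_singular = lmmse_two_obs([[1.0, 1.0], [1.0, 1.0]], [0.5, 0.5])
```

In the singular case the two observations are perfectly correlated, so the coefficients are not unique; the pseudo-inverse splits the weight evenly between Y1 and Y2, which yields the same estimate as putting all the weight on either one.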
