Question:

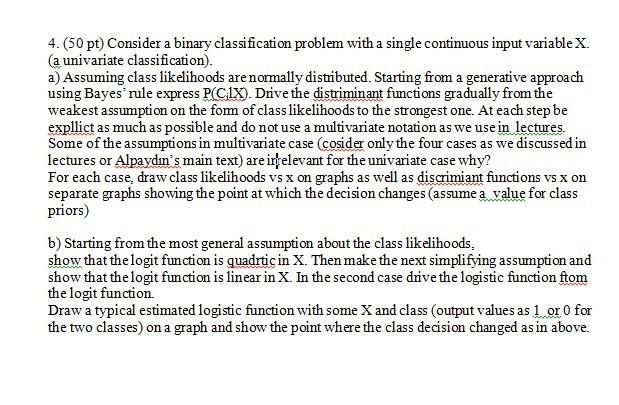

4. (50 pt) Consider a binary classification problem with a single continuous input variable X (univariate classification).

a) Assume the class likelihoods are normally distributed. Starting from a generative approach, use Bayes' rule to express $P(C_i \mid X)$. Derive the discriminant functions step by step, from the weakest assumption on the form of the class likelihoods to the strongest. At each step be as explicit as possible, and do not use the multivariate notation used in lectures. Some of the assumptions of the multivariate case (consider only the four cases discussed in lectures or in Alpaydin's main text) are irrelevant in the univariate case. Why? For each case, plot the class likelihoods against x and, on separate graphs, the discriminant functions against x, marking the point at which the decision changes (assume values for the class priors).

b) Starting from the most general assumption about the class likelihoods, show that the logit function is quadratic in X. Then make the next simplifying assumption and show that the logit function is linear in X. In the second case, derive the logistic function from the logit function. Draw a typical estimated logistic function on a graph together with some X values and their classes (output values 1 or 0 for the two classes), and show the point where the class decision changes, as above.
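A sketch of the standard univariate derivation for part (a), assuming Gaussian class likelihoods with means $\mu_i$, standard deviations $\sigma_i$ and priors $P(C_i)$ (symbols introduced here following the usual textbook treatment; this is not an official solution). Because the evidence $p(x)$ is the same for both classes, the discriminant can be taken as $g_i(x) = \log p(x \mid C_i) + \log P(C_i)$:

$$
\begin{aligned}
P(C_i \mid x) &= \frac{p(x \mid C_i)\,P(C_i)}{\sum_{k=1}^{2} p(x \mid C_k)\,P(C_k)},
\qquad p(x \mid C_i) = \frac{1}{\sqrt{2\pi}\,\sigma_i}\exp\!\left[-\frac{(x-\mu_i)^2}{2\sigma_i^2}\right]\\[4pt]
g_i(x) &= -\tfrac{1}{2}\log 2\pi - \log\sigma_i - \frac{(x-\mu_i)^2}{2\sigma_i^2} + \log P(C_i)\\[4pt]
\sigma_1 \neq \sigma_2:\quad g_i(x) &= -\frac{1}{2\sigma_i^2}\,x^2 + \frac{\mu_i}{\sigma_i^2}\,x - \frac{\mu_i^2}{2\sigma_i^2} - \log\sigma_i + \log P(C_i)\\[4pt]
\sigma_1 = \sigma_2 = \sigma:\quad g_i(x) &= \frac{\mu_i}{\sigma^2}\,x - \frac{\mu_i^2}{2\sigma^2} + \log P(C_i),
\qquad x^* = \frac{\mu_1+\mu_2}{2} + \frac{\sigma^2}{\mu_1-\mu_2}\log\frac{P(C_2)}{P(C_1)}
\end{aligned}
$$

Terms shared by both classes are dropped because they do not change the comparison: $-\tfrac{1}{2}\log 2\pi$ in both cases, and additionally $-x^2/(2\sigma^2) - \log\sigma$ when the variance is shared. In one dimension the covariance matrix reduces to the single number $\sigma_i^2$, so of the four multivariate cases (per-class covariance, shared covariance, shared diagonal covariance, shared isotropic covariance $s^2 I$) the last three collapse into one: there are no off-diagonal correlations to set to zero and no per-dimension variances to equalize. Only two cases remain: $\sigma_1 \neq \sigma_2$, where $g_i$ is quadratic and $g_1(x) = g_2(x)$ can hold at up to two points, and $\sigma_1 = \sigma_2$, where there is a single threshold $x^*$ that becomes the midpoint of the two means when the priors are equal.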
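The two univariate cases can be checked numerically. The sketch below uses only the Python standard library to evaluate the Gaussian log discriminant $g_i(x) = \log p(x \mid C_i) + \log P(C_i)$ and to solve $g_1(x) = g_2(x)$ for the point or points where the decision changes. The function names `g` and `boundaries` and the example parameter values are hypothetical, chosen only for illustration.

```python
import math


def g(x, mu, sigma, prior):
    """Discriminant g_i(x) = log p(x|C_i) + log P(C_i) for a 1-D Gaussian likelihood."""
    return (-0.5 * math.log(2 * math.pi) - math.log(sigma)
            - (x - mu) ** 2 / (2 * sigma ** 2) + math.log(prior))


def boundaries(mu1, s1, p1, mu2, s2, p2):
    """Return the sorted x values where g_1(x) = g_2(x), i.e. where the decision changes."""
    # g_1(x) - g_2(x) = a*x**2 + b*x + c (the -0.5*log(2*pi) terms cancel)
    a = -1 / (2 * s1 ** 2) + 1 / (2 * s2 ** 2)
    b = mu1 / s1 ** 2 - mu2 / s2 ** 2
    c = (-mu1 ** 2 / (2 * s1 ** 2) + mu2 ** 2 / (2 * s2 ** 2)
         - math.log(s1) + math.log(s2) + math.log(p1) - math.log(p2))
    if abs(a) < 1e-12:
        # Shared variance: the x**2 terms cancel, leaving a linear equation.
        return [] if b == 0 else [-c / b]
    disc = b * b - 4 * a * c
    if disc < 0:
        # No real crossing: one class has the larger discriminant for every x.
        return []
    r = math.sqrt(disc)
    return sorted([(-b - r) / (2 * a), (-b + r) / (2 * a)])


# Hypothetical example values (mean, std dev, prior) for C_1 and C_2.
print(boundaries(0.0, 1.0, 0.5, 3.0, 1.0, 0.5))  # shared sigma, equal priors
print(boundaries(0.0, 1.0, 0.5, 2.0, 2.0, 0.5))  # different sigmas
```

With a shared $\sigma$ and equal priors there is one threshold at the midpoint of the means (1.5 here); making one prior larger shifts it away from that class's mean. With $\sigma_2 > \sigma_1$ the wider class wins on both tails, so there are two decision points. Evaluating `g` over a grid of x values gives the discriminant curves to plot.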
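For part (b), a sketch under the same Gaussian assumptions, with means $\mu_i$, standard deviations $\sigma_i$ and priors $P(C_i)$ introduced here for illustration. The logit is the log-odds of the two posteriors, and the evidence $p(x)$ cancels in the ratio:

$$
\begin{aligned}
\operatorname{logit} P(C_1 \mid x) &= \log\frac{P(C_1 \mid x)}{P(C_2 \mid x)} = \log\frac{p(x \mid C_1)}{p(x \mid C_2)} + \log\frac{P(C_1)}{P(C_2)}\\
&= \log\frac{\sigma_2}{\sigma_1} - \frac{(x-\mu_1)^2}{2\sigma_1^2} + \frac{(x-\mu_2)^2}{2\sigma_2^2} + \log\frac{P(C_1)}{P(C_2)}\\
&= \left(\frac{1}{2\sigma_2^2} - \frac{1}{2\sigma_1^2}\right)x^2 + \left(\frac{\mu_1}{\sigma_1^2} - \frac{\mu_2}{\sigma_2^2}\right)x + \frac{\mu_2^2}{2\sigma_2^2} - \frac{\mu_1^2}{2\sigma_1^2} + \log\frac{\sigma_2}{\sigma_1} + \log\frac{P(C_1)}{P(C_2)}\\[6pt]
\sigma_1 = \sigma_2 = \sigma:\quad \operatorname{logit} P(C_1 \mid x) &= w\,x + w_0, \qquad w = \frac{\mu_1 - \mu_2}{\sigma^2}, \qquad w_0 = -\frac{\mu_1^2 - \mu_2^2}{2\sigma^2} + \log\frac{P(C_1)}{P(C_2)}\\[4pt]
\log\frac{y}{1-y} = w\,x + w_0 \;&\Longrightarrow\; y = P(C_1 \mid x) = \frac{1}{1 + \exp\!\left[-(w\,x + w_0)\right]}
\end{aligned}
$$

The $x^2$ coefficient vanishes only when $\sigma_1 = \sigma_2$, so under the most general assumption the logit is quadratic in $x$. With a shared $\sigma$ it is linear, and writing $y = P(C_1 \mid x)$ with $P(C_2 \mid x) = 1 - y$ and solving for $y$ gives the logistic (sigmoid) function. The decision changes where $y = 0.5$, i.e. where $w\,x + w_0 = 0$, at $x^* = -w_0 / w$.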
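Part (b) asks for an estimated logistic function. One way to estimate $w$ and $w_0$ directly from labelled data is maximum likelihood via Newton's method (iteratively reweighted least squares). The choice of estimator, the function names `sigmoid` and `fit_logistic_1d`, and the synthetic data (class 0 drawn from N(0, 1), class 1 from N(3, 1), 200 points each) are assumptions for illustration, not part of the question.

```python
import math
import random


def sigmoid(z):
    """Numerically safe logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_1d(xs, ys, iters=25):
    """Maximum-likelihood fit of P(y=1|x) = sigmoid(w*x + w0) by Newton's method."""
    w, w0 = 0.0, 0.0
    for _ in range(iters):
        gw = gw0 = 0.0            # gradient of the log-likelihood
        hww = hww0 = hw0w0 = 0.0  # entries of the negated Hessian (2x2, symmetric)
        for x, y in zip(xs, ys):
            p = sigmoid(w * x + w0)
            gw += (y - p) * x
            gw0 += y - p
            s = p * (1.0 - p)
            hww += s * x * x
            hww0 += s * x
            hw0w0 += s
        det = hww * hw0w0 - hww0 ** 2
        # Newton step: (w, w0) += H^{-1} g, using the closed-form 2x2 inverse.
        w += (hw0w0 * gw - hww0 * gw0) / det
        w0 += (hww * gw0 - hww0 * gw) / det
    return w, w0


# Hypothetical synthetic data: class 0 ~ N(0, 1), class 1 ~ N(3, 1), equal priors.
random.seed(0)
xs = [random.gauss(0.0, 1.0) for _ in range(200)] + [random.gauss(3.0, 1.0) for _ in range(200)]
ys = [0] * 200 + [1] * 200

w, w0 = fit_logistic_1d(xs, ys)
x_star = -w0 / w  # sigmoid(w*x + w0) = 0.5 here, so the decision changes at x_star
print(f"w = {w:.3f}, w0 = {w0:.3f}, decision point x* = {x_star:.3f}")
```

For these generating parameters the shared-variance Gaussian model gives a true threshold of 1.5, so the fitted $x^*$ should land near it. Plotting `sigmoid(w * x + w0)` over a range of x, with the (x, y) points drawn at heights 0 and 1, gives the S-shaped curve the question describes, crossing 0.5 at $x^*$.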
