Question:

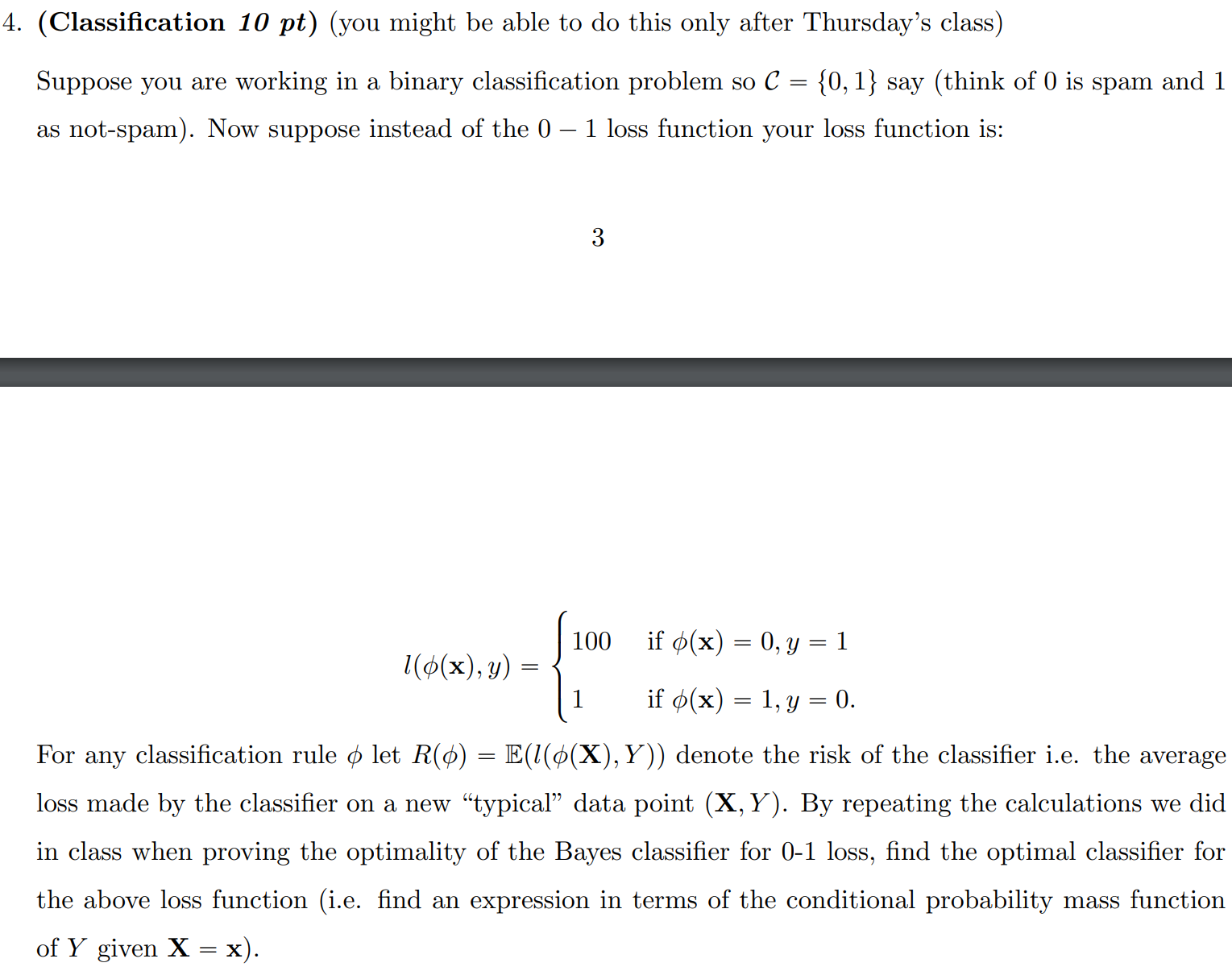



4. (Classification, 10 pt) (You might be able to do this only after Thursday's class.) Suppose you are working on a binary classification problem, so $C = \{0, 1\}$, say (think of 0 as spam and 1 as not-spam). Now suppose that instead of the 0-1 loss function, your loss function is:

$$
\ell(\phi(x), y) =
\begin{cases}
100 & \text{if } \phi(x) = 0,\ y = 1,\\
1 & \text{if } \phi(x) = 1,\ y = 0.
\end{cases}
$$

For any classification rule $\phi$, let $R(\phi) = \mathbb{E}\big[\ell(\phi(X), Y)\big]$ denote the risk of the classifier, i.e. the average loss made by the classifier on a new "typical" data point $(X, Y)$. By repeating the calculations we did in class when proving the optimality of the Bayes classifier for 0-1 loss, find the optimal classifier for the above loss function (i.e. find an expression in terms of the conditional probability mass function of $Y$ given $X = x$).
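A minimal sketch of the pointwise argument, assuming (as with 0-1 loss) that the loss is 0 whenever $\phi(x) = y$: write $R(\phi) = \mathbb{E}\big[\mathbb{E}[\ell(\phi(X), Y) \mid X]\big]$. For a fixed $x$, predicting 0 has conditional risk $100 \cdot P(Y = 1 \mid X = x)$ and predicting 1 has conditional risk $1 \cdot P(Y = 0 \mid X = x)$, so minimizing separately for each $x$ gives $\phi^*(x) = 1$ if $P(Y = 0 \mid X = x) \le 100\, P(Y = 1 \mid X = x)$, i.e. if $P(Y = 1 \mid X = x) \ge 1/101$, and $\phi^*(x) = 0$ otherwise (ties can be broken either way). The Python below checks this on a made-up discrete joint pmf by comparing the rule with a brute-force search over every possible classifier; the names `loss`, `optimal_rule`, `risk` and the toy probabilities are hypothetical illustrations, not taken from the course.

```python
from itertools import product

# Costs from the question's loss table. ASSUMPTION: a correct prediction
# (phi(x) == y) has loss 0, as for the 0-1 loss.
COST_FALSE_SPAM = 100.0  # predict 0 (spam) when y = 1 (not-spam)
COST_FALSE_HAM = 1.0     # predict 1 (not-spam) when y = 0 (spam)


def loss(pred: int, y: int) -> float:
    """Loss l(phi(x), y) from the question, with 0 assumed when pred == y."""
    if pred == 0 and y == 1:
        return COST_FALSE_SPAM
    if pred == 1 and y == 0:
        return COST_FALSE_HAM
    return 0.0


def optimal_rule(eta: float) -> int:
    """Cost-sensitive Bayes rule given eta = P(Y = 1 | X = x).

    Predict 1 when the conditional risk of predicting 1, (1 - eta) * 1,
    is no larger than that of predicting 0, eta * 100, i.e. eta >= 1/101.
    """
    return 1 if COST_FALSE_HAM * (1 - eta) <= COST_FALSE_SPAM * eta else 0


def risk(classifier: dict, joint: dict) -> float:
    """R(phi) = E[l(phi(X), Y)] for a finite joint pmf {(x, y): p}."""
    return sum(p * loss(classifier[x], y) for (x, y), p in joint.items())


# Hypothetical joint pmf over X in {"a", "b", "c"} and Y in {0, 1}.
joint = {
    ("a", 0): 0.30, ("a", 1): 0.001,
    ("b", 0): 0.20, ("b", 1): 0.01,
    ("c", 0): 0.05, ("c", 1): 0.439,
}
xs = sorted({x for x, _ in joint})

# eta(x) = P(Y = 1 | X = x), computed from the joint pmf.
eta = {x: joint[(x, 1)] / (joint[(x, 0)] + joint[(x, 1)]) for x in xs}
bayes = {x: optimal_rule(eta[x]) for x in xs}

# Brute force: the risk of each of the 2^len(xs) possible classifiers.
all_risks = {
    labels: risk(dict(zip(xs, labels)), joint)
    for labels in product([0, 1], repeat=len(xs))
}
best = min(all_risks, key=all_risks.get)

print("eta:", eta)
print("cost-sensitive rule:", bayes, "risk:", risk(bayes, joint))
print("brute-force best:", dict(zip(xs, best)), "risk:", all_risks[best])
```

With these numbers, input `b` has $P(Y = 1 \mid X = b) \approx 0.048$, so the 0-1 Bayes classifier would call it spam, but the cost-sensitive rule labels it not-spam because wrongly flagging a legitimate message costs 100 times as much as letting a spam message through.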
