Question:

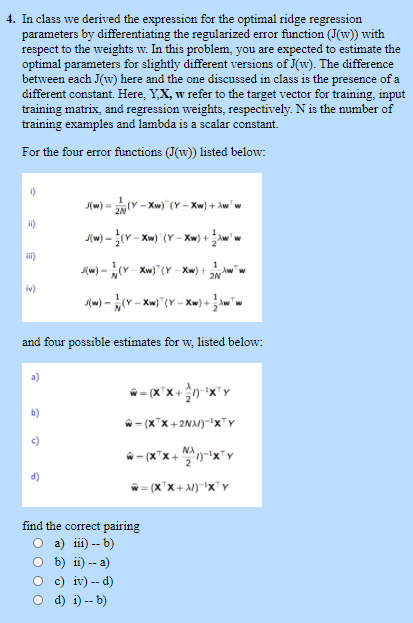

4. In class we derived the expression for the optimal ridge regression parameters by differentiating the regularized error function J(w) with respect to the weights w. In this problem, you are expected to estimate the optimal parameters for slightly different versions of J(w). The difference between each J(w) here and the one discussed in class is the presence of a different constant. Here, Y, X, and w refer to the target vector for training, the input training matrix, and the regression weights, respectively. N is the number of training examples and λ is a scalar constant.

Each error function combines the squared-error term (Y - Xw)^T (Y - Xw) with the penalty term w^T w, with the two terms scaled by different constants involving N and λ. For the four error functions J(w), listed as i) to iv) in the image above, and the four possible estimates for w, listed as a) to d), find the correct pairing:

a) iii) -- b)
b) ii) -- a)
c) iv) -- d)
d) i) -- b)

Step by Step Solution
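
Since every variant differs from the class version only by constants, one way to approach the pairing is to work out the general case once. The sketch below assumes a generic error function with two hypothetical positive constants, $a$ on the squared-error term and $b$ on the penalty term; these symbols are introduced here for illustration and do not appear in the question.

$$
J(w) = a\,(Y - Xw)^T (Y - Xw) + b\, w^T w
$$

$$
\nabla_w J(w) = -2a\, X^T (Y - Xw) + 2b\, w = 0
$$

$$
\left( X^T X + \frac{b}{a}\, I \right) w = X^T Y
\quad\Longrightarrow\quad
w^{*} = \left( X^T X + \frac{b}{a}\, I \right)^{-1} X^T Y
$$

Under this assumption only the ratio $b/a$ matters. As a purely illustrative choice, $a = \frac{1}{2N}$ and $b = \frac{\lambda}{2}$ give $b/a = N\lambda$, so the estimate would be $w^{*} = (X^T X + N\lambda I)^{-1} X^T Y$. Each listed J(w) can be matched to its estimate by reading off its own $a$ and $b$ in the same way.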

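
A pairing of this kind can also be sanity-checked numerically. The following is a minimal sketch, assuming an error function of the generic form J(w) = a (Y - Xw)^T (Y - Xw) + b w^T w, whose gradient vanishes at w = (X^T X + (b/a) I)^{-1} X^T Y. The constants `a` and `b`, the function names, and the random data are all hypothetical and chosen only for illustration; they are not taken from the question.

```python
import numpy as np


def ridge_closed_form(X, Y, a, b):
    """Candidate minimizer of a*(Y - Xw)^T (Y - Xw) + b*w^T w (a, b > 0)."""
    d = X.shape[1]
    # Solve (X^T X + (b/a) I) w = X^T Y rather than forming an explicit inverse.
    return np.linalg.solve(X.T @ X + (b / a) * np.eye(d), X.T @ Y)


def gradient(X, Y, w, a, b):
    """Gradient of a*(Y - Xw)^T (Y - Xw) + b*w^T w with respect to w."""
    return -2.0 * a * X.T @ (Y - X @ w) + 2.0 * b * w


# Hypothetical data: N training examples with d features.
rng = np.random.default_rng(0)
N, d = 50, 3
X = rng.normal(size=(N, d))
Y = rng.normal(size=N)
lam = 0.1

# Illustrative constants only: a = 1/(2N), b = lambda/2, so b/a = N*lambda.
a, b = 1.0 / (2 * N), lam / 2.0
w = ridge_closed_form(X, Y, a, b)

# At the minimizer the gradient should be numerically zero.
grad_norm = np.linalg.norm(gradient(X, Y, w, a, b))
print(grad_norm)
```

To test one of the listed pairings, substitute that error function's constants for `a` and `b` and compare the result with the proposed estimate; a gradient norm near zero indicates the estimate is a stationary point of that J(w).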