Question: 4. Weight Quantization Assuming your design needs to fetch N weights from the memory and the average memory access bandwidth is S bytes per second,

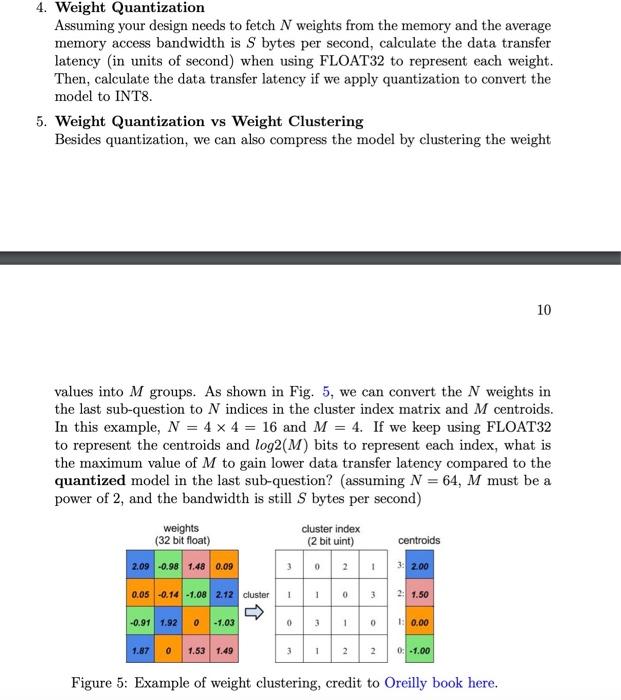

4. Weight Quantization Assuming your design needs to fetch N weights from the memory and the average memory access bandwidth is S bytes per second, calculate the data transfer latency (in units of second) when using FLOAT32 to represent each weight. Then, calculate the data transfer latency if we apply quantization to convert the model to INT8. 5. Weight Quantization vs Weight Clustering Besides quantization, we can also compress the model by clustering the weight 10 values into M groups. As shown in Fig. 5, we can convert the N weights in the last sub-question to N indices in the cluster index matrix and M centroids. In this example, N=44=16 and M=4. If we keep using FLOAT32 to represent the centroids and log2(M) bits to represent each index, what is the maximum value of M to gain lower data transfer latency compared to the quantized model in the last sub-question? (assuming N=64,M must be a power of 2, and the bandwidth is still S bytes per second) viusi Figure 5: Example of weight clustering, credit to Oreilly book here

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts