Question: 4.3 (Matrix calculus)

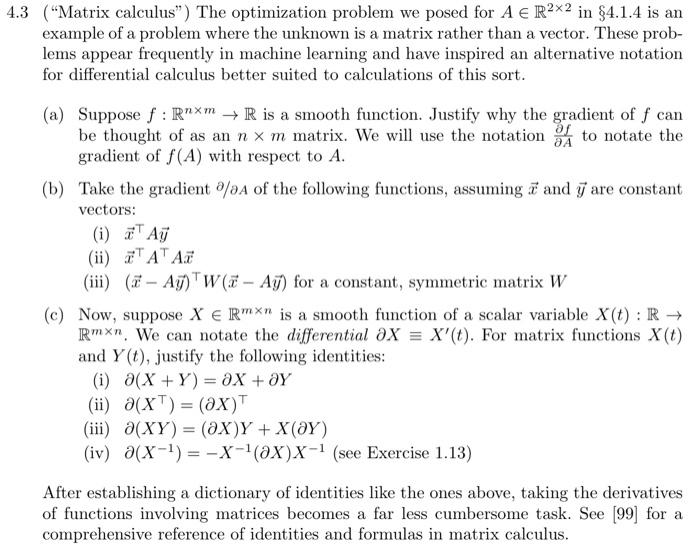

4.3 ("Matrix calculus") The optimization problem we posed for $A \in \mathbb{R}^{2 \times 2}$ in 4.1.4 is an example of a problem where the unknown is a matrix rather than a vector. These problems appear frequently in machine learning and have inspired an alternative notation for differential calculus better suited to calculations of this sort.

(a) Suppose $f : \mathbb{R}^{n \times m} \to \mathbb{R}$ is a smooth function. Justify why the gradient of $f$ can be thought of as an $n \times m$ matrix. We will use the notation $\nabla_A f$ to notate the gradient of $f(A)$ with respect to $A$.

(b) Take the gradient $\partial / \partial A$ of the following functions, assuming $\vec{x}$ and $\vec{y}$ are constant vectors:

- (i) $\vec{x}^\top A \vec{y}$
- (ii) $\vec{x}^\top A^\top A \vec{x}$
- (iii) $(\vec{x} - A\vec{y})^\top W (\vec{x} - A\vec{y})$ for a constant, symmetric matrix $W$

(c) Now, suppose $X \in \mathbb{R}^{m \times n}$ is a smooth function of a scalar variable, $X(t) : \mathbb{R} \to \mathbb{R}^{m \times n}$. We can notate the differential $\partial X \equiv X'(t)$. For matrix functions $X(t)$ and $Y(t)$, justify the following identities:

- (i) $\partial(X + Y) = \partial X + \partial Y$
- (ii) $\partial(X^\top) = (\partial X)^\top$
- (iii) $\partial(XY) = (\partial X)Y + X(\partial Y)$
- (iv) $\partial(X^{-1}) = -X^{-1}(\partial X)X^{-1}$ (see Exercise 1.13)

After establishing a dictionary of identities like the ones above, taking the derivatives of functions involving matrices becomes a far less cumbersome task. See [99] for a comprehensive reference of identities and formulas in matrix calculus.
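One way to sanity-check answers to parts (b) and (c) is numerically. The sketch below is a hedged illustration, not the book's solution. It compares candidate gradients $\vec{x}\vec{y}^\top$, $2(A\vec{z})\vec{z}^\top$ and $-2W(\vec{x} - A\vec{y})\vec{y}^\top$ against central finite differences. These candidates come from differentiating entry by entry; for example, $\partial(\vec{x}^\top A\vec{y})/\partial A_{ij} = x_i y_j$, which is also why the gradient has one entry per entry of $A$, i.e. $n \times m$. It then checks identities (c)(iii) and (c)(iv) on two hand-picked $2 \times 2$ curves. The following are all assumptions chosen for illustration: the helper names (`dot`, `matvec`, `numerical_gradient`, `inv2`, `d_dt`, ...), the sizes $n = 3$, $m = 2$, the random seed, the step size, and the curves `X(t)`, `Y(t)`. In (ii) the vector is called `z` and has length $m$, since $\vec{x}^\top A^\top A\vec{x}$ needs $A\vec{x}$ to be defined.

```python
import random

# Dense-matrix helpers on lists of lists (pure Python, so NumPy is not required).
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def matmul(A, B):
    cols = list(zip(*B))  # columns of B
    return [[dot(row, col) for col in cols] for row in A]

def outer(u, v):
    return [[ui * vj for vj in v] for ui in u]

def add(P, Q, c=1.0):
    """Entrywise P + c * Q."""
    return [[p + c * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def scale(c, M):
    return [[c * e for e in row] for row in M]

def max_abs_diff(P, Q):
    return max(abs(e) for row in add(P, Q, -1.0) for e in row)

def numerical_gradient(f, A, h=1e-6):
    """Central differences: entry (i, j) estimates df/dA_ij, so the result is n x m like A."""
    G = [[0.0] * len(A[0]) for _ in A]
    for i in range(len(A)):
        for j in range(len(A[0])):
            Ap, Am = [r[:] for r in A], [r[:] for r in A]
            Ap[i][j] += h
            Am[i][j] -= h
            G[i][j] = (f(Ap) - f(Am)) / (2.0 * h)
    return G

# ---- Part (b): candidate gradients vs. finite differences (hypothetical sizes) ----
random.seed(0)
n, m = 3, 2
def rand_vec(k):
    return [random.uniform(-1.0, 1.0) for _ in range(k)]

A = [rand_vec(m) for _ in range(n)]              # A is n x m
x, y, z = rand_vec(n), rand_vec(m), rand_vec(m)  # z (length m) plays the role of x in (ii)
B = [rand_vec(n) for _ in range(n)]
W = add(B, [list(col) for col in zip(*B)])       # W = B + B^T is symmetric

def residual(A):
    return [xi - v for xi, v in zip(x, matvec(A, y))]  # x - A y
def f1(A):  # (i)   x^T A y
    return dot(x, matvec(A, y))
def f2(A):  # (ii)  z^T A^T A z = ||A z||^2
    return dot(matvec(A, z), matvec(A, z))
def f3(A):  # (iii) (x - A y)^T W (x - A y)
    return dot(residual(A), matvec(W, residual(A)))

candidates = [
    (f1, outer(x, y)),                                    # x y^T
    (f2, scale(2.0, outer(matvec(A, z), z))),             # 2 (A z) z^T
    (f3, scale(-2.0, outer(matvec(W, residual(A)), y))),  # -2 W (x - A y) y^T
]
grad_errors = [max_abs_diff(numerical_gradient(f, A), g) for f, g in candidates]
print("part (b) max abs errors:", grad_errors)

# ---- Part (c): identities (iii) and (iv) on hypothetical 2 x 2 curves X(t), Y(t) ----
def inv2(M):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def X(t):
    return [[2.0 + t, t * t], [1.0, 3.0 - t]]
def dX(t):  # exact X'(t), computed by hand
    return [[1.0, 2.0 * t], [0.0, -1.0]]
def Y(t):
    return [[t, 1.0], [t ** 3, 2.0]]
def dY(t):  # exact Y'(t), computed by hand
    return [[1.0, 0.0], [3.0 * t * t, 0.0]]

def d_dt(F, t, h=1e-6):
    """Central-difference derivative of a matrix-valued function F(t)."""
    return scale(1.0 / (2.0 * h), add(F(t + h), F(t - h), -1.0))

t0 = 0.4
Xi = inv2(X(t0))
identity_errors = [
    # (iii) product rule: d(XY) = (dX) Y + X (dY)
    max_abs_diff(d_dt(lambda t: matmul(X(t), Y(t)), t0),
                 add(matmul(dX(t0), Y(t0)), matmul(X(t0), dY(t0)))),
    # (iv) inverse rule: d(X^{-1}) = -X^{-1} (dX) X^{-1}
    max_abs_diff(d_dt(lambda t: inv2(X(t)), t0),
                 scale(-1.0, matmul(matmul(Xi, dX(t0)), Xi))),
]
print("part (c) max abs errors:", identity_errors)
```

Each printed value is a maximum absolute discrepancy. With these settings they should all be tiny, at roughly rounding level and well under 1e-6. Dropping the minus sign in (c)(iv), by contrast, would produce a clearly nonzero error. If NumPy is available, the helpers reduce to array operations such as `np.outer` and `np.linalg.inv`. Plain lists are used here only to keep the sketch dependency-free.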
