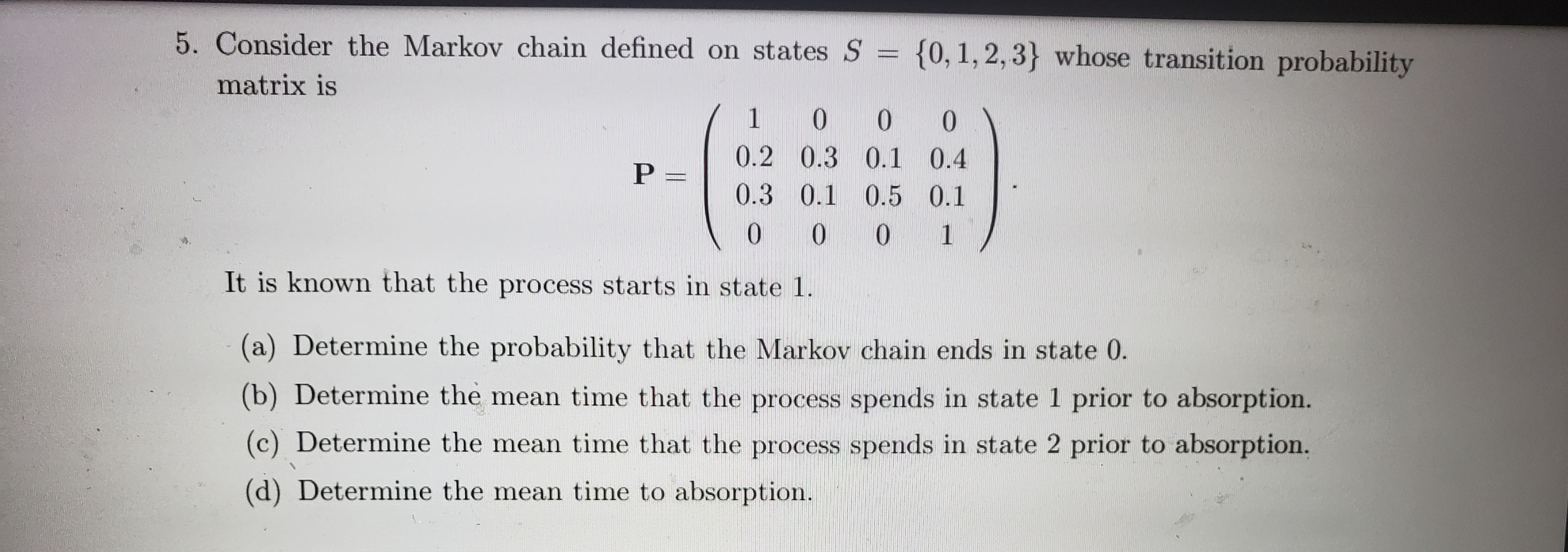

Question 5. Consider the Markov chain defined on states $S = \{0, 1, 2, 3\}$ whose transition probability matrix is

$$
P = \begin{pmatrix}
1 & 0 & 0 & 0 \\
0.2 & 0.3 & 0.1 & 0.4 \\
0.3 & 0.1 & 0.5 & 0.1 \\
0 & 0 & 0 & 1
\end{pmatrix}
$$

It is known that the process starts in state 1.

(a) Determine the probability that the Markov chain ends in state 0.

(b) Determine the mean time that the process spends in state 1 prior to absorption.

(c) Determine the mean time that the process spends in state 2 prior to absorption.

(d) Determine the mean time to absorption.
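
One standard way to answer all four parts is the fundamental-matrix method for absorbing chains. States 0 and 3 are absorbing ($P_{00} = P_{33} = 1$) and states 1 and 2 are transient. Writing $Q$ for the block of $P$ among the transient states and $R$ for the transient-to-absorbing block, $W = (I - Q)^{-1}$ gives the mean number of periods spent in each transient state and $U = WR$ gives the absorption probabilities. The sketch below is an illustration, not the page's hidden expert answer. It assumes the common convention that the mean time spent in the starting state counts the visit at time 0. The helper `invert` and the names `TRANSIENT`, `ABSORBING`, `Q`, `R`, `W`, `U` are hypothetical choices made here.

```python
from fractions import Fraction as F

# Transition matrix from the problem; rows/columns are states 0, 1, 2, 3.
P = [
    [F(1), F(0), F(0), F(0)],
    [F("0.2"), F("0.3"), F("0.1"), F("0.4")],
    [F("0.3"), F("0.1"), F("0.5"), F("0.1")],
    [F(0), F(0), F(0), F(1)],
]

TRANSIENT = [1, 2]   # states that can be left
ABSORBING = [0, 3]   # states with P[i][i] == 1


def invert(m):
    """Invert a square matrix of Fractions by Gauss-Jordan elimination."""
    n = len(m)
    # Augment m with the identity matrix.
    a = [row[:] + [F(int(i == j)) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place.
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in a]


# Q: transitions among transient states; R: transient -> absorbing.
Q = [[P[i][j] for j in TRANSIENT] for i in TRANSIENT]
R = [[P[i][k] for k in ABSORBING] for i in TRANSIENT]

# Fundamental matrix W = (I - Q)^{-1}. W[i][j] is the mean number of periods
# spent in transient state j before absorption, starting from i
# (assumed convention: the visit at time 0 counts).
I_minus_Q = [[F(int(i == j)) - Q[i][j] for j in range(len(Q))] for i in range(len(Q))]
W = invert(I_minus_Q)

# U = W R: probability of ending in each absorbing state.
U = [[sum(W[i][m] * R[m][k] for m in range(len(Q))) for k in range(len(ABSORBING))]
     for i in range(len(Q))]

start = TRANSIENT.index(1)  # the process starts in state 1
print("(a) P(absorbed in 0) =", U[start][ABSORBING.index(0)])
print("(b) mean time in state 1 =", W[start][TRANSIENT.index(1)])
print("(c) mean time in state 2 =", W[start][TRANSIENT.index(2)])
print("(d) mean time to absorption =", sum(W[start]))
```

Run as a script, this prints exact fractions: (a) 13/34 ≈ 0.382, (b) 25/17 ≈ 1.471, (c) 5/17 ≈ 0.294, (d) 30/17 ≈ 1.765. As a hand check for (a), first-step analysis gives $u_1 = 0.2 + 0.3u_1 + 0.1u_2$ and $u_2 = 0.3 + 0.1u_1 + 0.5u_2$, whose solution is $u_1 = 13/34$. `Fraction` is used so the answers come out exact rather than as rounded floats; with NumPy one could instead compute `numpy.linalg.inv(numpy.eye(2) - Q)` on a float array.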
