Question: 5. In this problem we will show that mistake bounded learning is stronger than PAC learning, which should help crystallize both definitions. Let C be

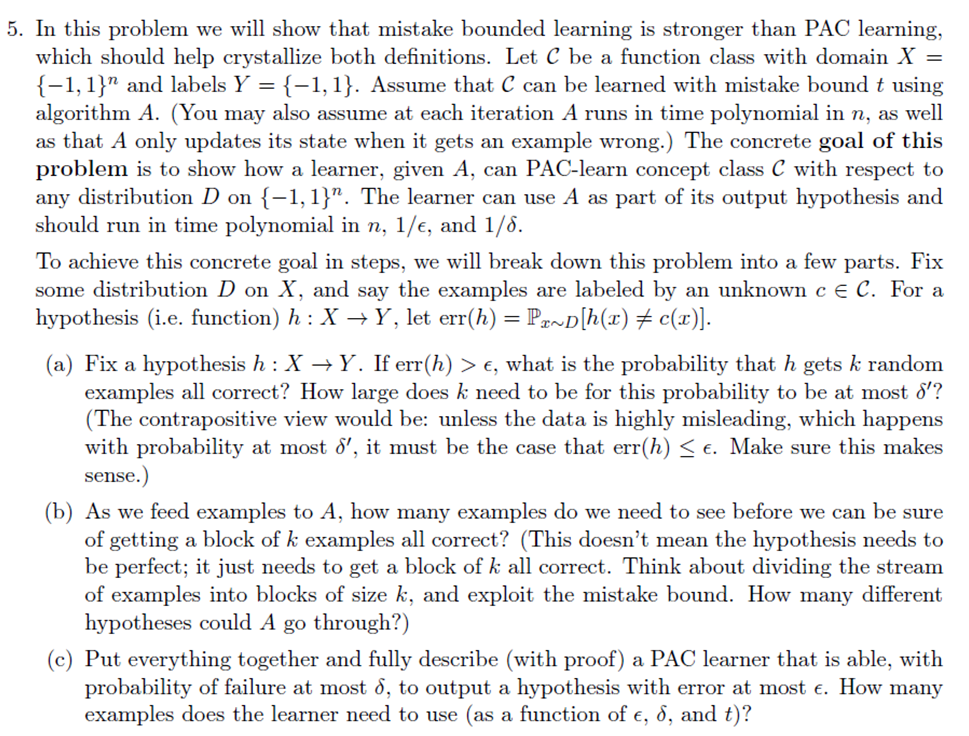

5. In this problem we will show that mistake bounded learning is stronger than PAC learning, which should help crystallize both definitions. Let C be a function class with domain X = {-1,1} and labels Y = {-1,1}. Assume that C can be learned with mistake bound t using algorithm A. (You may also assume at each iteration A runs in time polynomial in n, as well as that A only updates its state when it gets an example wrong.) The concrete goal of this problem is to show how a learner, given A, can PAC-learn concept class C with respect to any distribution D on {-1,1}". The learner can use A as part of its output hypothesis and should run in time polynomial in n, 1/, and 1/8. To achieve this concrete goal in steps, we will break down this problem into a few parts. Fix some distribution D on X, and say the examples are labeled by an unknown c E C. For a hypothesis (i.e. function) h: XY, let err(h) = Pr~D[h(x) = c(x)]. (a) Fix a hypothesis h: X+Y. If err(h) > , what is the probability that h gets k random examples all correct? How large does k need to be for this probability to be at most s'? (The contrapositive view would be: unless the data is highly misleading, which happens with probability at most d', it must be the case that err(h) , what is the probability that h gets k random examples all correct? How large does k need to be for this probability to be at most s'? (The contrapositive view would be: unless the data is highly misleading, which happens with probability at most d', it must be the case that err(h)

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts