Question:

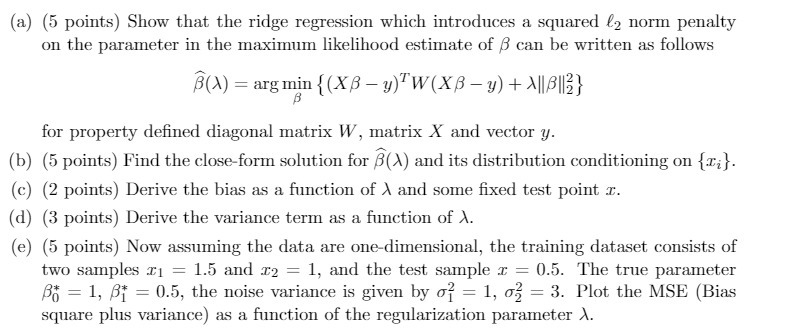

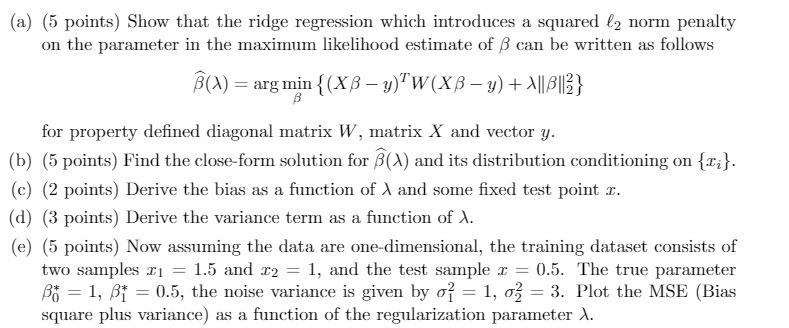

(a) (5 points) Show that ridge regression, which introduces a squared $\ell_2$-norm penalty on the parameter in the maximum likelihood estimate of $\beta$, can be written as

$$\hat{\beta}(\lambda) = \arg\min_{\beta} \left\{ (X\beta - y)^\top W (X\beta - y) + \lambda \|\beta\|_2^2 \right\}$$

for a properly defined diagonal matrix $W$, matrix $X$, and vector $y$.

(b) (5 points) Find the closed-form solution for $\hat{\beta}(\lambda)$ and its distribution conditional on $\{x_i\}$.

(c) (2 points) Derive the bias as a function of $\lambda$ and some fixed test point $x$.

(d) (3 points) Derive the variance term as a function of $\lambda$.

(e) (5 points) Now assume the data are one-dimensional, the training dataset consists of two samples $x_1 = 1.5$ and $x_2 = 1$, and the test sample is $x = 0.5$. The true parameters are $\beta_0^* = 1$ and $\beta_1^* = 0.5$, and the noise variances are $\sigma_1^2 = 1$ and $\sigma_2^2 = 3$. Plot the MSE (squared bias plus variance) as a function of the regularization parameter $\lambda$.
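
For part (e), a minimal numerical sketch may help check a hand derivation. It rests on several assumptions that the question text does not confirm: the model is $y_i = \beta_0^* + \beta_1^* x_i + \varepsilon_i$ with independent $\varepsilon_i \sim N(0, \sigma_i^2)$, the design matrix carries an intercept column, the penalty applies to both coefficients, and $W = \mathrm{diag}(1/\sigma_i^2)$. Under those assumptions, with $G = X^\top W X$ and $A = G + \lambda I$, the estimator is $\hat{\beta}(\lambda) = A^{-1} X^\top W y$, the bias at a test point $x$ is $-\lambda\, x^\top A^{-1} \beta^*$, and the variance is $x^\top A^{-1} G A^{-1} x$. The names `bias_variance`, `mse_curve`, and `plot_mse` are illustrative only.

```python
import numpy as np

# Assumed setup, reconstructed from the question (a sketch, not a reference solution):
# y_i = beta0* + beta1* * x_i + eps_i, with independent eps_i ~ N(0, sigma_i^2).
X = np.array([[1.0, 1.5],
              [1.0, 1.0]])           # rows are [1, x_i]; the intercept column is an assumption
beta_star = np.array([1.0, 0.5])     # assumed order: [beta0*, beta1*]
sigma2 = np.array([1.0, 3.0])        # assumed noise variances sigma_1^2, sigma_2^2
W = np.diag(1.0 / sigma2)            # weights from the Gaussian log-likelihood
x_test = np.array([1.0, 0.5])        # test point with the intercept term prepended


def bias_variance(lam):
    """Return (bias, variance) of the prediction x_test @ beta_hat(lam)."""
    G = X.T @ W @ X
    A_inv = np.linalg.inv(G + lam * np.eye(X.shape[1]))
    # E[beta_hat] - beta* = (A^{-1} G - I) beta* = -lam * A^{-1} beta*
    bias = -lam * (x_test @ A_inv @ beta_star)
    # Cov(beta_hat) = A^{-1} G A^{-1}, because Cov(y) = W^{-1}
    variance = x_test @ A_inv @ G @ A_inv @ x_test
    return bias, variance


def mse_curve(lams):
    """Squared bias plus variance for each lambda in lams."""
    values = np.array([bias_variance(lam) for lam in lams])
    return values[:, 0] ** 2 + values[:, 1]


def plot_mse(lams):
    """Plot the MSE curve; matplotlib is imported lazily so the math runs without it."""
    import matplotlib.pyplot as plt

    plt.plot(lams, mse_curve(lams))
    plt.xlabel("regularization parameter lambda")
    plt.ylabel("MSE = bias^2 + variance")
    plt.show()
```

Calling `plot_mse(np.linspace(0.0, 10.0, 200))` draws the curve. Under the assumed values, the estimator is unbiased at $\lambda = 0$ with variance 13, and as $\lambda \to \infty$ the variance vanishes while the squared bias approaches $1.25^2 = 1.5625$. If the intended model has no intercept or leaves it unpenalized, `X`, `x_test`, and the penalty matrix would need to change accordingly.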
