Question: A Modern Day Trolley Problem

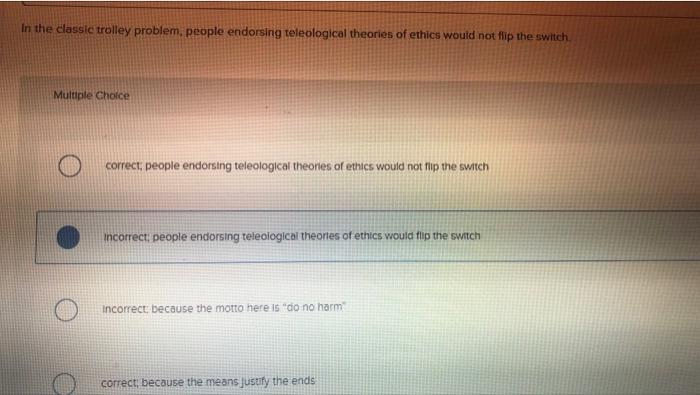

This activity is important because it illustrates a variant of a classic problem in ethics. It is the so-called trolley problem, and working through it will help reinforce your knowledge of various terms and theories in the field. Specifically, the possible courses of action one can take with this problem each map onto one of the existing theories of ethics mentioned in the chapter. Can you identify which actions correspond to which theory?

The goal of this activity is to identify how possible responses to the trolley problem map onto various theories of ethics. Read the case study below, and then answer the questions that follow.

A trolley is running out of control down a track. In its path are five people who have been tied to the rails. Fortunately, you can flip a switch, which will lead the trolley down a second track. Unfortunately, there is a single person tied to that second track. Diverting the trolley to this track will kill the single person. When asked about this scenario, some people have no problem flipping the switch and others refuse to do so. At any rate, the act of flipping the switch or not maps onto the different theories of ethics presented in this chapter. What a utilitarian might do here differs from what those endorsing a deontological theory would do.

There is a modern-day counterpart to the trolley problem involving driverless cars. Uber, Tesla, Google, and so on must program ethics into how these cars should operate when faced with an impending collision. For example, imagine a driverless Uber vehicle cruising down the road. In one second, a no-fault, unavoidable, fatal accident involving one or another person will occur. Uber's computer programmers must decide who gets killed. Their decision can be framed as a moral argument. Here are three possible arguments:

(1) We have a duty to protect our customers. They pay a premium for driverless cars, partly because they are safer. Therefore, we should program the car to protect the driver first.

(2) Uber lacks the moral authority to make this decision, and so the software should not intervene (i.e., the car should stay its course). If the car is headed toward Sue, it should not divert toward Joe. Fate (vs. the software's "morality") should determine the outcome.

(3) The software should take the least tragic path. For example, as with the trolley problem, the car should crash into one person to avoid crashing into five.

As this scenario illustrates, business decisions are often ethical decisions as well.

In the classic trolley problem, people endorsing teleological theories of ethics would not flip the switch.

Multiple Choice

- correct; people endorsing teleological theories of ethics would not flip the switch
- incorrect; people endorsing teleological theories of ethics would flip the switch
- incorrect, because the motto here is "do no harm"
- correct, because the means justify the ends
- correct, because the means justify the ends
- correct, because flipping the switch is predicted by virtue ethics