Question: A learning agent interacts with an MDP (S, A, T, R, 7), where S = A = {a1, a2, a3). No discounting is used

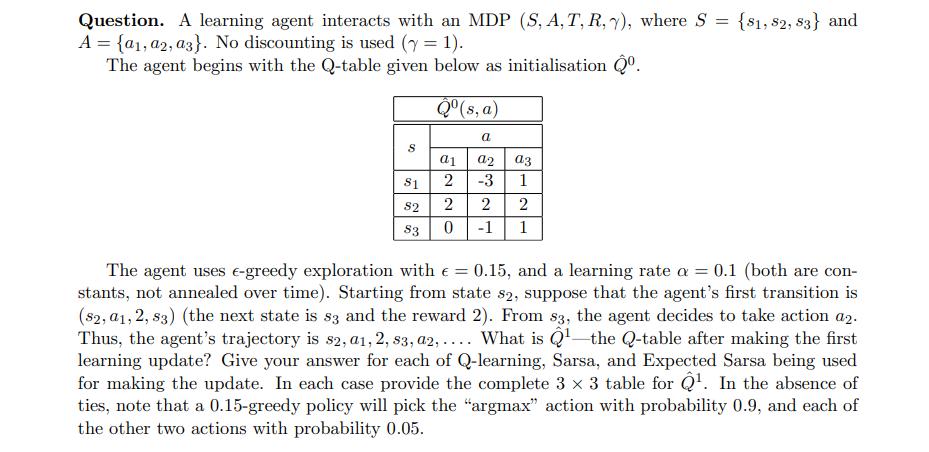

A learning agent interacts with an MDP (S, A, T, R, 7), where S = A = {a1, a2, a3). No discounting is used (y = 1). The agent begins with the Q-table given below as initialisation Q. S $1 82 83 Q(s, a) a1 220 a a2 -3 2 -1 a3 1 2 1 {81, 82, 83) and The agent uses e-greedy exploration with = 0.15, and a learning rate a = 0.1 (both are con- stants, not annealed over time). Starting from state s2, suppose that the agent's first transition is (82, 01, 2, 83) (the next state is s3 and the reward 2). From $3, the agent decides to take action a2. Thus, the agent's trajectory is s2, a1, 2, 83, a2, .... What is Q the Q-table after making the first learning update? Give your answer for each of Q-learning, Sarsa, and Expected Sarsa being used for making the update. In each case provide the complete 3 x 3 table for Q. In the absence of ties, note that a 0.15-greedy policy will pick the "argmax" action with probability 0.9, and each of the other two actions with probability 0.05.

Step by Step Solution

3.39 Rating (152 Votes )

There are 3 Steps involved in it

The detailed ... View full answer

Get step-by-step solutions from verified subject matter experts