Question:

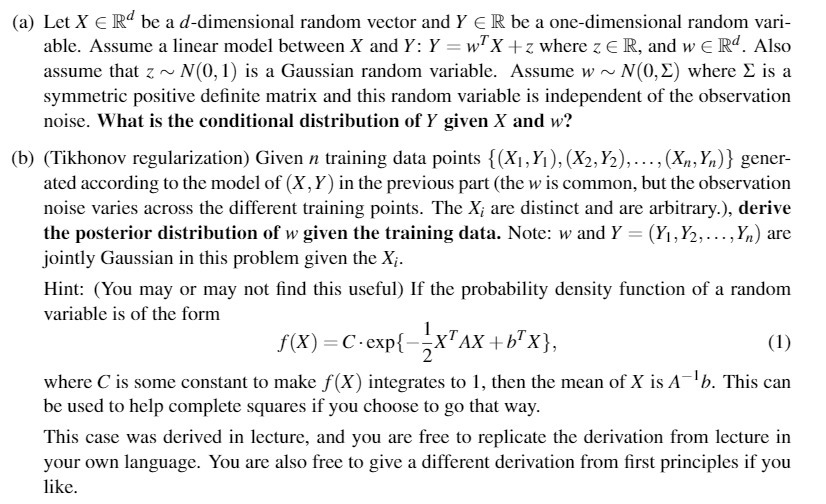

(a) Let $X \in \mathbb{R}^d$ be a $d$-dimensional random vector and $Y \in \mathbb{R}$ be a one-dimensional random variable. Assume a linear model between $X$ and $Y$: $Y = w^\top X + z$, where $z \in \mathbb{R}$ and $w \in \mathbb{R}^d$. Also assume that $z \sim N(0, 1)$ is a Gaussian random variable. Assume $w \sim N(0, \Sigma)$, where $\Sigma$ is a symmetric positive definite matrix, and that this random variable is independent of the observation noise. What is the conditional distribution of $Y$ given $X$ and $w$?

(b) (Tikhonov regularization) Given $n$ training data points $\{(X_1, Y_1), (X_2, Y_2), \ldots, (X_n, Y_n)\}$ generated according to the model of $(X, Y)$ in the previous part (the $w$ is common, but the observation noise varies across the different training points. The $X_i$ are distinct and are arbitrary), derive the posterior distribution of $w$ given the training data.

Note: $w$ and $Y = (Y_1, Y_2, \ldots, Y_n)$ are jointly Gaussian in this problem given the $X_i$.

Hint: (You may or may not find this useful.) If the probability density function of a random variable is of the form

$$f(X) = C \cdot \exp\left\{-\tfrac{1}{2} X^\top A X + b^\top X\right\}, \tag{1}$$

where $C$ is some constant that makes $f(X)$ integrate to 1, then the mean of $X$ is $A^{-1} b$. This can be used to help complete squares if you choose to go that way. This case was derived in lecture, and you are free to replicate the derivation from lecture in your own language. You are also free to give a different derivation from first principles if you like.
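As a hedged sanity check rather than the lecture's derivation: for part (a), conditioning on $X$ and $w$ leaves only the noise random, so $Y \mid X, w \sim N(w^\top X, 1)$. For part (b), stacking the $X_i$ as rows of an $n \times d$ matrix and completing the square with the hint gives $A = X^\top X + \Sigma^{-1}$ and $b = X^\top Y$, so the posterior of $w$ should be Gaussian with mean $A^{-1} b$ and covariance $A^{-1}$. The sketch below compares that form against the standard joint-Gaussian conditioning formula on synthetic data. The function name `posterior_of_w`, the particular `Sigma`, and the sample sizes are illustrative assumptions, not part of the problem.

```python
import numpy as np

def posterior_of_w(X_mat, Y, Sigma):
    """Posterior mean and covariance of w, assuming unit noise variance.

    X_mat: (n, d) matrix whose rows are the X_i (hypothetical name).
    Y:     (n,) vector of observations.
    Sigma: (d, d) symmetric positive definite prior covariance of w.
    """
    A = X_mat.T @ X_mat + np.linalg.inv(Sigma)  # posterior precision (A in the hint)
    b = X_mat.T @ Y                             # linear term (b in the hint)
    mean = np.linalg.solve(A, b)                # A^{-1} b
    cov = np.linalg.inv(A)                      # A^{-1}
    return mean, cov

# Synthetic data drawn from the model in part (a); all values are illustrative.
rng = np.random.default_rng(0)
n, d = 50, 3
Sigma = np.array([[2.0, 0.3, 0.0],
                  [0.3, 1.0, 0.2],
                  [0.0, 0.2, 0.5]])
w_true = rng.multivariate_normal(np.zeros(d), Sigma)
X_mat = rng.normal(size=(n, d))
Y = X_mat @ w_true + rng.normal(size=n)

mean, cov = posterior_of_w(X_mat, Y, Sigma)

# Cross-check by conditioning the joint Gaussian of (w, Y):
# Cov(w, Y) = Sigma X^T and Cov(Y) = X Sigma X^T + I.
K = X_mat @ Sigma @ X_mat.T + np.eye(n)
mean_alt = Sigma @ X_mat.T @ np.linalg.solve(K, Y)
cov_alt = Sigma - Sigma @ X_mat.T @ np.linalg.solve(K, X_mat @ Sigma)

# Gradient of the Tikhonov objective ||Y - Xw||^2 / 2 + w^T Sigma^{-1} w / 2
# evaluated at the posterior mean; it should vanish.
grad = X_mat.T @ (X_mat @ mean - Y) + np.linalg.inv(Sigma) @ mean
```

The two routes agree by the matrix inversion lemma, and the vanishing gradient shows why the posterior mean coincides with a Tikhonov-regularized least-squares estimate with penalty matrix $\Sigma^{-1}$.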
