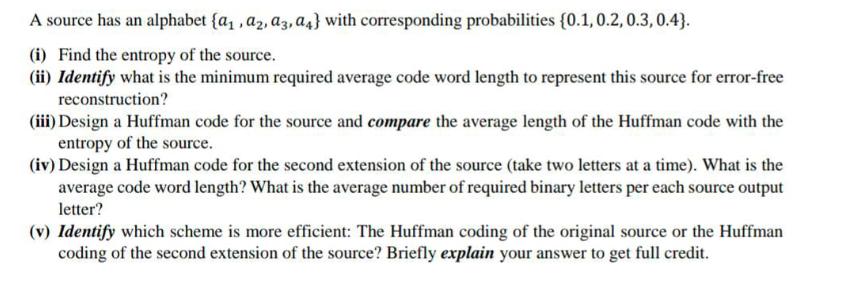

Question: A source has an alphabet {a1, a2, a3, a4} with corresponding probabilities {0.1, 0.2, 0.3, 0.4}. (i) Find the entropy of the source. (ii) What is the minimum required average codeword length to represent this source for error-free reconstruction? (iii) Design a Huffman code for the source and compare the average length of the Huffman code with the entropy of the source. (iv) Design a Huffman code for the second extension of the source (take two letters at a time). What is the average codeword length? What is the average number of required binary letters per source output letter? (v) Which scheme is more efficient: Huffman coding of the original source or Huffman coding of the second extension of the source? Briefly explain your answer to get full credit.

Step by Step Solution



(i) Entropy of the source. The entropy of the source is calculated using the formula $H = \sum_i p_i \log_2(1/p_i)$, where $p_i$ is the probability of symbol $a_i$. …
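The sketch below is a minimal Python version of the calculations in parts (i) to (v). It assumes the source is memoryless, so each pair in the second extension has probability $P(xy) = P(x)P(y)$. The helpers `entropy`, `huffman_code` and `average_length` are hypothetical names chosen for this illustration and are not part of the original solution. Huffman ties may be broken differently than in a hand-worked answer. That can change the individual codewords, but it does not change the average length, because every Huffman code for a given distribution is optimal.

```python
import heapq
import itertools
import math


def entropy(probs):
    """Shannon entropy in bits: H = sum_i p_i * log2(1 / p_i)."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


def huffman_code(symbol_probs):
    """Build a binary Huffman code and return {symbol: codeword}."""
    tie = itertools.count()  # unique tie-breaker so heapq never compares dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in symbol_probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Repeatedly merge the two least probable subtrees, prefixing
        # "0" to codewords in one subtree and "1" to those in the other.
        p0, _, codes0 = heapq.heappop(heap)
        p1, _, codes1 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes0.items()}
        merged.update({s: "1" + c for s, c in codes1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]


def average_length(symbol_probs, code):
    """Expected codeword length: sum_i p_i * len(codeword_i)."""
    return sum(p * len(code[s]) for s, p in symbol_probs.items())


source = {"a1": 0.1, "a2": 0.2, "a3": 0.3, "a4": 0.4}

# Second extension: ordered pairs of letters, assuming a memoryless
# source so that P(xy) = P(x) * P(y).
pairs = {
    x + y: px * py
    for (x, px), (y, py) in itertools.product(source.items(), repeat=2)
}

H = entropy(source.values())
code1 = huffman_code(source)
L1 = average_length(source, code1)
code2 = huffman_code(pairs)
L2 = average_length(pairs, code2)

print(f"H = {H:.4f} bits/letter")
print(f"single letters: L = {L1:.4f} bits/letter, efficiency H/L = {H / L1:.4f}")
print(f"pairs: L = {L2:.4f} bits/pair = {L2 / 2:.4f} bits/letter, "
      f"efficiency = {H / (L2 / 2):.4f}")
for sym in sorted(code1):
    print(sym, code1[sym])
```

For this source, the script should report $H \approx 1.846$ bits/letter. This is also the lower bound asked for in (ii), because no uniquely decodable code can have an average length below $H$. The single-letter Huffman code uses lengths 3, 3, 2, 1 for $a_1, \dots, a_4$, giving $\bar{L} = 1.9$ bits/letter. The second-extension code averages $\bar{L}_2 = 3.73$ bits/pair, which is $1.865$ bits per source letter. Coding pairs therefore comes closer to the entropy (efficiency of about 99.0% versus 97.2%), as the bound $H \le \bar{L}_n/n < H + 1/n$ predicts.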
