Question: (a) With initial point $x_0 = [0.5\ \ 0.1]^T$, perform two GD iterations by hand for the Rosenbrock function $f(x_1, x_2) = (x_1 - 1)^2 + 100(x_1^2 - x_2)^2$.

![(a) With initial point xo = [0.5 0.1]', perform two GD](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66ebb37743e38_93466ebb376ba35c.jpg)

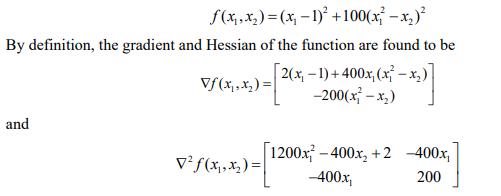

(a) With initial point $x_0 = [0.5\ \ 0.1]^T$, perform two gradient-descent (GD) iterations by hand for the Rosenbrock function $f(x_1, x_2) = (x_1 - 1)^2 + 100(x_1^2 - x_2)^2$. Refer to Example 2.2 for its gradient and Hessian. Use the optimized step size $\alpha_0 = 0.0026$ for the first iteration and $\alpha_1 = 0.0592$ for the second iteration.

(b) With the same initial point, perform two Newton iterations by hand for the Rosenbrock function. Use the optimized step size $\alpha_0 = 1$ for the first iteration and $\alpha_1 = 0.2640$ for the second iteration.

(c) It is known that the Rosenbrock function possesses a global minimizer $x^* = [1\ \ 1]^T$ at which $f(x^*) = 0$. Use this information to evaluate the performance of the GD and Newton algorithms in terms of how close the iterates come to the global solution and how much reduction in the objective function each iteration of the GD and Newton algorithms achieves.

$f(x_1, x_2) = (x_1 - 1)^2 + 100(x_1^2 - x_2)^2$
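By definition, the gradient and Hessian of the function are found to be

$$\nabla f(x_1, x_2) = \begin{bmatrix} 2(x_1 - 1) + 400x_1(x_1^2 - x_2) \\ -200(x_1^2 - x_2) \end{bmatrix}$$

and

$$\nabla^2 f(x_1, x_2) = \begin{bmatrix} 1200x_1^2 - 400x_2 + 2 & -400x_1 \\ -400x_1 & 200 \end{bmatrix}.$$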
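The hand calculations in (a) to (c) can be cross-checked numerically. The sketch below is one possible way to do that, assuming NumPy is available; the helper names (`f`, `grad`, `hess`, `fd_grad`, `gd_iterates`, `newton_iterates`) are hypothetical. It codes the function, gradient, and Hessian exactly as given above, applies GD ($x_{k+1} = x_k - \alpha_k \nabla f(x_k)$) and a damped Newton step ($x_{k+1} = x_k + \alpha_k d_k$ with $\nabla^2 f(x_k)\, d_k = -\nabla f(x_k)$) using the stated step sizes (no line search is performed here), and prints $f(x_k)$ and $\|x_k - x^*\|$ for each iterate. It is a checking aid, not the by-hand working the question asks for.

```python
import numpy as np


def f(x):
    """Rosenbrock function as written above: (x1 - 1)^2 + 100 (x1^2 - x2)^2."""
    x1, x2 = x
    return (x1 - 1.0) ** 2 + 100.0 * (x1 ** 2 - x2) ** 2


def grad(x):
    """Analytic gradient, copied from the formula above."""
    x1, x2 = x
    return np.array([2.0 * (x1 - 1.0) + 400.0 * x1 * (x1 ** 2 - x2),
                     -200.0 * (x1 ** 2 - x2)])


def hess(x):
    """Analytic Hessian, copied from the formula above (symmetric 2x2)."""
    x1, x2 = x
    return np.array([[1200.0 * x1 ** 2 - 400.0 * x2 + 2.0, -400.0 * x1],
                     [-400.0 * x1, 200.0]])


def fd_grad(x, h=1e-6):
    """Central-difference gradient, used only to sanity-check grad()."""
    x = np.asarray(x, dtype=float)
    e = np.eye(len(x))
    return np.array([(f(x + h * e[i]) - f(x - h * e[i])) / (2.0 * h)
                     for i in range(len(x))])


def gd_iterates(x0, steps):
    """Gradient descent with the given (pre-computed) step sizes alpha_k."""
    xs = [np.asarray(x0, dtype=float)]
    for alpha in steps:
        xs.append(xs[-1] - alpha * grad(xs[-1]))
    return xs


def newton_iterates(x0, steps):
    """Damped Newton: x_{k+1} = x_k + alpha_k * d_k with H(x_k) d_k = -grad f(x_k)."""
    xs = [np.asarray(x0, dtype=float)]
    for alpha in steps:
        x = xs[-1]
        # Solve the 2x2 linear system instead of forming the inverse Hessian.
        d = np.linalg.solve(hess(x), -grad(x))
        xs.append(x + alpha * d)
    return xs


x0 = [0.5, 0.1]
x_star = np.array([1.0, 1.0])  # known global minimizer, f(x*) = 0

gd = gd_iterates(x0, [0.0026, 0.0592])
newton = newton_iterates(x0, [1.0, 0.2640])

for name, xs in (("GD", gd), ("Newton", newton)):
    for k, x in enumerate(xs):
        print(f"{name} k={k}: x={np.round(x, 4)}, f={f(x):.4f}, "
              f"||x - x*||={np.linalg.norm(x - x_star):.4f}")
```

As a by-hand anchor: $f(x_0) = 2.5$ and $\nabla f(x_0) = [29\ \ {-30}]^T$, so the first GD step gives $x_1 = [0.4246\ \ 0.178]^T$. For Newton, $\nabla^2 f(x_0) = \begin{bmatrix} 262 & -200 \\ -200 & 200 \end{bmatrix}$, and the full step ($\alpha_0 = 1$) gives $x_1 = [16/31\ \ 33/124]^T \approx [0.5161\ \ 0.2661]^T$. Solving $H d = -\nabla f$ rather than inverting $H$ is the usual numerically preferred choice; for a 2×2 system by hand, the explicit inverse yields the same direction.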
