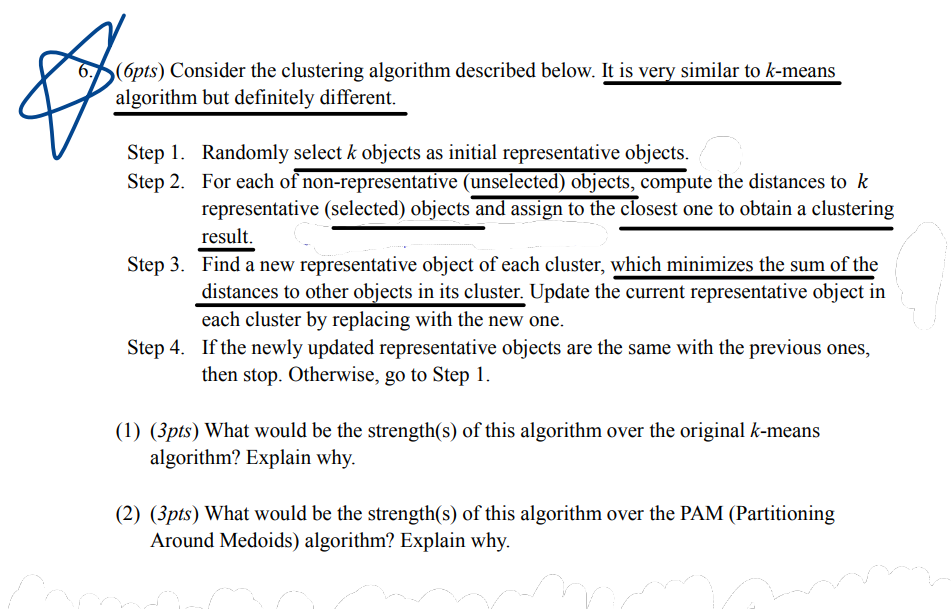

Question: algorithm but definitely different. Step 1 . Randomly select k objects as initial representative objects. Step 2 . For each of non - representative (

algorithm but definitely different.

Step Randomly select objects as initial representative objects.

Step For each of nonrepresentative unselected objects, compute the distances to

representative selected objects and assign to the closest one to obtain a clustering

result.

Step Find a new representative object of each cluster, which minimizes the sum of the

distances to other objects in its cluster. Update the current representative object in

each cluster by replacing with the new one.

Step If the newly updated representative objects are the same with the previous ones,

then stop. Otherwise, go to Step

pts What would be the strengths of this algorithm over the original means

algorithm? Explain why.

pts What would be the strengths of this algorithm over the PAM Partitioning

Around Medoids algorithm? Explain why.

pts Suppose that we perform PCA using the fivedimensional dataset shown below.

tableXXXXX

How much variability of the dataset can be explained by the first principal component? Explain why.

pts Consider the similarity matrix of four data points shown below.

pts Find the optimal clustering result that maximizes the following quantity,

where is the similarity between object i and and indicates the th cluster.

Notice that the number of clusters is If there are multiple optimal results, find them all.

pts Covert similarities to distances and cluster the four points using complete linkage.

Draw a dendrogram. pts Answer the following questions using the datasets in the figure shown below. Note that each dataset contains items and transactions. Dark cells indicate ones presence of items and white cells indicate zeros absence of items We will apply the apriori algorithm to extract frequent itemsets with minsupie itemsets must be contained in at least transactions.

cIpt Which datasets will produce the most number of frequent itemsets? Explain why.

lpt Which datasets will produce the fewest number of frequent itemsets? Explain why.

pt Which datasets will produce the longest frequent itemset? Explain why.

lpt Which datasets will prodyce the frequent itemset with highest support? Explain

why.

pt Which datasets will pooduce frequent itemsets with widevarying support levels?

Explain why.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock