Question: alldocuments = [] iterate the directories for each file open the file and use readlines() to read all lines fetch the lines related to Subject

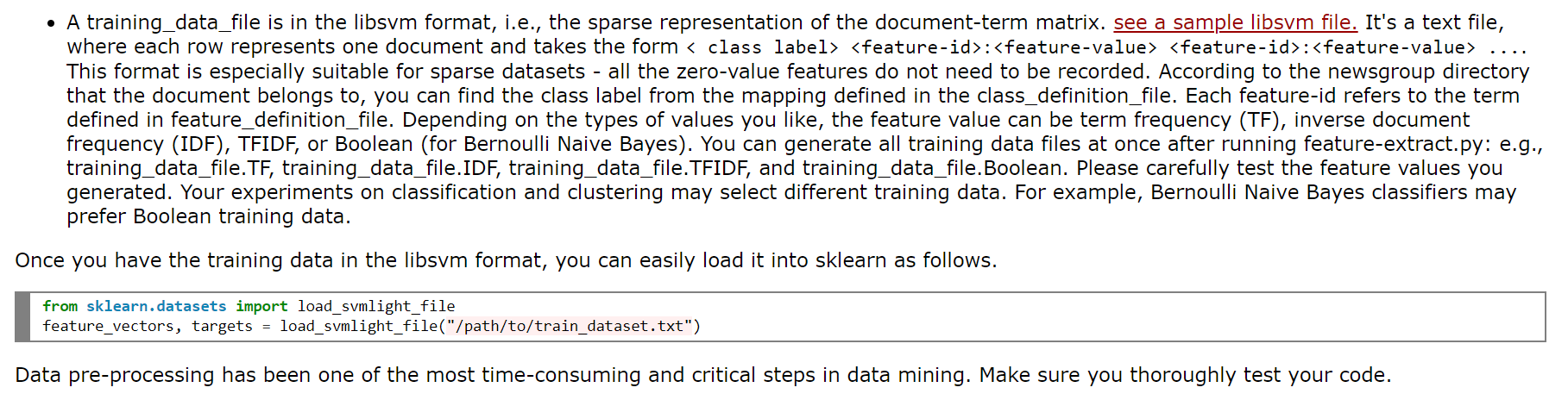

    alldocuments = []
    iterate the directories
        for each file
            open the file and use readlines() to read all lines
            fetch the lines related to "Subject" and the body indicated by "Lines: number"
            put all document-related information into a list ["subdirectory", [list of the subject and body lines]]
            append the list to alldocuments

    iterate alldocuments:
        for each document
            pre-process the lines part and convert them to terms
            append a dictionary of {term: term-frequency} to the document structure:
            now you have ["subdirectory", [list of the subject and body lines], {term: term-frequency, term: term-frequency, ...}]

    term-dict = {}
    term-doc-freq = {}  # document frequency
    iterate all documents:
        for each document:
            for each term in the last part, the {term: term-frequency} dictionary
                if it's not in term-dict
                    add the term to term-dict, with default feature-id set to 0
                if not in term-doc-freq
                    term-doc-freq[term] = 1
                else
                    term-doc-freq[term] += 1

    get all keys from term-dict and sort them
    feature-id = 0
    for each key in the sorted keys
        term-dict[key] = feature-id
        feature-id += 1

    class-dict = {}
    create the dictionary for subdirectory -> class

In this task, you will develop the program feature-extract.py, which has the following usage.

    python feature-extract.py directory_of_newsgroups_data feature_definition_file class_definition_file training_data_file

where

- input: directory_of_newsgroups_data is the directory of the unzipped newsgroups data,
- output: feature_definition_file contains (term, feature_id) pairs,
- output: class_definition_file contains (class_name, class_id) pairs,
- output: training_data_file has a specific format, which will be described later.

feature-extract.py contains the following two components, 1.1 and 1.2.

1.1 Document preprocessing. For each document, the preprocessing steps include:

- split the document into a list of tokens and lowercase the tokens;
- remove the stopwords, using this list of stopwords;
- stemming: use a stemmer in NLTK.
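The preprocessing steps listed above (tokenize, lowercase, remove stopwords, stem) could be sketched roughly as follows. This is only an illustration, not the required implementation: the names `preprocess`, `term_frequencies`, and `STOPWORDS` are hypothetical, the stopword set is a tiny placeholder for the list the assignment points to, and the regex tokenizer is one possible choice among many. `PorterStemmer` is one of the stemmers NLTK provides; the sketch falls back to no stemming if NLTK is not installed.

```python
import re
from collections import Counter

try:
    # PorterStemmer is one of the stemmers shipped with NLTK.
    from nltk.stem import PorterStemmer
    _default_stem = PorterStemmer().stem
except ImportError:
    # Assumption for this sketch only: without NLTK, leave tokens unstemmed.
    def _default_stem(token):
        return token

# Placeholder stopwords; the assignment supplies its own list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "in"}


def preprocess(lines, stopwords=STOPWORDS, stem=_default_stem):
    """Turn subject/body lines into a list of lowercased, stemmed terms."""
    terms = []
    for line in lines:
        # Lowercase first, then keep runs of letters and digits as tokens.
        for token in re.findall(r"[a-z0-9]+", line.lower()):
            if token not in stopwords:
                terms.append(stem(token))
    return terms


def term_frequencies(lines, stopwords=STOPWORDS, stem=_default_stem):
    """Build the {term: term-frequency} dictionary for one document."""
    return Counter(preprocess(lines, stopwords, stem))


if __name__ == "__main__":
    print(term_frequencies(["Subject: Graphics cards", "The cards are fast"]))
```

Passing `stem` and `stopwords` as parameters keeps the function easy to test and makes it simple to swap in a different NLTK stemmer or the real stopword list.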
When you parse the documents, you may look only at the subject and body. The subject line is indicated by the keyword "Subject:", while the number of lines in the body is indicated by "Lines: xx"; the body is the last xx lines of the document. Some files may be missing the "Lines: xx" line or have other exceptions. Please manually add the "Lines: xx" line for these few files and handle the exceptions appropriately. If you believe other fields of the documents might also be useful, you are free to include them. If you are interested, you may also try other preprocessing steps described in the class.

At the end of preprocessing, each document is converted to a bag of terms. You can collect all the unique terms, sort them, and create the dictionary.

1.2 Generating the document vector representation. According to the dictionary, you assign each term an integer id, the feature_id, starting from 0. The (term, feature_id) pairs will be written to the feature_definition_file. The 20 newsgroups are grouped into 6 classes: (comp.graphics, comp.os.ms-windows.misc, comp.sys.ibm.pc.hardware, comp.sys.mac.hardware, .

A training_data_file is in the libsvm format, i.e., the sparse representation of the document-term matrix; see a sample libsvm file. It's a text file, where each row represents one document and takes the form
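As a rough sketch of the parsing and vectorization described above, the code below pulls the subject and body out of one file's lines, assigns sorted feature ids starting from 0, counts document frequencies, and formats one row. All function names are hypothetical. The row layout assumes the commonly documented libsvm convention of a label followed by space-separated `index:value` pairs in ascending index order. The page does not say which value to store, so raw term frequency is used here purely as an assumption.

```python
import re


def extract_subject_and_body(lines):
    """Return [subject, body line, ...] using 'Subject:' and 'Lines: xx'."""
    subject, n_body = None, None
    for line in lines:
        # Only the first occurrence of each header is used.
        if subject is None and line.startswith("Subject:"):
            subject = line[len("Subject:"):].strip()
        match = re.match(r"Lines:\s*(\d+)", line)
        if n_body is None and match:
            n_body = int(match.group(1))
    if n_body is None:
        # The assignment asks for these files to be fixed by hand.
        raise ValueError("no 'Lines: xx' header found")
    body = lines[-n_body:] if n_body > 0 else []
    return [subject or ""] + [text.rstrip("\n") for text in body]


def build_feature_ids(doc_term_freqs):
    """Sort all unique terms and number them from 0."""
    vocabulary = sorted({term for tf in doc_term_freqs for term in tf})
    return {term: feature_id for feature_id, term in enumerate(vocabulary)}


def document_frequencies(doc_term_freqs):
    """Count in how many documents each term appears."""
    doc_freq = {}
    for tf in doc_term_freqs:
        for term in tf:
            doc_freq[term] = doc_freq.get(term, 0) + 1
    return doc_freq


def to_libsvm_row(class_id, term_freqs, feature_ids):
    """Format one document as 'label index:value ...' with ascending indices."""
    pairs = sorted((feature_ids[term], freq) for term, freq in term_freqs.items())
    return " ".join([str(class_id)] + [f"{i}:{v}" for i, v in pairs])


if __name__ == "__main__":
    docs = [{"cat": 2, "hat": 1}, {"cat": 1, "dog": 3}]
    ids = build_feature_ids(docs)
    for class_id, tf in zip([0, 1], docs):
        print(to_libsvm_row(class_id, tf, ids))
```

Sorting the `(feature_id, value)` pairs before joining keeps the indices in ascending order within each row, which libsvm-style readers generally expect.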
