Question: Answer Part 2 Plz: REINFORCE: Monte - Carlo Policy - Gradient Control ( episodic ) for * Input: a differentiable policy parameterization ( a |

Answer Part Plz: REINFORCE: MonteCarlo PolicyGradient Control episodic for

Input: a differentiable policy parameterization

Algorithm parameter: step size

Initialize policy parameter eg to

Loop forever for each episode:

Generate an episode dots, following

Loop for each step of the episode dots, :

Glarr

Ggradln

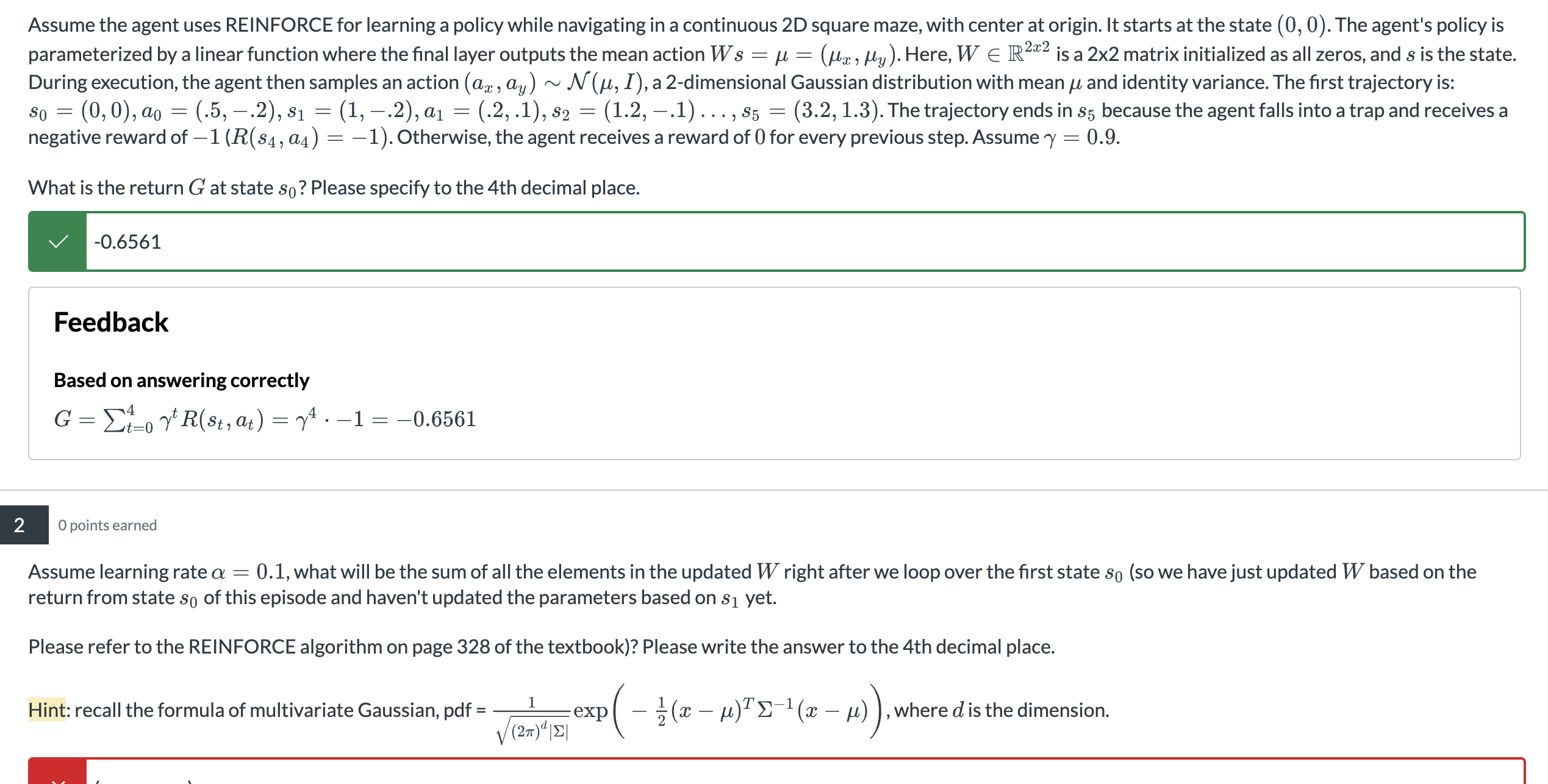

Assume the agent uses REINFORCE for learning a policy while navigating in a continuous D square maze, with center at origin. It starts at the state The agent's policy is

parameterized by a linear function where the final layer outputs the mean action Here, is a matrix initialized as all zeros, and is the state.

During execution, the agent then samples an action a dimensional Gaussian distribution with mean and identity variance. The first trajectory is:

The trajectory ends in because the agent falls into a trap and receives a

negative reward of Otherwise, the agent receives a reward of for every previous step. Assume

What is the return at state Please specify to the th decimal place.

Feedback

Based on answering correctly

points earned

Assume learning rate what will be the sum of all the elements in the updated right after we loop over the first state so we have just updated based on the

return from state of this episode and haven't updated the parameters based on yet.

Please refer to the REINFORCE algorithm on page of the textbook Please write the answer to the th decimal place.

Hint: recall the formula of multivariate Gaussian, exp where is the dimension.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock