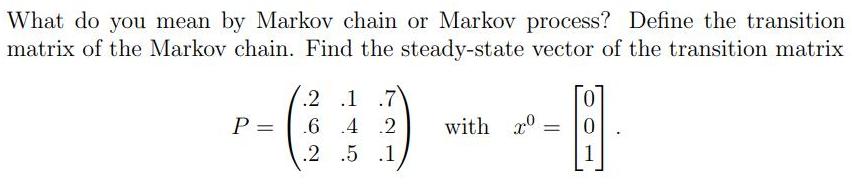

What is meant by a Markov chain (or Markov process)? Define the transition matrix of a Markov chain. Find the steady-state vector of the transition matrix

$$P = \begin{bmatrix} .2 & .1 & .7 \\ .6 & .4 & .2 \\ .2 & .5 & .1 \end{bmatrix}$$

with $\mathbf{x} = \ldots$
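A worked sketch of the steady-state computation, assuming the column-stochastic convention $\mathbf{x}_{k+1} = P\mathbf{x}_k$ (each column of $P$ sums to 1, which holds for this $P$). The steady-state vector $\mathbf{q}$ is the probability vector with $P\mathbf{q} = \mathbf{q}$, i.e. $(P - I)\mathbf{q} = \mathbf{0}$ with $q_1 + q_2 + q_3 = 1$:

$$
P - I = \begin{bmatrix} -.8 & .1 & .7 \\ .6 & -.6 & .2 \\ .2 & .5 & -.9 \end{bmatrix}
$$

Scaling rows 1 and 2 by 10 (and halving row 2):

$$
\begin{aligned}
-8q_1 + q_2 + 7q_3 = 0 \quad&\Longrightarrow\quad q_2 = 8q_1 - 7q_3,\\
3q_1 - 3q_2 + q_3 = 0 \quad&\Longrightarrow\quad -21q_1 + 22q_3 = 0 \;\Longrightarrow\; q_1 = \tfrac{22}{21}q_3,\quad q_2 = \tfrac{29}{21}q_3.
\end{aligned}
$$

So $\mathbf{q} \propto (22, 29, 21)^T$, which also satisfies row 3: $2(22) + 5(29) - 9(21) = 0$. Normalizing by $22 + 29 + 21 = 72$:

$$
\mathbf{q} = \frac{1}{72}\begin{bmatrix} 22 \\ 29 \\ 21 \end{bmatrix} = \begin{bmatrix} 11/36 \\ 29/72 \\ 7/24 \end{bmatrix} \approx \begin{bmatrix} .3056 \\ .4028 \\ .2917 \end{bmatrix}
$$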
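The same computation can be checked in code. This is a minimal sketch, assuming $P$ is column-stochastic (columns sum to 1) and has a unique steady state; the function name `steady_state` is hypothetical. It solves $(P - I)\mathbf{q} = \mathbf{0}$ together with $q_1 + \dots + q_n = 1$ by Gauss-Jordan elimination in exact rational arithmetic, so no floating-point rounding enters the answer.

```python
from fractions import Fraction


def steady_state(P):
    """Return q with P q = q and sum(q) == 1 (hypothetical helper).

    Assumes P is column-stochastic with a unique steady state.
    Entries of P may be decimal strings such as "0.2", which
    Fraction converts exactly.
    """
    n = len(P)
    # Augmented matrix [P - I | 0].
    A = [[Fraction(P[i][j]) - (1 if i == j else 0) for j in range(n)] + [Fraction(0)]
         for i in range(n)]
    # The rows of P - I sum to the zero vector, so one row is redundant:
    # replace it with the normalization q_1 + ... + q_n = 1.
    A[-1] = [Fraction(1)] * n + [Fraction(1)]
    for col in range(n):
        # Pick the first row at or below `col` with a nonzero entry in this column.
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        # Scale the pivot row so its leading entry is 1.
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    # The last column now holds the solution.
    return [A[i][n] for i in range(n)]


P = [["0.2", "0.1", "0.7"],
     ["0.6", "0.4", "0.2"],
     ["0.2", "0.5", "0.1"]]
q = steady_state(P)
print(q)  # [Fraction(11, 36), Fraction(29, 72), Fraction(7, 24)]

# Check that P q = q holds exactly.
Pq = [sum(Fraction(P[i][j]) * q[j] for j in range(3)) for i in range(3)]
print(Pq == q)  # True
```

Replacing one equation of $(P - I)\mathbf{q} = \mathbf{0}$ with the normalization row is valid only because those equations are linearly dependent for a stochastic matrix; without that step the homogeneous system has infinitely many solutions.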
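The transition matrix can also be seen in action: under the column convention, entry $p_{ij}$ is the probability of moving from state $j$ to state $i$, and each step of the chain maps a probability vector $\mathbf{x}_k$ to $\mathbf{x}_{k+1} = P\mathbf{x}_k$. The sketch below iterates that rule from a hypothetical starting vector (state 1 with certainty) for an arbitrarily chosen 50 steps; the helper name `iterate_chain` is also hypothetical. For this $P$ the iterates settle on the steady-state vector.

```python
def iterate_chain(P, x0, steps):
    """Apply x_{k+1} = P x_k `steps` times (hypothetical helper).

    Assumes P is column-stochastic, so each x_k stays a probability vector.
    """
    x = list(x0)
    for _ in range(steps):
        x = [sum(P[i][j] * x[j] for j in range(len(x))) for i in range(len(P))]
    return x


P = [[0.2, 0.1, 0.7],
     [0.6, 0.4, 0.2],
     [0.2, 0.5, 0.1]]
x0 = [1.0, 0.0, 0.0]  # hypothetical initial state: start in state 1
x = iterate_chain(P, x0, 50)
print([round(v, 4) for v in x])  # [0.3056, 0.4028, 0.2917]
```

Convergence here is fast because the other two eigenvalues of $P$ have modulus $\sqrt{0.14} \approx 0.37$, so the distance to the steady state shrinks by roughly that factor per step.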
